Apigee mTLS: Ports usage


Hello Apigee Community,

On the Apigee mTLS documentation page,

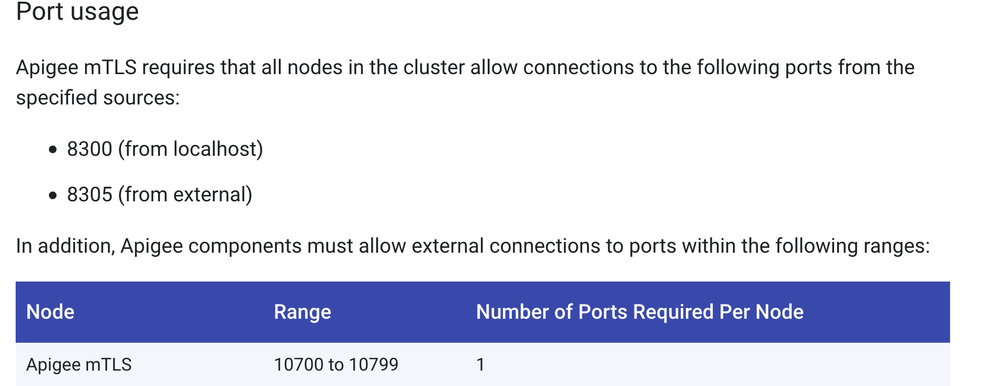

I see the following requirements:

Does this mean that each Apigee mTLS node communicates with the other Apigee mTLS nodes via port 8305?

For example:

Node 1 (Port 10700) communicates with Node 2 via 8305.

Node 2 (Port 10701) communicates with Node 1 via 8305.

Thank you in advance for your help!

Labels: Private Cloud Deployment


Hi Anton,

Port 8305 is used by the cluster members to talk to the mTLS control plane, which is the Consul server in this case. Each cluster member needs to be able to reach the Consul server ring on port 8305. However, the port need not be opened on all nodes, only on the ones that are running the Consul server. Additionally, you can ensure that the only IP addresses allowed to connect to that port are the cluster member IPs.

This looks like a bug in the documentation, introduced when we made this change between the alpha release and the GA release. I will file a doc bug to get this fixed.

In addition, I believe it would be beneficial to call out specifically what the following three ports are used for:

- 8300: Consul Server

- 8305: RPC port for communication with the server

- 8302: WAN port for gossip in multi-dc setup

Thanks for pointing this out. I will get the changes made ASAP.
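To verify firewall rules for the ports listed above, a quick TCP reachability check can help. The following is a minimal sketch, not an Apigee or Consul tool: the port descriptions are copied from this post, your installation may use different numbers, and the function names are made up for illustration. It only tests TCP connectivity, so any UDP gossip traffic is not covered.

```python
import socket

# Port roles as described in this thread; your installation may differ.
MTLS_PORTS = {
    8300: "Consul Server",
    8305: "RPC port for communication with the server",
    8302: "WAN port for gossip in multi-DC setup",
}


def port_is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_servers(hosts, ports=MTLS_PORTS):
    """Map every (host, port) pair to a reachability flag."""
    return {(h, p): port_is_reachable(h, p) for h in hosts for p in ports}
```

Run from a cluster member, `check_servers(["10.0.0.1", "10.0.0.2", "10.0.0.3"])` (hypothetical Consul server addresses) returns a dictionary showing which of the three ports accept connections on each server.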


Hi Argo,

Thank you, this is very helpful. Please allow me a few follow-up questions:

- When you say “consul server”, do you mean the servers installed on the ZooKeeper nodes?

- Also, what is the Apigee mTLS Node mentioned in the table in the documentation? Is this actually the Consul Server?

Regarding port usage:

- If I understand correctly, all Consul proxies (egress, I assume) talk to these Consul servers using port 8305, right?

- Port 8300 needs to be opened only on Consul Servers (for localhost), correct?

- In sum, ports 8305 & 8300 need to be open only on Consul Server nodes?

Also, are the Administration Machine and Consul Servers required for operations after Apigee mTLS has been configured?

What if one of the Consul Servers becomes unresponsive? Will this have an impact on operations?

Thank you very much in advance. I understand that this is a lot to cover in one comment, but mTLS is very important for our use case.

Best regards,

Anton


Hi Anton,

I am following up to answer the remaining open follow-up questions.

You can find the Consul documentation on port usage here (note our port numbers might differ from the defaults).

Note that the mTLS solution relies on the same binary/daemon, running in one of two modes:

a) Server mode. This is a tier-0 service, so if you deploy only one server, the setup cannot be highly available. For a zero-downtime production guarantee, Consul requires 3 server nodes, which elect a leader using the Raft consensus algorithm. These can be deployed on dedicated nodes or alongside existing topology nodes (as in our example for a dual-DC install; I have added details in the following answer). For more details, see also here.

b) Sidecar mode. Each sidecar is controlled by the Consul server(s) and provides bidirectional mTLS communication with the other nodes of the mesh.

> When you say “consul server”, do you mean the servers installed on the ZooKeeper nodes?

Yes, the wording "Consul Server" is used above to refer to one or more nodes running the Consul daemon in server mode.

Assuming you are referring to the example in the dual-DC installation, then - yes - that topology describes the installation of three Consul Server nodes in each of the two regions, looking at the IP addresses listed in the two configuration files.

Ref doc here.

> Also, what is the Apigee mTLS Node mentioned in the table in the documentation? Is this actually the Consul Server?

Yes. Given the explanation in the points above, this should be clear now.

> If I get it correctly, all Consul proxies (egress, I assume) talk to these Consul servers using the 8305 port, right?

Consul proxies (i.e. the sidecars) - running on different nodes than the Consul Servers - use target TCP port 8305 to connect to all Consul Servers in our setup. Details here.

> Port 8300 needs to be opened only on Consul Servers (for localhost), correct?

and:

> In sum, ports 8305 & 8300 need to be open only on Consul Server nodes?

Only on Consul Servers, but 8300 is a TCP RPC port and needs to be exposed to the other Consul Servers (if you choose to have a minimum of 3 per data centre as a tier-0 HA service).

> Also, are the Administration Machine and Consul Servers required for operations after Apigee mTLS has been configured?

Consul Servers: yes, they coordinate the sidecars, providing health-check services and latency monitoring.

Admin Machine: this is the machine used to set up the CA and generate the configuration files. It is not involved in the traffic. If no other components are installed and running on it, you don't need to keep it up and running. However, you will still need its attached filesystem, and you will need to bring it online again for upgrades, certificate refresh and rotation, and topology changes.

> What if one of the Consul Servers becomes unresponsive? Will this have an impact on operations?

Yes. For HA and zero-downtime operations, you will need to:

a) Deploy 3 Consul Servers per DC (so that no server is a single point of failure).

b) Monitor their health.

If the current leader disappears, the remaining servers will elect a new leader. See above.
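The reason for 3 servers follows from Raft's majority rule: a cluster can elect a leader only while more than half of its servers are reachable. A small sketch of that arithmetic (the function names are illustrative and not part of any Consul API):

```python
def raft_quorum(servers: int) -> int:
    """Smallest majority of a Raft cluster with the given number of servers."""
    if servers < 1:
        raise ValueError("a cluster needs at least one server")
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """How many servers can fail while a majority is still available."""
    return servers - raft_quorum(servers)


for n in (1, 3, 5):
    print(f"{n} server(s): quorum={raft_quorum(n)}, tolerates {failure_tolerance(n)} failure(s)")
# 1 server(s): quorum=1, tolerates 0 failure(s)
# 3 server(s): quorum=2, tolerates 1 failure(s)
# 5 server(s): quorum=3, tolerates 2 failure(s)
```

With a single server, any failure stops the control plane; with 3 servers per DC, one can become unresponsive and the remaining two still form a majority.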

Let me know if you need further clarifications or we need to dig deeper.

Regards,

Nicola


I have installed the system and learned a bit about it, and everything seems to be working perfectly. So here are a couple of explanations for the community:

All bootstrapping communication for the sidecars (proxy services) is made via HTTPS queries on port 8503 (mTLS) from the sidecars to your ZooKeeper cluster (where you have the mTLS servers installed). The proxies pull the relevant metadata according to their service ID, defined in server.json and also in the service start-up script, e.g.:

"id": "x-node-10-10-10-10"

From there, they run local upstreams, e.g. 127.0.0.1:xxxxx. All traffic is routed to the relevant local ports and forwarded to the 'destination' configured in server.json under the relevant service. Each port on localhost is reserved for a particular target service. On the target server, the traffic is decrypted and re-routed to the original local port.
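To make the local-upstream idea concrete, here is a rough sketch of a plain TCP forwarder that listens on a localhost port and relays each connection to a configured destination. This is only an illustration of the routing pattern: it is not how the Consul sidecar is implemented, it does no TLS or encryption at all, and the function names are invented for this example.

```python
import socket
import threading


def _pipe(src, dst):
    """Copy bytes from src to dst until src closes, then half-close dst."""
    try:
        while True:
            data = src.recv(4096)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)
        except OSError:
            pass


def _handle(client, destination):
    """Relay one client connection to the destination in both directions."""
    try:
        upstream = socket.create_connection(destination, timeout=5)
    except OSError:
        client.close()
        return
    upstream.settimeout(None)  # block normally once connected
    back = threading.Thread(target=_pipe, args=(upstream, client), daemon=True)
    back.start()
    _pipe(client, upstream)
    back.join()
    client.close()
    upstream.close()


def start_local_upstream(destination, listen_port=0):
    """Listen on 127.0.0.1 and forward every connection to destination.

    Returns the listening socket; port 0 lets the OS pick a free port.
    """
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", listen_port))
    listener.listen()

    def accept_loop():
        while True:
            try:
                client, _ = listener.accept()
            except OSError:
                return  # listener was closed
            threading.Thread(
                target=_handle, args=(client, destination), daemon=True
            ).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return listener
```

An application would then connect to `listener.getsockname()` on localhost instead of the remote service. In the real setup, the sidecar additionally wraps the hop between nodes in mutual TLS.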

From a ports point of view:

You have to open the 8xxx ports from the mTLS service to your ZooKeeper nodes:

- 8503: the HTTPS (API) endpoint for fetching the metadata

- 8301: broadcast and other communication between nodes (it is forwarded if needed)

- 8302: only needed for a multi-DC Apigee deployment

- 8502: I didn't notice any traffic on this port; it all seems to go via 8503. According to the docs, it is reserved for the Envoy proxy, not the built-in one

Between the nodes of your cluster (the ZooKeeper nodes), you also need to open ports for internal communication.

I hope this clarifies some questions.

P.S. The whole system works like clockwork.
