- File Transfer via HTTP - Apigee On-premise - Guide...

What are best practises for file transfer via HTTP?

Generally a bad idea unless the file size is small.

A better idea, if you have larger files (images, archives), is to use Apigee as the control plane to generate a signed URL: a time-limited URL intended for one use. Apigee would then send THAT to the client, and the client could directly contact the storage system, like Google Cloud Storage, AWS S3, or Azure Storage.

From my friend Steve:

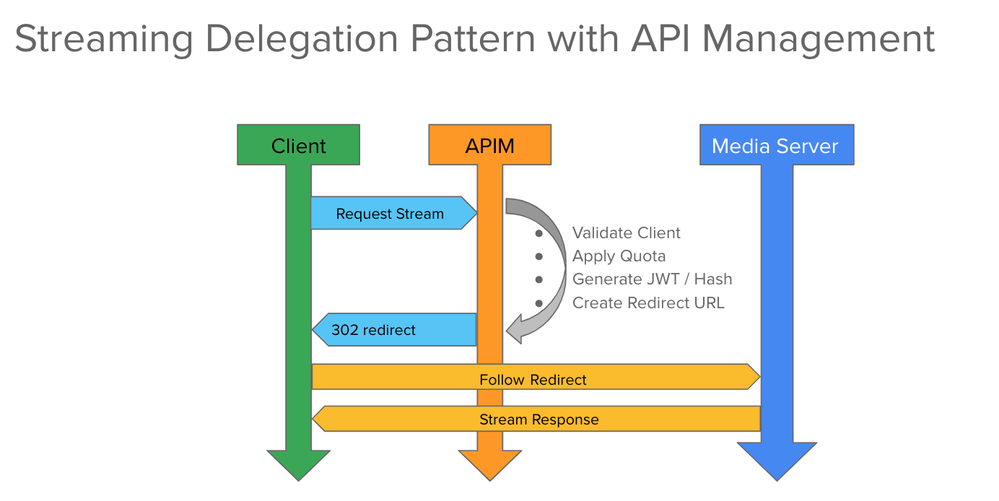

The client sends a request to Apigee to retrieve a large stream. Apigee authenticates it, perhaps applies rate limits and so on, then generates a signed URL and redirects the client to the storage server. The client follows the redirect to a server that serves the stream directly. This lets the backend stream/media platform scale horizontally, and it enables features such as resuming a stream if the connection drops partway through.

The key question is "what is small, and what is large?" If you look at the service limits for Apigee, the largest file you should expect to transmit through the proxy is 10 MB (check the limits doc). But anything file-ish that is over 1 MB is probably too much data.

Google Cloud Storage supports the pattern I described through its "Signed URLs" feature. But you can implement this on your own backend streaming or storage serving system without too much trouble. The pattern is the same.
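To make the "implement this on your own backend" idea concrete, here is a minimal sketch of a home-grown signed URL using an HMAC over the path and an expiry time. This is NOT the Google Cloud Storage signing scheme; the `expires` and `sig` query parameter names, the `SECRET` value, and the `sign_url` / `verify_url` helpers are all made up for illustration. The gateway would call `sign_url` and redirect the client; the storage server would call `verify_url` before serving the file.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical shared secret, known only to the gateway and the storage server.
SECRET = b"replace-with-a-real-secret"


def _signature(path: str, expires: int, secret: bytes) -> str:
    """HMAC-SHA256 over the path and expiry, hex encoded."""
    message = f"{path}\n{expires}".encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def sign_url(base_url: str, path: str, ttl_seconds: int,
             secret: bytes = SECRET, now: Optional[float] = None) -> str:
    """Gateway side: build a URL that is valid for ttl_seconds.

    Assumes `path` is already URL-safe (no characters that need escaping).
    """
    issued = time.time() if now is None else now
    expires = int(issued) + ttl_seconds
    query = urlencode({"expires": expires,
                       "sig": _signature(path, expires, secret)})
    return f"{base_url}{path}?{query}"


def verify_url(url: str, secret: bytes = SECRET,
               now: Optional[float] = None) -> bool:
    """Storage side: accept only unexpired URLs with a matching signature."""
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False  # missing or malformed parameters
    current = time.time() if now is None else now
    if current > expires:
        return False  # link has expired
    expected = _signature(parts.path, expires, secret)
    # Constant-time comparison avoids leaking signature bytes via timing.
    return hmac.compare_digest(sig, expected)
```

Note that this sketch only enforces the time limit. Making a link truly single-use would need extra state on the storage side (for example, remembering which signatures have already been redeemed), which is left out here.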

BTW here is an Apigee proxy that shows how to implement this pattern with GCS as the backend:

https://github.com/DinoChiesa/ApigeeEdge-Java-GoogleUrlSigner

File transfer over HTTP doesn't sound good. FTP is fine. Apigee should not be used for file transfer.
