- Google Cloud

- Cloud Forums

- Apigee

- Identify source of 30 second proxy latency

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've recently started getting complaints from clients that proxies that normally are sub-second will occasionally take >30 seconds.

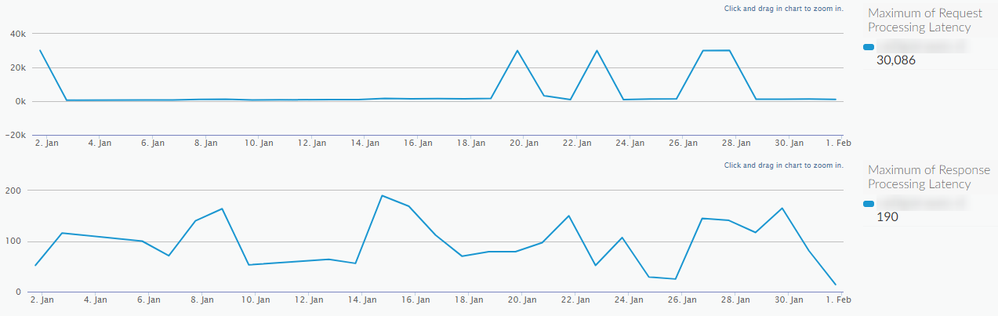

I used a custom report to help identify whether the spike is happening on the request or response, and its definitely on the 'request' side of the flow. Its always a spike of 30s.

Heres an example where you can see the request side spikes up to 30Kms while response is consistently under 200ms.

This is happening a few times a day across a handful of proxies. We don't know how to recreate it so I've never been able to catch it while running a trace.

Any advice or suggestions for what I can do to narrow it down further and identify which specific policy or sharedFlow that is creating these delays?

edit: These are executing in Edge public cloud in a paid org.

- Labels:

-

API Runtime

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Do you have any policies such as a service callout or javascript making external call outs?

Also, you should look at analytics relating to your proxy's target as well. Response processing latency is not target response time, but time on your proxy's response flows ie policies.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Dane,

Thanks for taking the time to respond!

Yes, the proxies do all use a sharedFlow we use for validating authentication. The sharedFlow also has a javascript policy. We use this sharedFlow on over 250 proxies, but I'm seeing this problem happen on only 13 proxies so I'm hesitant to blame the sharedFlow quite yet. Its worth some extra scrutiny though.

I've been running custom reports and comparing with logs some more and this does not appear to be a critical problem. Most of the time the client receives a '200 OK' and its only happening to about 0.1% of the traffic, so my hunch is that this is related to a component in AWS needing to auto-scale.

I recognize that the "request" and "response" processing latency is time spent within Apigee and not the targetServer. I could tell from other reports that the backend targets were not the source of these 30 second delays, so my next step was trying to determine if it was on the 'request' vs 'response' flow of the proxy. As seen in the 'exhibit-a', its definitely on the 'request'.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As per another question of a similar nature,

Use https://github.com/yuriylesyuk/ets utility to automate sampling of traces. For each captured trace identify requests that take more than 30 seconds. You can create a trivial script that would parse trace files and identify times spent in each request and each policy.

Make ets utility invoked in a loop and let it run... until you catch your culprit.

I have attached an awk script that filters out debug request id, policy name, and policy duration.

WARNING, it is a gawk awk's dialect [for regex]!

Command:

$ awk -f trace-policyelapsedtimes.awk trace-1581013540920.xml | awk '$3 > 30'

will print out any policies, whose duration is more than 30 seconds.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'll give this a try, thank you!

-

Analytics

497 -

API Hub

75 -

API Runtime

11,664 -

API Security

175 -

Apigee General

3,029 -

Apigee X

1,273 -

Developer Portal

1,907 -

Drupal Portal

43 -

Hybrid

461 -

Integrated Developer Portal

87 -

Integration

309 -

PAYG

13 -

Private Cloud Deployment

1,068 -

User Interface

75

| User | Count |

|---|---|

| 2 | |

| 1 | |

| 1 | |

| 1 | |

| 1 |

Twitter

Twitter