- What are the pros & cons of making HTTP calls from...

We have a requirement to call cache-related policies in a loop. Since Apigee does not support looping over policies, we are trying to implement it using one of these approaches:

1) Put the caching logic in a separate proxy, and invoke that proxy in a loop via `httpClient` from a JavaScript policy.

2) Call a shared flow X times (but here X has to be a static number).

Since approach 2 is a less dynamic design, we want to understand the risks of opting for approach 1.

After reading some community posts and the proxy chaining documentation, we understand that every HTTP call made from a JavaScript policy involves a network hop (i.e., a round trip through the Apigee load balancer). Also, the number of API calls directly affects pricing, so we would need a justification if we go with approach 1.
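
To make the pricing concern concrete, here is a back-of-the-envelope sketch. It assumes that each `httpClient` call in approach 1 goes back through the load balancer to another Apigee proxy and is metered as one extra API call, while a shared flow (approach 2) runs inside the original request and adds none. Both assumptions should be checked against your own Apigee plan; the traffic figures are hypothetical.

```javascript
// Rough estimate of metered API calls per day for the two approaches.
// Assumption: every httpClient call in approach 1 hits another Apigee proxy
// and counts as one extra API call; shared-flow calls (approach 2) do not.
function estimateDailyCalls(requestsPerDay, messagesPerRequest, approach) {
  if (approach === 1) {
    // One inbound call plus one chained proxy call per message.
    return requestsPerDay * (1 + messagesPerRequest);
  }
  if (approach === 2) {
    // Shared flows execute within the inbound call.
    return requestsPerDay;
  }
  throw new Error("approach must be 1 or 2");
}

// Hypothetical traffic: 10,000 requests/day, 3 messages per response.
console.log(estimateDailyCalls(10000, 3, 1)); // 40000
console.log(estimateDailyCalls(10000, 3, 2)); // 10000
```

Under these assumptions, approach 1 multiplies metered traffic by (1 + messages per response), on top of the added latency of each network hop.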

Hi @Sonal

As you rightly mentioned, your option 2 does not scale well as a design. You are also correct about the network hop in option 1.

Before I comment further, I'd like to understand why you need to call the cache policies in a loop. Can you explain the use case, please? We may be able to come up with a design that needs only one lookup.

Our use case is to extract and cache individual messages from a response containing an array of messages. In the example JSON below, we would like to store and retrieve each message using its id as the cache key.

```json
{
  "msg" : [
    {
      "id" : 1234,
      "body" : "Msg3"
    },
    {
      "id" : 5678,
      "body" : "Msg2"
    },
    {
      "id" : 3345,
      "body" : "Msg4"
    }
  ]
}
```
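
The part of this use case that needs a loop is splitting the array into one key/value pair per message. A minimal plain-JavaScript sketch, assuming the `msg` array shape shown above; `toCacheEntries` and the `msg-` key prefix are hypothetical choices. Each resulting pair is what one cache write would need to store.

```javascript
// Split a response holding an array of messages into one cache entry per
// message, keyed by the message id (prefix is a hypothetical choice).
function toCacheEntries(payload, keyPrefix) {
  var messages = (payload && Array.isArray(payload.msg)) ? payload.msg : [];
  return messages.map(function (m) {
    return { key: keyPrefix + m.id, value: JSON.stringify(m) };
  });
}

var response = {
  msg: [
    { id: 1234, body: "Msg3" },
    { id: 5678, body: "Msg2" },
    { id: 3345, body: "Msg4" }
  ]
};

var entries = toCacheEntries(response, "msg-");
entries.forEach(function (e) { console.log(e.key, e.value); });
// msg-1234 {"id":1234,"body":"Msg3"}
// msg-5678 {"id":5678,"body":"Msg2"}
// msg-3345 {"id":3345,"body":"Msg4"}
```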

Why not do the looping in a nodejs target? This is exactly the use case for which the nodejs target in Apigee Edge was intended.

If each additional outbound request goes to an Apigee Edge endpoint, then yes, you may incur additional cost for those API calls. But if each additional request is sent to an API endpoint not hosted on Apigee Edge, there is no additional cost.

Your nodejs logic can also employ caching of responses to minimize requests and latency.
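
Read-through caching of this kind fits in a few lines. This is a minimal in-process sketch that assumes synchronous fetches for brevity (a nodejs target would wrap an asynchronous request the same way); `createTtlCache`, `getOrFetch`, and `fetchMessage` are hypothetical names. An in-process map like this is local to one running instance, unlike the shared Apigee cache.

```javascript
// Minimal in-memory TTL cache with a read-through helper: repeated lookups
// for the same key within the TTL reuse the stored value instead of issuing
// another request. `now` is injectable so expiry can be tested.
function createTtlCache(ttlMs, now) {
  now = now || Date.now;
  var store = Object.create(null);
  return {
    get: function (key) {
      var hit = store[key];
      if (!hit) { return undefined; }
      if (now() >= hit.expiresAt) { delete store[key]; return undefined; }
      return hit.value;
    },
    put: function (key, value) {
      store[key] = { value: value, expiresAt: now() + ttlMs };
    },
    // Return the cached value, or call fetch(key), cache its result, and return it.
    getOrFetch: function (key, fetch) {
      var value = this.get(key);
      if (value === undefined) {
        value = fetch(key);
        this.put(key, value);
      }
      return value;
    }
  };
}

var clock = 0;
var calls = 0;
var cache = createTtlCache(1000, function () { return clock; });
// Hypothetical stand-in for a backend call.
function fetchMessage(id) { calls++; return "body-" + id; }

cache.getOrFetch(1234, fetchMessage); // fetched
cache.getOrFetch(1234, fetchMessage); // served from cache
clock = 1500;                         // past the TTL
cache.getOrFetch(1234, fetchMessage); // fetched again
console.log(calls); // 2
```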

Hi @Dino, I tried to find samples that explain how using nodejs helps in this case.

If we already have the above JSON before invoking the node.js proxy flow, we spin up a server in the node.js script. Are you saying we can call another flow or proxy from that server? How is that different? We cannot use the management APIs to populate the cache.

There are also mentions of Trireme for running node.js in Apigee Edge, but information about its usage is not clear either.

-------------------------------

I tried creating my first node.js proxy with a simple test that reads the request payload and returns it in the response. The console shows that the node.js server was started multiple times, even though I invoked the proxy just once. I have not added any loops or callbacks yet.

```text
*** Starting script
node.js application starting...
Node HTTP server is listening
*** Starting script
node.js application starting...
*** ReferenceError: "request" is not defined.
ReferenceError: "request" is not defined.
    at /organization/environment/api/hello-world.js:6
    at module.js:456
    at module.js:474
    at module.js:356
    at module.js:312
    at module.js:497
    at startup (trireme.js:142)
    at trireme.js:923
*** Starting script
node.js application starting..
```

(A similar trace was printed a few more times.)

Sonal, it seems `request` is not defined at line 6 of hello-world.js. Either the script refers to `request` without first importing it with `require('request')`, or the nodejs code is not structured so that it can be imported and deployed successfully. Often the latter means a dependency is missing from package.json.
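
For comparison, the echo test described above can be written without the `request` module at all. This is a minimal sketch using only Node's built-in `http` module, not code from this thread; the file name `hello-world.js` and the fallback port 5950 are borrowed from the thread for illustration, and error handling is omitted.

```javascript
// hello-world.js: echo the request payload back in the response.
// Uses only Node's built-in http module, so package.json needs no dependencies.
var http = require('http');

var server = http.createServer(function (req, res) {
  var chunks = [];
  // Collect the request body as it streams in.
  req.on('data', function (chunk) { chunks.push(chunk); });
  req.on('end', function () {
    var body = Buffer.concat(chunks);
    // Reflect the caller's content type, defaulting to plain text.
    res.writeHead(200, {
      'Content-Type': req.headers['content-type'] || 'text/plain'
    });
    res.end(body);
  });
});

var port = process.env.PORT || 5950;
server.listen(port, function () {
  console.log('Node HTTP server is listening');
});
```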

PS: If you want to use the `request` module, you will need to use an older version. I suggest:

`"request": "^2.67.0"`

---------

> Are you saying we can call another flow or proxy on that server?

No, I'm saying you could populate the cache from within the nodejs logic. It probably looks something like this:

```javascript
// sonal.js
var apigee = require('apigee-access');
var cache = apigee.getCache(undefined, {scope: 'application'}); // the default Apigee Edge cache ("un-named")
var requestjs = require('request');
var async = require('async');
var app = require('express')();
var bodyParser = require('body-parser');
var cacheItemTtl = 600; // seconds

// Hard-coded sample payload; in practice it would come from the request or a backend.
var data = {
  "msg" : [
    { "id" : 1234, "body" : "Msg3" },
    { "id" : 5678, "body" : "Msg2" },
    { "id" : 3345, "body" : "Msg4" }
  ]
};

// ================================================================
// Server interface

app.use(bodyParser.json());
app.use(bodyParser.urlencoded({ extended: true }));

// Returns an iterator for async.mapSeries: fetch one item,
// then store the response body in the cache under 'element-<id>'.
function getOne(options) {
  return function(item, cb) {
    // options is shared across calls; this is safe only because
    // mapSeries processes one item at a time.
    options.uri = "https://foo.bar.bam/" + item.id;
    requestjs(options, function(e, httpResp, body) {
      if (e) { return cb(e); }
      var cacheKey = 'element-' + item.id;
      cache.put(cacheKey, body, cacheItemTtl, function(e) {
        cb(e, "ok");
      });
    });
  };
}

app.get('/foo', function(request, response) {
  var options = {
    timeout : 66000, // in ms
    uri: "https://foo.bar.bam/", // will be replaced in the loop
    method: 'get',
    headers: {
      accept : 'application/json'
    }
  };

  async.mapSeries(data.msg, getOne(options), function(e, results) {
    if (e) {
      // Error objects serialize to {} with JSON.stringify, so send the text instead.
      response.header('Content-Type', 'application/json')
        .status(500)
        .send(JSON.stringify({ error: String(e) }, null, 2) + "\n");
      return;
    }
    response.header('Content-Type', 'application/json')
      .status(200)
      .send(JSON.stringify(results, null, 2) + "\n");
  });
});

// default behavior
app.all(/^\/.*/, function(request, response) {
  response.header('Content-Type', 'application/json')
    .status(404)
    .send('{ "message" : "This is not the server you\'re looking for." }\n');
});

var port = process.env.PORT || 5950;
app.listen(port, function() { });
```

The nodejs logic can use the request module (requestjs) to send requests, and can then store the responses, or anything else it likes, in the Apigee cache.
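
If the messages are already in hand, as in the original use case, the per-item HTTP request can be dropped and each message written straight to the cache. Below is a dependency-free sketch of the same one-at-a-time pattern that `async.mapSeries` provides, assuming the `put(key, value, ttl, callback)` shape used in the sample above. `cacheEachSeries` and `fakeCache` are hypothetical names, and `fakeCache` is only a local stand-in for the apigee-access cache.

```javascript
// Write each message into a cache one at a time, stopping at the first error.
// `cache` is any object with put(key, value, ttlSeconds, callback).
function cacheEachSeries(items, cache, ttlSeconds, done) {
  var i = 0;
  function next(err) {
    if (err) { return done(err); }
    if (i >= items.length) { return done(null, i); }
    var item = items[i++];
    cache.put('element-' + item.id, JSON.stringify(item), ttlSeconds, next);
  }
  next(null);
}

// Hypothetical in-memory stand-in for the Apigee cache, for local testing only.
var fakeCache = {
  data: {},
  put: function (key, value, ttl, cb) {
    this.data[key] = value;
    setImmediate(cb); // complete asynchronously, like a real cache call
  }
};

var messages = [
  { id: 1234, body: "Msg3" },
  { id: 5678, body: "Msg2" },
  { id: 3345, body: "Msg4" }
];
cacheEachSeries(messages, fakeCache, 600, function (err, count) {
  if (err) { console.error(err); return; }
  console.log('cached ' + count + ' messages'); // cached 3 messages
});
```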

Thanks for the sample @Dino. It definitely introduces new possibilities.

At this point, though, I am not even sure whether our existing architecture can accommodate node.js modules. From what I have read so far, deploying a node.js application requires configuring apigeetool. There is still a lot to explore.

But I am sure the sample will be useful to someone facing a similar challenge.

> From what I read till now, deployment of node.js application requires configuring apigeetool.

Not true. Using nodejs in an API proxy does not introduce any new requirements for import and deployment.

There is an administrative API for deploying API proxies into Apigee Edge, whether or not the proxy uses nodejs. You can call that admin API directly or through a wrapper tool; apigeetool is one such tool. Other wrappers include:

- pushapi - written in bash

- Powershell module - specifically the Import-EdgeApi cmdlet

- apigee-edge-js - specifically the importAndDeploy tool.

- and probably others
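
For reference, the two management calls such wrappers make can be sketched as URL builders. This assumes the public Apigee Edge (classic) management endpoint `https://api.enterprise.apigee.com/v1` and the import (`/apis?action=import&name=...`) and deploy (`/revisions/{rev}/deployments`) paths of the Edge management API; check them against the Edge API reference before relying on them. `importRequest` and `deployRequest` are hypothetical helpers, and an on-premises installation would use its own management server URL.

```javascript
// Build the Apigee Edge management API requests used to import and then
// deploy a proxy bundle. Endpoint and paths are assumptions (see lead-in).
var EDGE_MGMT = 'https://api.enterprise.apigee.com/v1';

function importRequest(org, proxyName) {
  // The zipped proxy bundle is typically sent as a multipart/form-data body.
  return {
    method: 'POST',
    url: EDGE_MGMT + '/organizations/' + encodeURIComponent(org) +
      '/apis?action=import&name=' + encodeURIComponent(proxyName)
  };
}

function deployRequest(org, env, proxyName, revision) {
  // Deploys an already-imported revision to one environment.
  return {
    method: 'POST',
    url: EDGE_MGMT + '/organizations/' + encodeURIComponent(org) +
      '/environments/' + encodeURIComponent(env) +
      '/apis/' + encodeURIComponent(proxyName) +
      '/revisions/' + revision + '/deployments'
  };
}

console.log(importRequest('myorg', 'sonal-cache').url);
// https://api.enterprise.apigee.com/v1/organizations/myorg/apis?action=import&name=sonal-cache
```

Whether the proxy bundle contains nodejs resources makes no difference to these two calls.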

Hello @DChiesa, with Node.js no longer supported by Apigee and being decommissioned, what is the recommended solution for caching multiple values in an API?

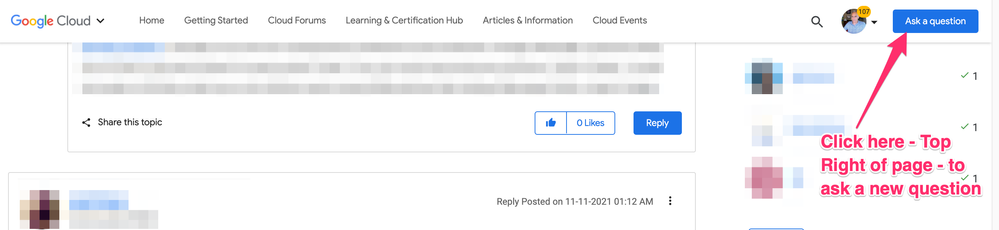

I appreciate your question and would like to answer it. Please ask your question in a new thread, rather than appending it as a comment to a 5-year-old thread.

When you DO ask the new question, please provide more context about what you mean by "cache multiple values". Apigee has a set of cache policies; in your question, explain why those are not suitable for your purposes.
