- running multiple edgemicro instances to point to d...

If you have multiple edgemicro instances (each running on a different server) fronted by an NGINX load balancer, the proxy endpoint to target endpoint mapping is the same for every edgemicro instance. How do you load balance the traffic across different instances of your API service?

For example, the HTTP request to Nginx is http://myNginx:8080/myservice. The request is distributed round-robin to two edgemicro instances: http://server1:8000/myservice and http://server2:8000/myservice.

Since the mapping from the proxy /myservice to the target URL is the same for these two edgemicro instances, the traffic ends up at the same service endpoint again (the same instance of my API service).

This is not what we want from a front-end load balancer. We need to direct traffic to different instances of my API service.
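To make the problem concrete, here is a minimal Python sketch of the routing described above. It only simulates the hops; the target URL `http://api-backend-1:8080` and the names `SHARED_PROXY_MAP`, `EDGEMICRO_INSTANCES`, and `route` are placeholders assumed for illustration, not anything edgemicro provides.

```python
from itertools import cycle

# Assumed placeholder: the single target that the shared proxy mapping points to.
SHARED_PROXY_MAP = {"/myservice": "http://api-backend-1:8080"}

# The two edgemicro instances behind Nginx, as in the example request above.
EDGEMICRO_INSTANCES = ["http://server1:8000", "http://server2:8000"]


def route(request_count: int) -> list[tuple[str, str]]:
    """Round-robin requests over the edgemicro instances and record the target each one reaches."""
    instances = cycle(EDGEMICRO_INSTANCES)
    hops = []
    for _ in range(request_count):
        instance = next(instances)
        # Every instance reads the same mapping, so the target never varies.
        target = SHARED_PROXY_MAP["/myservice"]
        hops.append((instance, target))
    return hops


hops = route(4)
for instance, target in hops:
    print(f"{instance}/myservice -> {target}")
```

In this model Nginx alternates between the two edgemicro instances, but every request still lands on the one backend named in the shared mapping.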

Thanks,

- Labels: API Runtime

Hi James,

I'm not entirely sure what is being asked. I'll try to re-state. Let me know if this is the question.

You have Nginx fronting multiple edgemicro instances. So the relationship is the following:

req --> myNginx:8080/myservice --> server1:8000/myservice or server2:8000/myservice

You have 1 nginx instance load balancing multiple instances of edgemicro.

The path used to load balance to these instances is /myservice, but this also corresponds to the path of a proxy on edgemicro, also called /myservice. This means that you can only call one of the proxies in edgemicro due to this pathing issue. Correct?

I'm not entirely sure how to structure edgemicro behind nginx like that. Here are a few options:

- If nginx is only fronting edgemicro instances, is it possible to just load balance on / and pass the whole path through?

- Is it possible to change the path you're matching on to a generic path that is stripped out by nginx? Say the client calls /myservices/myservice, and nginx removes /myservices before sending the request to edgemicro?

- Or is it possible to configure multiple paths in nginx to load balance to edgemicro? It's not ideal, but if /myservice matches the /myservice endpoint on edgemicro, then you should be able to match /myserviceTwo to /myserviceTwo on edgemicro as well.
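As a rough illustration of the second option, the path rewrite could behave like the Python sketch below. The function name `strip_prefix` is hypothetical, and the code only models the string handling; the actual rewrite would live in the nginx configuration.

```python
def strip_prefix(path: str, prefix: str = "/myservices") -> str:
    """Return the path edgemicro would see after the load balancer drops the generic prefix."""
    if path == prefix:
        # Nothing left after the prefix, so forward the root path.
        return "/"
    if path.startswith(prefix + "/"):
        return path[len(prefix):]
    # Not under the generic prefix: pass the path through unchanged.
    return path


print(strip_prefix("/myservices/myservice"))
print(strip_prefix("/myservices/myserviceTwo/items"))
```

Matching on `prefix + "/"` rather than on the bare prefix keeps a path such as /myservicesX from being rewritten by accident.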

-Matt

Thanks Matt. Sorry for the confusion. My question is this: if you have a base path to target endpoint mapping in edgemicro, for example /myservice ---> /mytargetServer1:8080, this mapping is shared by all edgemicro instances. I can't have another mapping /myservice ---> /mytargetServer2:8080.

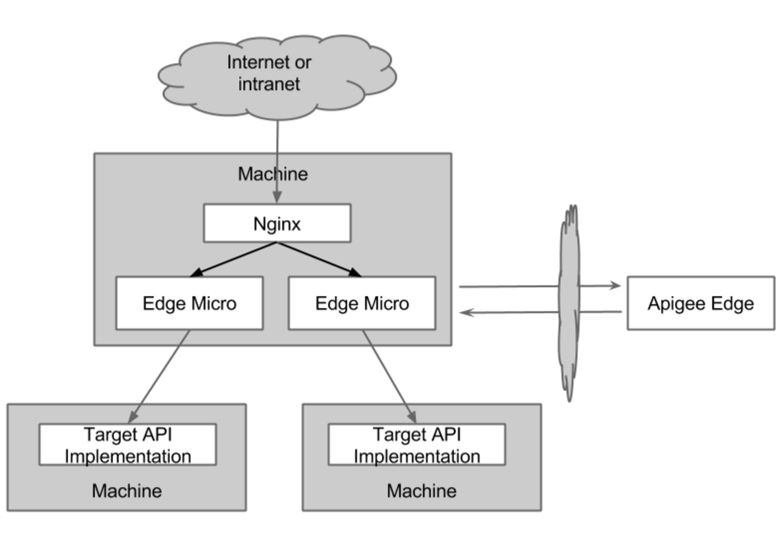

See the picture above from the Apigee docs. How do you have the first edgemicro instance point to the first Target API server and the second edgemicro instance point to the second Target API server if the base path is the same /myservice (which is the upstream path from Nginx)?

I could use option 3 you suggested above and configure multiple paths in the Nginx upstream to load balance to the edgemicro instances. But that is a workaround, not the normal configuration of a load balancer. I am not sure other types of load balancers (for example, a layer 4 load balancer) can configure multiple upstream paths like this.

Ah, I see. Going off that diagram, each instance of edgemicro would have a /myservice path, and that path would point to each target API implementation. Is that what you're going for?

-Matt

Yes, this is what I am looking for. I have two instances of backend micro services on two different servers. I need traffic to be load balanced to these two servers.

Gotcha. Can you point me to where you found that picture? I can't find the article for some reason.

-Matt

Matt,

The picture is in the overview section of the latest Microgateway docs:

http://docs.apigee.com/microgateway/latest/overview-edge-microgateway

There is also an article from Apigee on Nginx/Microgateway integration:

https://apigee.com/about/blog/developer/managing-apis-apigee-edge-microgateway-and-nginx

Apigee Microgateway really should provide some support for load balancing backend services, just as the Netflix Zuul gateway does.

Thanks!

Hey James,

So this isn't really something that is supported currently by EM. It's not currently possible to set a target endpoint based on path in a scalable way. Here are two options that may work though.

- Colocating edgemicro on each microservice box. You'd be able to simply have nginx load balance as normal, and edgemicro proxy to the services on the colocated box.

- Adding another instance of nginx between edgemicro and the microservice instances. You'd have proxies point at that nginx, and have nginx do the load balancing between microservices.
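A minimal Python sketch of why the first option (colocation) spreads the load: the mapping stays identical on every instance, but "localhost" resolves to whichever box handled the request. The port 8080 and the names `SHARED_TARGET`, `HOSTS`, `effective_target`, and `balance` are assumptions for illustration only.

```python
from itertools import cycle

# Assumed placeholder: one shared mapping target, identical on every edgemicro instance.
SHARED_TARGET = "http://localhost:8080"

# Each host runs both an edgemicro instance and a copy of the microservice.
HOSTS = ["server1", "server2"]


def effective_target(host: str) -> str:
    """Show which machine 'localhost' means when edgemicro runs on `host`."""
    return SHARED_TARGET.replace("localhost", host)


def balance(request_count: int) -> list[str]:
    """Round-robin over the hosts (the nginx role) and resolve the shared target on each."""
    hosts = cycle(HOSTS)
    return [effective_target(next(hosts)) for _ in range(request_count)]


for target in balance(4):
    print(target)
```

Here the front-end nginx is the only load balancer; the backend that serves a request is decided entirely by which edgemicro instance nginx picked.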

Would either of these work?

-Matt

Thanks Matt!

Regarding solution one, colocating edgemicro on each microservice box: it seems I need to build a proxy mapping on Edge from /myservice to http://localhost:port? This mapping is shared by every edgemicro instance and points to the local microservice port (and the port has to be the same on every box). This should work, but it is not flexible.

For solution 2, adding nginx between edgemicro and the microservice instances, the load balancer has to be colocated with each edgemicro instance too, and I need to build a proxy mapping on Edge from /myservice to http://localhost:port, where port is the nginx listening port.

I think the general issue here is that Edge does not have per-instance edgemicro configuration. The proxy endpoint to target endpoint mapping is shared globally by all edgemicro instances within an organization. This makes microservice load balancing within a data center more complicated and less flexible.
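Solution 2 can be modelled the same way. In the Python sketch below, the class `InnerBalancer` is a hypothetical stand-in for the second nginx; it is not an edgemicro or nginx API, and the backend addresses simply reuse the two target servers mentioned earlier in the thread.

```python
from itertools import cycle


class InnerBalancer:
    """Stand-in for the nginx placed between edgemicro and the microservice instances."""

    def __init__(self, backends: list[str]) -> None:
        self._backends = cycle(backends)

    def pick(self) -> str:
        """Return the next backend in round-robin order."""
        return next(self._backends)


# Every edgemicro instance maps /myservice to the balancer's local port;
# the balancer, not edgemicro, decides which microservice instance is used.
balancer = InnerBalancer(["http://mytargetServer1:8080", "http://mytargetServer2:8080"])
targets = [balancer.pick() for _ in range(4)]

for target in targets:
    print(target)
```

With a colocated setup there would be one such balancer next to each edgemicro instance, each holding the same list of backends.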

Let me know your thoughts on this. Thanks.

Hey James,

Sorry for being slow getting back to you. Yes, you'd need to map to http://localhost:<port>/myService.

We'll be evaluating how to do this better going forward in EM. Stay tuned. I'll update this thread in the future if we have something that makes this use case easier.

-Matt

Sounds good. Thanks!
