- What is exact function of zookeeper

Dear All,

From the Apigee documentation, I understand the function of ZooKeeper to be the following:

It contains configuration data about all the services of the zone and notifies the different servers of configuration changes.

Can anyone explain the detailed functions of ZooKeeper? For example, what kinds of write operations and read operations are performed? Which configurations are maintained, and what kinds of notifications does ZooKeeper send?

Dear @krishna sharma ,

Please find answers to your queries below. Thank you @sparamanandhan for providing more details on this.

- ZooKeeper is a centralized repository for configuration-related information that is consumed by different distributed applications.

I am sure that after reading the line above you will say, "I know, that's what the wiki says." So let's talk about why we need ZooKeeper.

- Let's say multiple software components run on different computers distributed across networks, but you would like to run them as a single system.

- The goal of distributed computing is to make such a network of systems run as a single computer.

- Why do we need distributed computing? Scalability: you can add more machines. Redundancy: if one machine goes down, another can take over so that work doesn't stop.

-- Distributed systems need coordination so that they can work together. Let's say you would like to change a configuration that needs to be picked up by several programs running on different machines across networks, i.e. sharing config information across all nodes.

-- Naming service: to find a single machine in a cluster of thousands of servers, ZooKeeper provides a naming service that lets you target that particular machine.

-- Distributed synchronization, such as locking and maintaining queues.

-- Group services, leader election & more.
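To make the config-sharing and notification point a bit more concrete, here is a minimal Python sketch. It is a toy in-memory model, not the ZooKeeper client API: the class name `ToyConfigStore` and the path `/config/timeout` are made up for illustration. The one thing it borrows from ZooKeeper is the idea of a watch as a one-time trigger: a reader registers interest in a path while reading it, and is told once when that path changes.

```python
from typing import Callable, Dict, List, Optional


class ToyConfigStore:
    """In-memory stand-in for a ZooKeeper-like path store (hypothetical, not the real API)."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}
        self._watchers: Dict[str, List[Callable[[str], None]]] = {}

    def get(self, path: str, watch: Optional[Callable[[str], None]] = None) -> bytes:
        """Read a value and optionally register a one-time watch on the path."""
        if watch is not None:
            self._watchers.setdefault(path, []).append(watch)
        return self._data[path]

    def set(self, path: str, value: bytes) -> None:
        """Write a value, then fire and clear every watch registered on the path."""
        self._data[path] = value
        for callback in self._watchers.pop(path, []):
            callback(path)


store = ToyConfigStore()
store.set("/config/timeout", b"30")

changed: List[str] = []
store.get("/config/timeout", watch=changed.append)  # read and leave a watch
store.set("/config/timeout", b"60")  # the watch fires once
store.set("/config/timeout", b"90")  # no watch is left, so nothing fires
```

After a notification, a reader that still cares about the path would read it again and leave a new watch, which is why the second `set` in the sketch notifies nobody.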

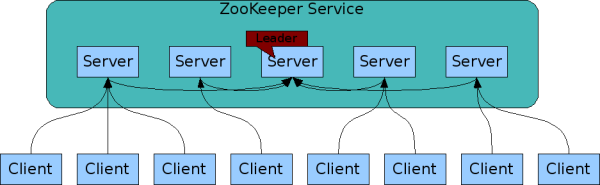

A client always connects to a single ZooKeeper server to get config information. If the connection breaks, it connects to another ZooKeeper server. Each ZooKeeper server knows about all the other servers.
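The failover behaviour described above can be sketched roughly like this. It is only an illustration: the function `connect_with_failover`, the `try_connect` callback, and the host names are hypothetical, and a real ZooKeeper client library handles reconnection for you.

```python
from typing import Callable, Sequence


def connect_with_failover(servers: Sequence[str], try_connect: Callable[[str], bool]) -> str:
    """Return the first server that accepts a connection, trying them in order."""
    for server in servers:
        if try_connect(server):
            return server
    raise ConnectionError("no ZooKeeper server reachable")


# Hypothetical three-node ensemble where the first server is down.
ensemble = ["zk1:2181", "zk2:2181", "zk3:2181"]
unreachable = {"zk1:2181"}
connected_to = connect_with_failover(ensemble, lambda s: s not in unreachable)
```

Here `connected_to` ends up as `"zk2:2181"`, because the client simply moves on to the next server in its list.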

Let's talk about how Apigee uses ZooKeeper.

As you know, Apigee has the concept of orgs. In the Apigee enterprise platform, each org needs multiple message processors, routers, Cassandra, Postgres, etc. so that the entire thing can work as a single system called Apigee Edge. As you can see, multiple software components like message processors, routers, and Cassandra run on different computers in different networks.

Let's say that in Apigee Edge cloud you would like to deploy an API proxy. When you make the Management API call, the call needs to know which message processors, Cassandra nodes, and routers are involved in deploying your proxy: details like IP addresses, endpoints, UUIDs, etc. This information is stored in ZooKeeper. The API call that deploys the proxy gets from a ZooKeeper server the list of message processors to which it needs to send the proxy details, and then processes the request further.
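As a rough picture of that lookup, here is a small Python sketch. Please treat everything in it as an assumption: the path layout, the org name `acme`, the IPs, and the UUIDs are invented for illustration and are not Apigee's actual ZooKeeper schema. It only shows the idea of listing the children of a path to find out which message processors a deployment should target.

```python
from typing import Dict, List

# Hypothetical wiring data keyed by path; NOT Apigee's real layout.
WIRING: Dict[str, Dict[str, str]] = {
    "/orgs/acme/message-processors/mp-1": {"ip": "10.0.0.11", "uuid": "uuid-mp-1"},
    "/orgs/acme/message-processors/mp-2": {"ip": "10.0.0.12", "uuid": "uuid-mp-2"},
    "/orgs/acme/routers/r-1": {"ip": "10.0.0.21", "uuid": "uuid-r-1"},
}


def children(tree: Dict[str, Dict[str, str]], parent: str) -> List[str]:
    """Return the paths that sit directly under `parent`, sorted by name."""
    prefix = parent.rstrip("/") + "/"
    return sorted(
        path for path in tree
        if path.startswith(prefix) and "/" not in path[len(prefix):]
    )


def deployment_targets(tree: Dict[str, Dict[str, str]], org: str) -> List[str]:
    """Look up the IPs of every message processor wired to the given org."""
    return [tree[path]["ip"] for path in children(tree, f"/orgs/{org}/message-processors")]


targets = deployment_targets(WIRING, "acme")
```

In this toy data, `targets` contains the two message processor IPs and leaves the router out, since the router lives under a different parent path.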

In ZooKeeper, reads can be served by any ZooKeeper server, but writes always go through the leader. Let's say a new message processor is added to one of the orgs to handle a spike in traffic: the MP information is written through the ZooKeeper leader. All the followers replicate the data & serve Management API calls. If the leader goes down, the remaining followers elect a new leader.

ZooKeeper is open source software & provides the above functionality out of the box, so there is no need to reinvent the wheel to manage distributed applications.

What kind of write operations are being performed and what kind of read operations are being performed?

- Org-specific wiring information, such as the MPs, routers, Cassandra, Postgres, and the other software components that are part of Apigee Edge, is written to ZooKeeper.

- Apigee Edge Management API calls read this wiring information to perform actions like deploying a proxy.
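One detail worth spelling out is that a write is only accepted while a majority (quorum) of the ensemble is up. The Python sketch below shows just that arithmetic plus a toy write path. The names `quorum_size`, `has_quorum`, and `ToyEnsemble` are hypothetical, and the sketch does not model leader election, ordering of updates, or reads.

```python
from typing import Dict, List


def quorum_size(ensemble_size: int) -> int:
    """Smallest number of servers that forms a strict majority."""
    return ensemble_size // 2 + 1


def has_quorum(ensemble_size: int, servers_up: int) -> bool:
    """True when enough servers are up to accept writes."""
    return servers_up >= quorum_size(ensemble_size)


class ToyEnsemble:
    """Toy model: a write lands on every live replica, but only if a majority is up."""

    def __init__(self, size: int) -> None:
        self.up: List[bool] = [True] * size
        self.replicas: List[Dict[str, str]] = [{} for _ in range(size)]

    def write(self, path: str, value: str) -> bool:
        live = [i for i, ok in enumerate(self.up) if ok]
        if not has_quorum(len(self.up), len(live)):
            return False  # no majority, so the write is rejected
        for i in live:
            self.replicas[i][path] = value
        return True


ensemble = ToyEnsemble(3)
first = ensemble.write("/orgs/acme/mp-3", "10.0.0.13")  # all 3 up: accepted
ensemble.up[1] = ensemble.up[2] = False  # two servers go down
second = ensemble.write("/orgs/acme/mp-4", "10.0.0.14")  # 1 of 3 up: rejected
```

With three servers the quorum is two, so the ensemble tolerates one failure; with five servers the quorum is three, so it tolerates two.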

Keep us posted if you have any further queries.

Thanks a lot, Anil. It was very informative. I was always curious to know how Apigee uses ZooKeeper, and I am sure other folks in the Apigee community will also find this very useful 🙂

Yep, that's true. I also learnt some new things. Great question & thank you for asking it.

Thanks Anil, I've asked a similar question about Cassandra. It would be great if you could share such amazing points again 🙂

@Anil Sagar gave a very good explanation. I was going through the cluster setup again and another question struck me:

Let's say 2 out of 3 ZooKeepers are down, so no new write operations will happen. Now let's say that we are not adding any new components for the time being; then write operations will not be required, right? If new writes are not required, then it's safe to assume that our cluster will work seamlessly until there is a need to add a new component or the working ZooKeeper also goes down.

Please let me know if my understanding is correct.
