Using Apigee to push real-time API insights to Ama...

We've recently started getting involved with feeding API traffic to Marketing "Big Data" systems.

As I see it, this comes down to the fact that we have tons of API traffic flowing through Apigee Edge that we'd like to siphon off to Amazon S3 buckets, so our data scientists can do whatever they do with Hive/Pig/Hadoop, etc.

The basic problem I've been looking to solve is how to POST JSON data that can be persisted on Amazon S3. At a glance this seems pretty straightforward, because S3 has a REST API, and we love those!
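A common way to make captured API traffic easy for Hive/Pig to pick up is to batch records as newline-delimited JSON (one object per line) and write each batch under directory-style `dt=`/`hour=` keys, which Hive can map to table partitions. The minimal sketch below only builds the request body and the object key; the actual PUT (through the S3 REST API or an AWS SDK) is not shown. The helper names, the key layout, and the record fields are all hypothetical.

```python
import json
from datetime import datetime, timezone

def to_ndjson(records):
    """Serialize dicts as newline-delimited JSON: one compact object per line."""
    return "".join(
        json.dumps(record, separators=(",", ":"), sort_keys=True) + "\n"
        for record in records
    )

def object_key(prefix, ts, batch_id):
    """Build a Hive-style, date/hour-partitioned S3 key for one batch of records."""
    if ts.tzinfo is None:
        raise ValueError("ts must be timezone-aware")
    ts = ts.astimezone(timezone.utc)
    return f"{prefix}/dt={ts:%Y-%m-%d}/hour={ts:%H}/batch-{batch_id:04d}.json"

# Example: two hypothetical request records captured by a proxy.
body = to_ndjson([
    {"path": "/v1/orders", "status": 200, "latency_ms": 42},
    {"path": "/v1/orders/7", "status": 404, "latency_ms": 9},
])
key = object_key("apigee-traffic", datetime(2015, 6, 1, 13, 5, tzinfo=timezone.utc), 1)
# key == "apigee-traffic/dt=2015-06-01/hour=13/batch-0001.json"
```

Batching many records per object, rather than one PUT per API call, also keeps the number of S3 requests down, which is part of the scalability concern raised next.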

However, I'm concerned about the scalability of such an approach. This has had me googling around for other options, and I came across Amazon Kinesis.

From the product documentation: "Amazon Kinesis is a fully managed service for real-time processing of streaming data at massive scale. Amazon Kinesis can continuously capture and store terabytes of data per hour from hundreds of thousands of sources."
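Part of how Kinesis scales is that a stream is split into shards, and each record's partition key decides which shard it lands on. As we understand the Kinesis Data Streams documentation, the partition key is hashed with MD5 to a 128-bit integer, and each shard owns a contiguous range of that hash space. The sketch below models that mapping without making any AWS calls, assuming a stream whose shards split the hash space evenly (for example, a newly created stream that has not been resharded). `hash_key`, `shard_index`, and the `"app-1234"` key are hypothetical.

```python
import hashlib
from bisect import bisect_right

HASH_SPACE = 2 ** 128  # partition keys hash to 128-bit unsigned integers

def hash_key(partition_key):
    """MD5 of the UTF-8 encoded partition key, read as a big-endian 128-bit integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")

def shard_index(hash_value, shard_count):
    """Index of the shard whose hash range contains hash_value.

    Assumes shard_count shards splitting the hash space evenly (no resharding);
    for a real stream, read the ranges from its shard listing instead.
    """
    if shard_count < 1:
        raise ValueError("shard_count must be >= 1")
    if not 0 <= hash_value < HASH_SPACE:
        raise ValueError("hash_value out of range")
    starts = [(i * HASH_SPACE) // shard_count for i in range(shard_count)]
    return bisect_right(starts, hash_value) - 1

# Records sharing a partition key (e.g. one developer app) always map to one shard.
shard = shard_index(hash_key("app-1234"), 4)
```

The design consequence: records with the same key stay ordered on one shard, while throughput grows by adding shards, provided the keys are spread widely enough to use them all.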

Yup, that sounds like what I'm looking for. I need to feed information about requests that are happening in Apigee Edge to be stored on S3.

Does anyone have experience wiring Amazon S3 buckets into Apigee? Does Kinesis offer any benefits I should consider? There appears to be a Node.js "client" (https://github.com/awslabs/amazon-kinesis-client-nodejs), but is this a feasible use case for Edge?

Thanks for your insights!!

Our final approach was to dissect the https://www.npmjs.com/package/volos-s3 implementation and roll our own Node.js API proxy that used the AWS SDK for Node.js, along with an associated Node.js client.

We then developed a command-line Node.js client to support the more advanced requirements of app developers migrating from FTP to S3.

Hi @Kristopher Kleva, it's great to hear from you! That sounds really cool! I may have a hack for you 🙂, inspired by the "Fun Integrations for Fun and Profit" session from @Marsh Gardiner and @Carlos Eberhardt at #ILOVEAPIs 2015.

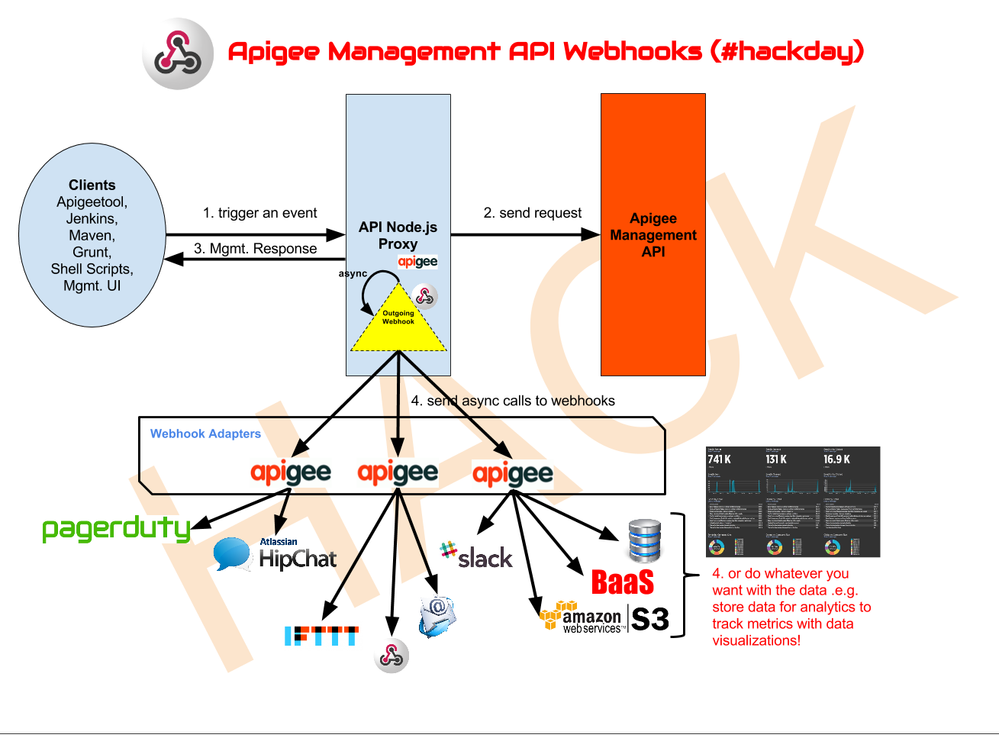

@Alex Koo, @Maruti Chand, @Vinit Mehta, and I started experimenting with API augmentation capabilities by placing proxies in front of existing APIs during our #HackDay at Apigee. The concept follows EDA (event-driven architecture) with the proxy/facade pattern, plus a few tweaks using the adapter pattern. The result of this experiment is Apigee Webhooks: a tiny Node.js app and adapters that also implement the pub/sub pattern by enabling a proxy in front of any API. For now it works end-to-end with the Management API, but it can easily be extended to any other API. With enough curiosity, I believe you could use it with Amazon Kinesis or S3 without impacting latency on your APIs. The secret sauce is that it leverages the streaming and async capabilities of Node.js. Check it out here!

I'll post an article about it soon. In the meantime, to give you more insight into it, check out the following image:

@Sanjeev, here's a use case for it.
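The Apigee Webhooks code itself isn't included in this thread, so what follows is only a hypothetical illustration of the underlying idea: a facade answers the client as soon as the backend responds and hands a copy of the event to subscribers asynchronously, so a slow sink (Kinesis, S3) adds no latency to the API call. It uses Python's asyncio event loop as a stand-in for Node.js's; `Publisher`, `proxy_handler`, and `demo` are made-up names.

```python
import asyncio

class Publisher:
    """Tiny in-process pub/sub: subscribers run as background tasks."""

    def __init__(self):
        self._subscribers = []
        self._pending = set()

    def subscribe(self, handler):
        """Register an async callable that receives each published event."""
        self._subscribers.append(handler)

    def publish(self, event):
        """Schedule delivery to every subscriber without waiting for it."""
        for handler in self._subscribers:
            task = asyncio.create_task(handler(event))
            self._pending.add(task)  # hold a reference until the task finishes
            task.add_done_callback(self._pending.discard)

    async def drain(self):
        """Wait for in-flight deliveries, e.g. before shutting down."""
        if self._pending:
            await asyncio.gather(*self._pending, return_exceptions=True)

async def proxy_handler(request, backend, publisher):
    """Facade in front of an existing API: forward, emit an event, return immediately."""
    response = await backend(request)
    publisher.publish({"path": request["path"], "status": response["status"]})
    return response

async def demo():
    delivered = []

    async def slow_sink(event):
        await asyncio.sleep(0.05)  # stands in for a slow Kinesis or S3 write
        delivered.append(event)

    async def backend(request):
        return {"status": 200, "body": "ok"}

    publisher = Publisher()
    publisher.subscribe(slow_sink)
    response = await proxy_handler({"path": "/orders"}, backend, publisher)
    seen_before_drain = len(delivered)  # the response came back before the sink ran
    await publisher.drain()
    return response["status"], seen_before_drain, delivered

# asyncio.run(demo()) returns (200, 0, [{"path": "/orders", "status": 200}])
```

A production version would also need a bound on pending deliveries and retry handling for failed writes; this sketch only shows why the publish step stays off the request path.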

@Kristopher Kleva, when you say "I need to feed information about requests that are happening in Apigee Edge to be stored on S3", do you mean more information than what Analytics currently stores?

@Kristopher Kleva, it seems like what you want is to periodically dump Analytics data into S3.

That seems like a reasonable product capability request. Let us think about that for a bit.

Moving data from the Apigee Edge message processors into Analytics, then extracting it and posting it to S3, may work.

I'll attempt something along those lines and get back to you.
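If the Analytics route works out, a periodic export is easiest to reason about as a series of fixed, half-open time windows: each [start, end) window is extracted once and written to S3 as one object. As long as each extraction filters on start <= timestamp < end, a record at a window boundary is neither exported twice nor skipped. This is a hypothetical scheduling sketch only; `export_windows` is a made-up helper, and the extraction step itself (however it is done against Analytics) isn't shown.

```python
from datetime import datetime, timedelta, timezone

def export_windows(start, end, step=timedelta(hours=1)):
    """Yield consecutive half-open [window_start, window_end) pairs covering [start, end)."""
    if step <= timedelta(0):
        raise ValueError("step must be positive")
    cursor = start
    while cursor < end:
        window_end = min(cursor + step, end)  # the last window may be shorter
        yield cursor, window_end
        cursor = window_end

# Example: a 2.5-hour backlog becomes three hourly export jobs (the last one 30 minutes).
start = datetime(2015, 6, 1, 0, 0, tzinfo=timezone.utc)
jobs = list(export_windows(start, start + timedelta(hours=2, minutes=30)))
```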

@Dino and @Diego Zuluaga, I've put together a good demo for our team based on one of the Volos connectors for S3. However, a blocker appears to be the payload size that can be PUT through Apigee to S3: the documentation suggests a maximum request payload of 10 MB. This will likely not work for all of our consumers, because some will need to PUT files of 20-60 GB.

I'm going to look into updating the volos-s3 sample to use multipart uploads to S3 (http://docs.aws.amazon.com/AmazonS3/latest/dev/mpuoverview.html). If this works out and we can chunk these 20 GB files into 10 MB parts, I may be close to a good solution.
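The arithmetic behind the multipart idea can be checked before touching S3. An S3 multipart upload allows at most 10,000 parts, and every part except the last must be between 5 MiB and 5 GiB. The hypothetical `plan_parts` helper below splits an object into (part number, byte offset, length) ranges and rejects plans that break those limits; it is a sizing sketch, not part of volos-s3 or the AWS SDK.

```python
MiB = 1024 ** 2
GiB = 1024 ** 3
MIN_PART = 5 * MiB   # S3 minimum size for every part except the last
MAX_PART = 5 * GiB   # S3 maximum part size
MAX_PARTS = 10_000   # S3 maximum number of parts in one upload

def plan_parts(object_size, part_size):
    """Return (part_number, offset, length) tuples covering object_size bytes."""
    if not MIN_PART <= part_size <= MAX_PART:
        raise ValueError("part_size must be between 5 MiB and 5 GiB")
    count = max(1, -(-object_size // part_size))  # ceiling division
    if count > MAX_PARTS:
        raise ValueError(f"{count} parts exceeds the {MAX_PARTS}-part limit; use larger parts")
    return [
        (n + 1, n * part_size, min(part_size, object_size - n * part_size))
        for n in range(count)
    ]

# A 60 GiB file in 10 MiB parts needs 60 * 1024 / 10 = 6,144 parts.
parts = plan_parts(60 * GiB, 10 * MiB)
```

One caveat worth checking: 10 MiB (10,485,760 bytes) is slightly more than a decimal 10 MB, so if the proxy's cap is decimal, a smaller part such as 8 MiB leaves headroom. At 8 MiB a 60 GiB file needs 7,680 parts, and the 10,000-part limit allows objects up to 80,000 MiB (about 78 GiB).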

It certainly sounds like a compelling use case for streaming with multipart data. I've seen this implemented as a firehose with Twitter APIs.

More on Multipart uploads to S3 https://aws.amazon.com/blogs/aws/amazon-s3-multipart-upload/. Do keep us posted.
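For a streaming, firehose-style upload, the proxy never needs the whole file in memory: it can read one part at a time from the incoming stream and hand each part to an upload call. A minimal sketch, assuming any blocking file-like object with a `read(n)` method. Because network streams may return fewer bytes than requested, `read_full` keeps reading until the part is full or the stream ends; an undersized non-final part would otherwise make the upload fail against S3's 5 MiB minimum. `read_full` and `iter_parts` are hypothetical names.

```python
def read_full(stream, size):
    """Read up to `size` bytes, retrying short reads; returns fewer only at end of stream."""
    buf = bytearray()
    while len(buf) < size:
        piece = stream.read(size - len(buf))
        if not piece:  # b"" means end of stream
            break
        buf.extend(piece)
    return bytes(buf)

def iter_parts(stream, part_size):
    """Yield (part_number, data) pairs, numbered from 1 as S3 part numbers are."""
    part_number = 1
    while True:
        data = read_full(stream, part_size)
        if not data:
            return
        yield part_number, data
        part_number += 1
```

Each `data` chunk would then go to one UploadPart request, keeping the returned ETag for the final CompleteMultipartUpload request; that is where the AWS SDK comes in.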
