Choosing between Google Kubernetes Engine (GKE) multi-cluster solutions in Google Cloud

In this article, I'll be walking you through different Google Kubernetes Engine (GKE) multi-cluster solutions and providing recommendations on which one to choose depending on the situation, including the advantages and disadvantages associated with each one of them.

This article will focus on these solutions from a GKE perspective, but the same can be extended to other hosting platforms, like managed instance groups, Cloud Run, etc.

- Multi-cluster solutions offered for GKE in Google Cloud

- 1. Standalone network endpoint groups (NEGs)

- 2. Multi Cluster Ingress

- 3. Multi Cluster Gateway

- 4. Anthos Service Mesh

- Feature comparison of solutions

- Conclusion

There are multiple GKE multi-cluster solutions we can use when we need to distribute traffic across multiple clusters and apply different load balancing policies. Which one fits depends on the use case, because each one distributes traffic in a different way.

Multi-cluster solutions offered for GKE in Google Cloud

- Standalone network endpoint groups (NEGs)

- Multi Cluster Ingress (MCI)

- Multi Cluster Gateway (MCG)

- Anthos Service Mesh (ASM)

Let's go step by step and understand each of these approaches.

1. Standalone network endpoint groups (NEGs)

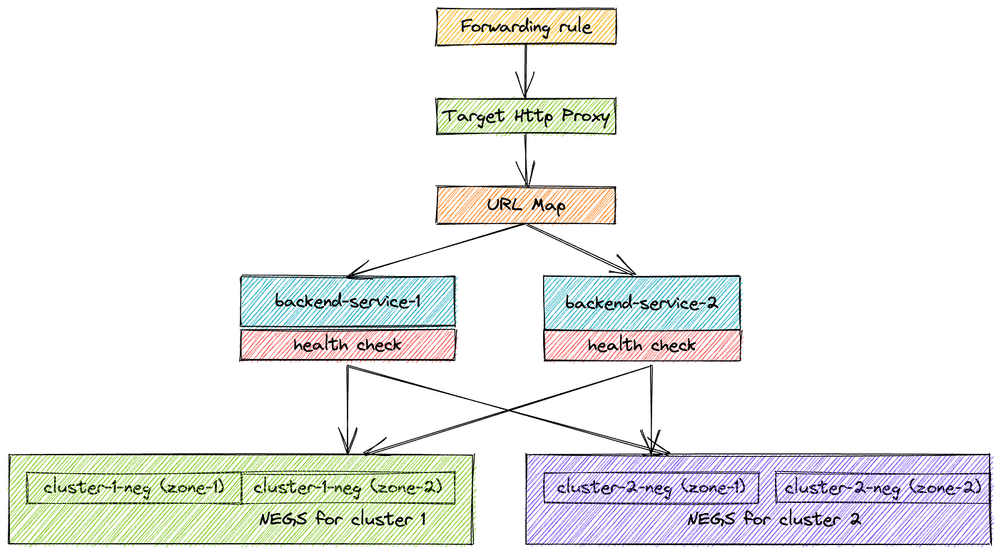

With this strategy, we distribute traffic between two clusters by creating NEGs in each cluster and connecting them to a load balancer that we assemble ourselves: a backend service, health check, URL map, target HTTP proxy, and forwarding rule.

Explanation of key terms

- Forwarding Rule: Each rule is associated with a specific IP address and port. Since we're talking about global HTTP load balancing, this will be an anycast global IP address (optionally a reserved static IP). The port is the one on which the load balancer accepts traffic from external clients: 80 or 8080 if the target is an HTTP proxy, or 443 for an HTTPS proxy.

- Target HTTP(S) Proxy: Client connections are terminated at the Target Proxy, based on its configuration. Each Target Proxy references exactly one URL Map, while several proxies can share the same URL Map (an N:1 relationship). For an HTTPS proxy, you also have to attach at least one SSL certificate, and you can optionally configure an SSL policy.

- URL Map: The core traffic management component, which routes incoming traffic to different Backend Services (or to backend buckets for Cloud Storage content). Basic routing is host- and path-based, but more advanced traffic management is possible as well: URL redirects, URL rewrites, and header- and query parameter-based routing. Each rule directs traffic to one Backend Service.

- Backend Service : Logical grouping of backends for the same service and relevant configuration options, such as traffic distribution between individual Backends, protocols, session affinity or features like Cloud CDN, Cloud Armor or IAP. Each Backend Service is also associated with a Health Check.

- Health Check: Determines how individual backend endpoints are probed to check that they are alive; the results are used to compute the overall health state of each Backend. Protocol and port have to be specified when creating one, along with optional parameters such as check interval, timeout, and healthy and unhealthy thresholds. An important point: you must create firewall rules that allow health-check traffic from Google's health-check probe ranges (35.191.0.0/16 and 130.211.0.0/22) to reach your Pods.

- Backend: Represents a group of individual endpoints in a given location. In the case of GKE, our backends will be Network Endpoint Groups (NEGs), one per zone of our GKE cluster (GKE NEGs are zonal, but some other backend types are regional).

- Backend Endpoint: A combination of IP address and port. With GKE container-native load balancing, each endpoint is a Pod IP address and port.
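To make the relationships between these resources concrete, here is a minimal, purely conceptual Python model of the request path: forwarding rule, target proxy, URL map, backend service, and finally a NEG endpoint. The class names, the simple prefix-based path matching, and the round-robin choice among healthy endpoints are simplifying assumptions for illustration; this does not call the Compute Engine API, and real load balancers use their own balancing algorithms.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Conceptual model only: these classes mirror the Cloud Load Balancing
# resource chain; they do not call any Google Cloud API.
Endpoint = Tuple[str, int]  # (Pod IP, container port) with container-native LB


@dataclass
class NetworkEndpointGroup:
    name: str
    zone: str  # GKE NEGs are zonal
    endpoints: List[Endpoint]


@dataclass
class BackendService:
    name: str
    backends: List[NetworkEndpointGroup]
    # Latest health-check verdict per endpoint; endpoints not listed count as healthy.
    health: Dict[Endpoint, bool] = field(default_factory=dict)

    def healthy_endpoints(self) -> List[Endpoint]:
        return [ep for neg in self.backends for ep in neg.endpoints
                if self.health.get(ep, True)]


@dataclass
class UrlMap:
    default_service: BackendService
    # host -> ordered list of (path prefix, backend service); simplified matching
    host_rules: Dict[str, List[Tuple[str, BackendService]]] = field(default_factory=dict)

    def resolve(self, host: str, path: str) -> BackendService:
        for prefix, service in self.host_rules.get(host, []):
            if path.startswith(prefix):
                return service
        return self.default_service


@dataclass
class TargetHttpProxy:
    url_map: UrlMap  # each target proxy references exactly one URL map


@dataclass
class ForwardingRule:
    ip: str
    port: int
    target: TargetHttpProxy


class LoadBalancer:
    """Walks a request through the chain and round-robins over healthy endpoints."""

    def __init__(self, rule: ForwardingRule):
        self.rule = rule
        self._cursors: Dict[str, int] = {}  # per-backend-service round-robin position

    def route(self, port: int, host: str, path: str) -> Optional[Endpoint]:
        if port != self.rule.port:
            return None  # nothing listens on this port
        service = self.rule.target.url_map.resolve(host, path)
        healthy = service.healthy_endpoints()
        if not healthy:
            return None  # no healthy endpoint in this simplified model
        i = self._cursors.get(service.name, 0)
        self._cursors[service.name] = i + 1
        return healthy[i % len(healthy)]
```

For example, with one NEG from a US cluster and one from an EU cluster behind the same backend service, marking the US endpoint unhealthy makes every request land on the EU endpoint:

```python
neg_us = NetworkEndpointGroup("neg-us", "us-central1-a", [("10.0.0.5", 8080)])
neg_eu = NetworkEndpointGroup("neg-eu", "europe-west1-b", [("10.4.0.7", 8080)])
web = BackendService("web-bs", [neg_us, neg_eu])
lb = LoadBalancer(ForwardingRule("203.0.113.10", 80, TargetHttpProxy(UrlMap(web))))
print(lb.route(80, "example.com", "/"))  # ('10.0.0.5', 8080)
web.health[("10.0.0.5", 8080)] = False
print(lb.route(80, "example.com", "/"))  # ('10.4.0.7', 8080)
```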

Steps to set up and deploy standalone NEGs

See this article for steps to set up and deploy standalone NEGs.
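As a rough illustration of the Kubernetes side of that setup, the Python sketch below builds a Service manifest carrying GKE's `cloud.google.com/neg` annotation (which asks GKE to create standalone NEGs for the exposed port), and then turns the `cloud.google.com/neg-status` annotation that GKE writes back onto the Service into one `gcloud compute backend-services add-backend` command per zone. The Service name, NEG name, ports, backend service name, and rate limit are placeholders; check the annotation format and flags against the GKE documentation for your version.

```python
import json
from typing import Dict, List


def standalone_neg_service(name: str, app: str, port: int, target_port: int,
                           neg_name: str) -> Dict:
    """Service manifest asking GKE to create standalone NEGs named `neg_name`.

    NEG names must be unique per zone, so if two clusters share a zone in the
    same project, give each cluster's NEG a distinct name.
    """
    neg_config = {"exposed_ports": {str(port): {"name": neg_name}}}
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            # The annotation value is itself a JSON string.
            "annotations": {"cloud.google.com/neg": json.dumps(neg_config)},
        },
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": app},
            "ports": [{"port": port, "protocol": "TCP", "targetPort": target_port}],
        },
    }


def add_backend_commands(neg_status: str, port: int, backend_service: str,
                         max_rate_per_endpoint: int = 100) -> List[str]:
    """One add-backend command per zone listed in the neg-status annotation."""
    status = json.loads(neg_status)
    neg = status["network_endpoint_groups"][str(port)]
    return [
        f"gcloud compute backend-services add-backend {backend_service} --global "
        f"--network-endpoint-group={neg} --network-endpoint-group-zone={zone} "
        f"--balancing-mode=RATE --max-rate-per-endpoint={max_rate_per_endpoint}"
        for zone in status["zones"]
    ]


if __name__ == "__main__":
    # kubectl accepts JSON manifests, so this output can be piped to `kubectl apply -f -`.
    print(json.dumps(standalone_neg_service("web", "web", 80, 8080, "web-neg"), indent=2))
```

In practice you would apply the manifest in each cluster, wait for GKE to add the `cloud.google.com/neg-status` annotation to the Service, and pass its value to `add_backend_commands` to attach every zonal NEG from every cluster to the same global backend service.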

Limitations of standalone NEGs to consider

- Limited protocol support: With the HTTP(S) load balancer described above, this setup handles HTTP(S) and HTTP/2 traffic. Workloads that need other protocols, such as UDP, require a different load balancer design.

- No built-in global orchestration: Standalone NEGs are zonal resources. They only take part in global load balancing because you manually attach them to a global backend service and build the URL map, target proxy, and forwarding rule yourself; nothing keeps that configuration in sync across clusters for you.

- No managed instance groups (MIGs): The backends are Pod endpoints rather than VM instance groups, so scaling is handled by GKE (Pod and cluster autoscaling) instead of MIG autoscaling. This can complicate designs that mix VM-based and GKE-based backends.

- No load balancer auto-healing: The load balancer stops sending traffic to endpoints that fail health checks, but it does not replace or repair them. Restarting or rescheduling failed Pods is left to Kubernetes (for example, liveness probes and Deployment controllers).

- Manual health checks: You create and tune the health check yourself; unlike NEGs managed by GKE Ingress, nothing derives its settings from your Pods' readiness probes.

- SSL/TLS is your responsibility: NEGs themselves do not terminate SSL/TLS. Termination happens at the target HTTPS proxy, so you must provision and rotate certificates and SSL policies on the load balancer yourself.

- Individual endpoints only: Backend endpoints are individual IP address and port pairs (Pod IPs in GKE); you cannot register an IP address range as an endpoint.

- Tied to Google Kubernetes Engine (GKE): Standalone NEGs are created through a GKE Service annotation, so this exact approach does not carry over to other platforms or cloud providers.

2. Multi Cluster Ingress

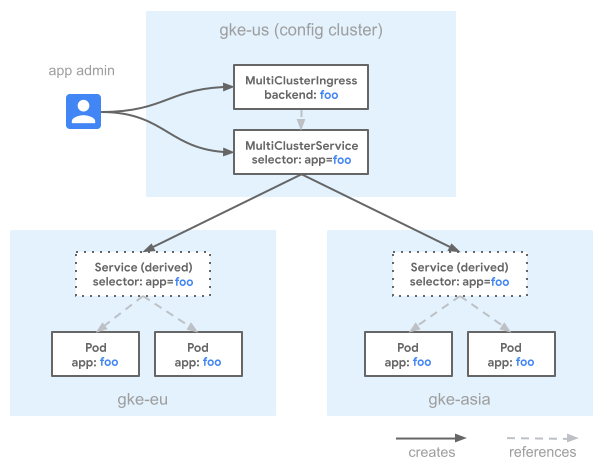

Multi Cluster Ingress (MCI) is a cloud-hosted controller for GKE that programs a single Google Cloud external HTTP(S) load balancer to route incoming traffic to multiple GKE clusters deployed across different regions. It is a key building block for high availability, disaster recovery, and load balancing strategies.

With multi-cluster ingress, you have the following capabilities:

- Global load balancing: Utilize a global load balancer to distribute traffic across clusters in different geographic locations, ensuring optimal user experience and fault tolerance.

- Failover and Redundancy: Implement failover mechanisms, where traffic automatically reroutes to healthy clusters if one becomes unavailable, enhancing application reliability.

- Traffic Steering: Control which clusters and Services receive traffic by routing on host and path and by selecting the clusters behind each MultiClusterService, for example to route a dedicated hostname or path to clusters running a new version of your application.

- Security: Enforce security policies by attaching Google Cloud Armor (Google Cloud's web application firewall (WAF) and DDoS protection service) to the load balancer, protecting against DDoS attacks and unauthorized access.

- Centralized Management: Simplify ingress configuration and management with a centralized control plane, making it easier to scale and maintain applications across clusters.

Overall, Multi Cluster Ingress streamlines the deployment and management of applications across distributed GKE clusters, improving performance, resilience, and security while reducing operational complexity.
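The health-based failover behavior described above can be approximated with a small Python simulation: each cluster's health state flips only after a configured number of consecutive probe successes or failures (mirroring the healthy and unhealthy thresholds of a health check), and each request goes to the lowest-latency healthy cluster. This is a simplified model; the cluster names and latency figures are assumptions, and Google's actual routing also takes backend capacity into account.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class HealthState:
    healthy_threshold: int = 2    # consecutive successes needed to become healthy
    unhealthy_threshold: int = 3  # consecutive failures needed to become unhealthy
    healthy: bool = True
    _streak: int = 0              # current run of results that disagree with `healthy`

    def record(self, probe_ok: bool) -> bool:
        """Feed one probe result and return the (possibly updated) health state."""
        if probe_ok == self.healthy:
            self._streak = 0  # result agrees with the current state; reset the run
        else:
            self._streak += 1
            limit = self.healthy_threshold if probe_ok else self.unhealthy_threshold
            if self._streak >= limit:
                self.healthy = probe_ok
                self._streak = 0
        return self.healthy


def pick_cluster(client_region: str,
                 latency_ms: Dict[str, Dict[str, int]],
                 health: Dict[str, HealthState]) -> Optional[str]:
    """Lowest-latency healthy cluster for this client, or None if all are down."""
    candidates = [c for c, state in health.items() if state.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda c: latency_ms[client_region][c])


if __name__ == "__main__":
    health = {"gke-us": HealthState(), "gke-eu": HealthState()}
    latency = {"europe-west1": {"gke-us": 95, "gke-eu": 8}}
    print(pick_cluster("europe-west1", latency, health))  # gke-eu
    for _ in range(3):
        health["gke-eu"].record(False)  # three failures trip the unhealthy threshold
    print(pick_cluster("europe-west1", latency, health))  # gke-us
```

The thresholds are what keep a single dropped probe from triggering a failover; the trade-off is that a real outage is only detected after several probe intervals.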

Steps to set up and deploy Multi Cluster Ingress

See this article for steps to set up and deploy multi-cluster ingress.
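For orientation, the Python sketch below generates the two custom resources MCI is built around: a `MultiClusterService`, which selects matching Pods in every member cluster (or only in the clusters listed under `clusters`), and a `MultiClusterIngress` whose default backend points at it. Both are applied in the fleet's config cluster. The names, namespace, port, and cluster link format shown are placeholders; verify the field names against the MCI documentation for your GKE version.

```python
import json
from typing import Dict, List, Optional


def multi_cluster_service(name: str, namespace: str, app: str, port: int,
                          cluster_links: Optional[List[str]] = None) -> Dict:
    spec: Dict = {
        "template": {
            "spec": {
                "selector": {"app": app},
                "ports": [{"name": "web", "protocol": "TCP",
                           "port": port, "targetPort": port}],
            }
        }
    }
    if cluster_links:
        # Optional: restrict backends to specific clusters, e.g. "us-central1/gke-us".
        spec["clusters"] = [{"link": link} for link in cluster_links]
    return {"apiVersion": "networking.gke.io/v1", "kind": "MultiClusterService",
            "metadata": {"name": name, "namespace": namespace}, "spec": spec}


def multi_cluster_ingress(name: str, namespace: str, mcs_name: str, port: int) -> Dict:
    return {
        "apiVersion": "networking.gke.io/v1",
        "kind": "MultiClusterIngress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"template": {"spec": {
            # Ingress-style default backend that references the MultiClusterService.
            "backend": {"serviceName": mcs_name, "servicePort": port},
        }}},
    }


if __name__ == "__main__":
    manifests = [multi_cluster_service("web-mcs", "web", "web", 8080),
                 multi_cluster_ingress("web-ingress", "web", "web-mcs", 8080)]
    # A "List" object lets both resources go through a single `kubectl apply -f -`.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```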

Limitations of Multi Cluster Ingress to consider

- Complex configuration: Setting up and managing multi-cluster ingress can be complex, especially when dealing with multiple Kubernetes clusters across different regions or environments. Configuring routing rules and policies across clusters can become intricate.

- Limited protocol support: MCI handles HTTP(S) traffic only; TCP or UDP services need a different load balancing approach.

- Cross-region latency: Users are normally served by the closest healthy cluster, but during a failover their requests may be served from a distant region, which adds latency for latency-sensitive workloads.

- External load balancing only: MCI always programs a global external HTTP(S) load balancer; it cannot provision internal or regional load balancers (multi-cluster Gateways can).

- Data transfer costs: Transferring data between clusters in different regions or zones may incur additional data transfer costs, which can affect your overall operational expenses.

- Security configuration: Ensuring consistent security policies and access control across multiple clusters can be challenging. You need to carefully manage identity and security settings.

- TLS setup: HTTPS requires supplying certificates to the load balancer (for example, pre-shared certificates or Kubernetes Secrets in the config cluster) and keeping them current, which adds setup work.

- Health check configuration: Custom health checks are defined through additional resources (BackendConfig), and if the defaults don't match how your application reports readiness, failover decisions can be affected.

- GKE only: MCI works only with GKE clusters registered to a fleet, so it does not extend to other cloud providers or on-premises environments.

- Continuous updates: Google Cloud services and features continually evolve, so it’s important to stay up-to-date with the latest developments and best practices for multi-cluster ingress to address any limitations or challenges.

3. Multi Cluster Gateway

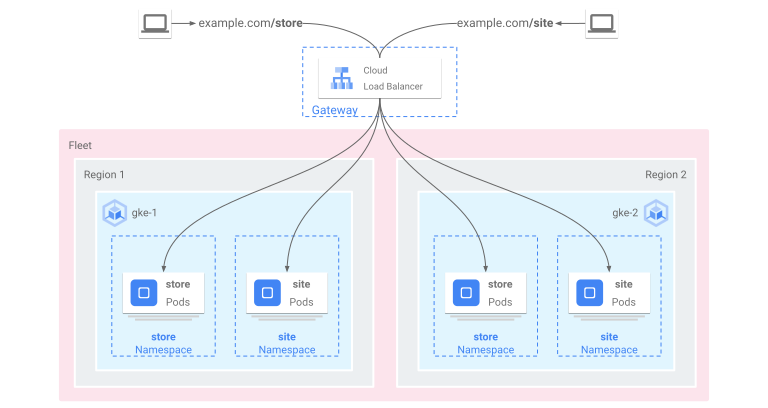

A multi-cluster Gateway is a Gateway resource that load balances traffic across multiple Kubernetes clusters. In GKE, the gke-l7-global-external-managed-mc, gke-l7-regional-external-managed-mc, gke-l7-rilb-mc, and gke-l7-gxlb-mc GatewayClasses deploy multi-cluster Gateways that provide HTTP routing, traffic splitting, traffic mirroring, health-based failover, and more across GKE clusters, Kubernetes Namespaces, and regions.

Multi-cluster Gateways make managing application networking across many clusters and teams easy, secure, and scalable for infrastructure administrators.

Steps to set up and deploy Multi Cluster Gateway

See this article for steps to set up and deploy multi-cluster Gateway.
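A rough Python sketch of the resources involved: each cluster exports its Service with a `ServiceExport`, and the config cluster holds a `Gateway` (using one of the multi-cluster GatewayClasses listed above) plus an `HTTPRoute` that splits traffic by weight across the resulting `ServiceImport`s. The names, namespace, hostname, and 90/10 split are placeholders, the scope/exposure keys are our own labels, and the `v1beta1` Gateway API version is an assumption; use whichever version your clusters serve.

```python
import json
from typing import Dict, List, Tuple

GATEWAY_API = "gateway.networking.k8s.io/v1beta1"  # assumption: adjust to your cluster

# Multi-cluster GatewayClasses, keyed by our own (scope, exposure) labels.
GATEWAY_CLASSES = {
    ("global", "external"): "gke-l7-global-external-managed-mc",
    ("regional", "external"): "gke-l7-regional-external-managed-mc",
    ("regional", "internal"): "gke-l7-rilb-mc",
    ("global", "external-classic"): "gke-l7-gxlb-mc",
}


def service_export(name: str, namespace: str) -> Dict:
    # Applied in every cluster that runs the Service so the fleet can import it.
    return {"apiVersion": "net.gke.io/v1", "kind": "ServiceExport",
            "metadata": {"name": name, "namespace": namespace}}


def gateway(name: str, namespace: str, scope: str, exposure: str) -> Dict:
    return {
        "apiVersion": GATEWAY_API, "kind": "Gateway",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "gatewayClassName": GATEWAY_CLASSES[(scope, exposure)],
            "listeners": [{"name": "http", "protocol": "HTTP", "port": 80,
                           "allowedRoutes": {"kinds": [{"kind": "HTTPRoute"}]}}],
        },
    }


def weighted_route(name: str, namespace: str, gateway_name: str, hostname: str,
                   backends: List[Tuple[str, int, int]]) -> Dict:
    """backends: (ServiceImport name, port, weight) tuples."""
    return {
        "apiVersion": GATEWAY_API, "kind": "HTTPRoute",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "parentRefs": [{"name": gateway_name}],
            "hostnames": [hostname],
            "rules": [{"backendRefs": [
                {"group": "net.gke.io", "kind": "ServiceImport",
                 "name": svc, "port": port, "weight": weight}
                for svc, port, weight in backends
            ]}],
        },
    }


if __name__ == "__main__":
    items = [
        gateway("external-http", "store", "global", "external"),
        # Hypothetical canary: store-v1 and store-v2 are separately exported Services.
        weighted_route("store-route", "store", "external-http", "store.example.com",
                       [("store-v1", 8080, 90), ("store-v2", 8080, 10)]),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Keeping the Gateway and the HTTPRoute separate reflects the Gateway API's role split: a platform team can own the Gateway while application teams manage their own routes.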

Limitations of Multi Cluster Gateway to consider

- Support: At the time of writing, multi-cluster Gateways in the GKE Gateway controller were in the Preview launch stage, which carries no SLA or technical support.

- Complexity: Setting up and managing multi-cluster Gateways can be complex. It involves fleet registration, multi-cluster Services, a designated config cluster, load balancers, and networking components, which makes configuration and maintenance challenging.

- Latency: While Google Cloud offers global load balancing, there may still be some latency introduced when traffic is routed between clusters in different regions or zones. This latency can impact application performance, especially for latency-sensitive workloads.

- Data consistency: Ensuring data consistency across multiple clusters can be challenging. You need to implement strategies like data replication, synchronization, or distributed databases to maintain data integrity.

- Cost: Running multiple GKE clusters and global load balancers can increase operational costs. It’s important to carefully plan your infrastructure to optimize cost-effectiveness.

- Limited cross-region support: Some Google Cloud services and features may have limitations when used across multiple regions or zones. It’s essential to be aware of these limitations when designing a Multi-Cluster Gateway.

- Data transfer costs: Data transfer between Google Cloud regions or zones may incur additional costs, which can impact your overall budget.

- Security and compliance: Ensuring consistent security policies and compliance across multiple clusters can be challenging. Managing identity and access control, auditing, and encryption needs to be carefully orchestrated.

- Service discovery: Backends are discovered through multi-cluster Services (ServiceExport and ServiceImport resources), which must be enabled for the fleet and configured in every cluster that serves traffic.

- Fleet prerequisites: Multi-cluster Gateway is a managed GKE capability rather than something you assemble from separate components, but it only works with GKE clusters registered to the same fleet, with one of them designated as the config cluster.

4. Anthos Service Mesh

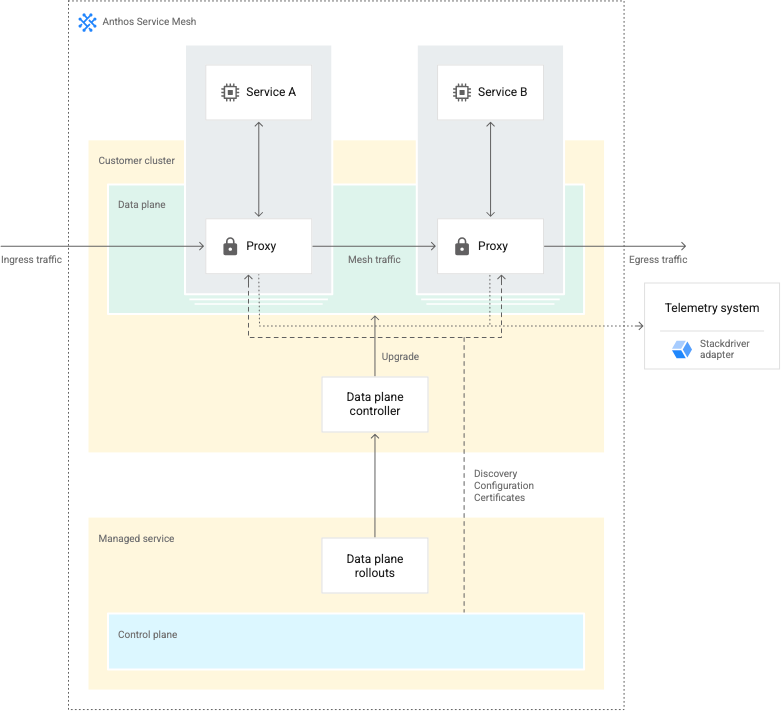

Anthos Service Mesh is a powerful cloud-native service management platform offered by Google Cloud. It allows organizations to connect, secure, monitor, and manage services running on Kubernetes clusters, whether they are on-premises, in the cloud, or in hybrid environments.

Key features of Anthos Service Mesh include:

- Service-to-service communication: It facilitates secure and reliable communication between microservices, regardless of their location. This enhances application resilience and allows for smooth deployment across clusters.

- Traffic management: Anthos Service Mesh offers advanced traffic management capabilities, such as A/B testing, canary deployments, and dynamic routing, enabling organizations to optimize service delivery.

- Security and policy enforcement: It provides robust security features like identity-based authentication, authorization, and encryption. Policies can be consistently enforced across all services.

- Observability: Anthos Service Mesh integrates with Google’s Cloud Monitoring and Cloud Logging, providing deep insights into application performance and helping identify issues faster.

- Multi-cluster support: It supports multi-cluster deployments, making it suitable for complex, distributed architectures.

- Service mesh telemetry: It collects telemetry data, helping organizations understand how their services are behaving, and provides valuable information for troubleshooting and optimization.

In summary, Anthos Service Mesh in Google Cloud simplifies the management of microservices and helps organizations build, deploy, and maintain applications more efficiently, securely, and reliably in a multi-cluster, multi-cloud, or hybrid environment. It’s a valuable tool for modernizing applications and embracing cloud-native development practices.
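Anthos Service Mesh is based on Istio, so its traffic management is expressed with Istio resources. As a hedged sketch of the canary and resilience features listed above, the Python below builds a `DestinationRule` that defines `v1` and `v2` subsets by Pod label and enables outlier detection (Istio's form of circuit breaking), and a `VirtualService` that sends most requests to `v1` and a configurable percentage to `v2`. The host, namespace, labels, thresholds, and the `v1beta1` API version are placeholder assumptions.

```python
import json
from typing import Dict

ISTIO_API = "networking.istio.io/v1beta1"  # assumption: adjust to your mesh version


def destination_rule(host: str, namespace: str) -> Dict:
    return {
        "apiVersion": ISTIO_API, "kind": "DestinationRule",
        "metadata": {"name": host, "namespace": namespace},
        "spec": {
            "host": host,
            "trafficPolicy": {
                # Eject an endpoint for 30s after 5 consecutive 5xx responses.
                "outlierDetection": {"consecutive5xxErrors": 5,
                                     "interval": "10s",
                                     "baseEjectionTime": "30s"},
            },
            # Subsets select Pods by their `version` label.
            "subsets": [{"name": v, "labels": {"version": v}} for v in ("v1", "v2")],
        },
    }


def canary_virtual_service(host: str, namespace: str, canary_percent: int) -> Dict:
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return {
        "apiVersion": ISTIO_API, "kind": "VirtualService",
        "metadata": {"name": host, "namespace": namespace},
        "spec": {
            "hosts": [host],
            "http": [{"route": [
                {"destination": {"host": host, "subset": "v1"},
                 "weight": 100 - canary_percent},
                {"destination": {"host": host, "subset": "v2"},
                 "weight": canary_percent},
            ]}],
        },
    }


if __name__ == "__main__":
    items = [destination_rule("reviews", "shop"),
             canary_virtual_service("reviews", "shop", 10)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Unlike the load balancer-level split a Gateway performs for incoming traffic, these rules are enforced by the sidecar proxies, so they also apply to service-to-service calls inside and across clusters.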

Feature comparison of solutions

| Feature | Anthos Service Mesh (ASM) | Multi Cluster Ingress (MCI) | Multi Cluster Gateway (MCG) | Standalone network endpoint groups (NEGs) |
| --- | --- | --- | --- | --- |
| Purpose | Service mesh for managing and securing microservices | Distributing external HTTP(S) traffic across clusters | Distributing external or internal HTTP(S) traffic across clusters with the Kubernetes Gateway API | Container-native load balancing to GKE Pods through a load balancer you configure yourself |
| Traffic routing | Intra-cluster and inter-cluster routing with advanced features | Host- and path-based routing of external traffic | Host-, path-, and header-based routing with traffic splitting and mirroring | Routing defined in a URL map you manage, across one or more clusters |
| Load balancing | Ingress and egress load balancing within and between clusters | Global load balancing for external traffic | Global or regional, external or internal layer 7 load balancing | Load balancing across NEGs from one or more clusters |
| Security features | Identity-based authentication, authorization, encryption | SSL/TLS termination, Cloud Armor (WAF) | SSL/TLS termination, security policies such as Cloud Armor | SSL/TLS termination at a target HTTPS proxy you configure |
| Health checks | Advanced health check configurations | Basic health checks | Health checks with custom rules | Health checks you create and configure manually |
| Protocol support | HTTP, gRPC, TCP, and others | HTTP(S) | HTTP(S) | HTTP, HTTPS |
| Global reach | Supports multi-cloud and hybrid deployments | Clusters across regions in one fleet | Clusters across regions in one fleet | Any clusters whose NEGs you attach to the load balancer |
| Observability | Deep insights into service behavior, telemetry, and monitoring | Basic observability for external traffic | Basic observability for load-balanced traffic | Limited observability |
| Managing complexity | Moderate to high complexity due to advanced features | Moderate complexity for external traffic management | Moderate complexity (fleet, multi-cluster Services, config cluster) | Simple Kubernetes configuration, but the load balancer is built and maintained manually |
| Multi-cloud / hybrid support | Supports multi-cloud and hybrid environments | Limited to Google Cloud | Limited to Google Cloud | Limited to Google Cloud |
| Data transfer costs | Data transfer costs between clusters and regions | Data transfer costs for external traffic | Data transfer costs between clusters | Data transfer costs for external traffic |
| SLA guarantee | No inherent SLA guarantee; depends on configuration and infrastructure | No inherent SLA guarantee; depends on configuration | No inherent SLA guarantee; depends on configuration | No inherent SLA guarantee; depends on configuration |

Conclusion

Multi Cluster Ingress vs Multi Cluster Gateway

Choosing between GKE Multi Cluster Ingress and Multi Cluster Gateway depends on your specific requirements and the architecture of your application. Both options provide solutions for managing traffic across multiple clusters in GKE.

GKE Multi Cluster Ingress is a native GKE feature that exposes services deployed in multiple clusters through a single external IP address and load balancer. It uses the MultiClusterIngress and MultiClusterService custom resources, which are modeled on the Kubernetes Ingress and Service APIs, and provides layer 7 (HTTP/HTTPS) load balancing. GKE Multi Cluster Ingress is suitable when you primarily need HTTP/HTTPS-based traffic routing and load balancing capabilities.

On the other hand, Multi Cluster Gateway is built on the Kubernetes Gateway API and implemented by the GKE Gateway controller; it is not part of the Istio service mesh. It covers the same HTTP/HTTPS use cases as MCI and adds more expressive traffic management, such as weighted traffic splitting, traffic mirroring, and header-based routing, as well as internal and regional load balancers. Its role-oriented resources (a Gateway owned by the platform team, HTTPRoutes owned by application teams) also make it easier to share across teams. Capabilities such as circuit breaking, fault injection, distributed tracing, and service-to-service traffic over non-HTTP protocols like TCP come from a service mesh such as Anthos Service Mesh, which is based on Istio, rather than from the Gateway.

In summary, if you primarily require HTTP/HTTPS-based traffic routing and load balancing across multiple clusters, GKE Multi Cluster Ingress is a straightforward option. If you need more advanced traffic management, such as canary splits, mirroring, header-based routing, or internal load balancing, Multi Cluster Gateway is the more suitable choice, and a service mesh can be added for protocols and features beyond HTTP/HTTPS. Evaluate your specific requirements and compare the features and capabilities of both options before making a decision.

Multi Cluster Ingress vs Anthos Service Mesh

In summary, while Multi Cluster Ingress focuses on external traffic routing and load balancing across multiple clusters or regions, Anthos Service Mesh is more concerned with managing and securing internal communication between services within a cluster or across clusters. Multi Cluster Ingress acts as an entry point, while Anthos Service Mesh provides advanced service mesh capabilities to enhance observability, control, and security in a microservices architecture.

Multi Cluster Gateway vs Anthos Service Mesh

In summary, the main difference lies in their primary focus and scope of functionality. Multi Cluster Gateway is primarily responsible for managing external traffic and load balancing across multiple clusters, while Anthos Service Mesh focuses on internal service-to-service communication within and across clusters, providing advanced networking, observability, and security features. They can be used together in a multi-cluster environment, where the Multi Cluster Gateway handles external traffic and Anthos Service Mesh provides service-level control and security within the clusters.
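The comparisons in this conclusion can be condensed into a small decision helper. It is a hypothetical, deliberately coarse encoding of the guidance above (the requirement flags are our own naming, not any Google Cloud API), meant as a checklist rather than an authoritative selection tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Requirements:
    # Hypothetical flags summarizing the comparisons in this article.
    service_to_service: bool = False       # mTLS, retries, tracing between services
    non_http_protocols: bool = False       # e.g. TCP between services
    advanced_l7_routing: bool = False      # weighted splits, mirroring, header matching
    internal_or_regional_lb: bool = False  # clients inside the VPC or a single region
    managed_controller: bool = True        # False to wire NEGs into a load balancer yourself


def recommend(req: Requirements) -> List[str]:
    """Suggested entry point first, then an optional mesh layer."""
    picks: List[str] = []
    if not req.managed_controller:
        picks.append("Standalone NEGs")
    elif req.advanced_l7_routing or req.internal_or_regional_lb:
        picks.append("Multi Cluster Gateway")
    else:
        picks.append("Multi Cluster Ingress")
    if req.service_to_service or req.non_http_protocols:
        # The mesh complements the entry point rather than replacing it.
        picks.append("Anthos Service Mesh")
    return picks


if __name__ == "__main__":
    print(recommend(Requirements(advanced_l7_routing=True, service_to_service=True)))
```

The helper returns a list rather than a single answer because, as noted above, an entry point (MCI, MCG, or standalone NEGs) and a service mesh solve different problems and are often combined.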
