- Google Cloud

- Articles & Information

- Community Blogs

- Building an AI-powered BigQuery Data Exploration A...

Building an AI-powered BigQuery Data Exploration App using Function Calling in Gemini

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Introduction

Function calling in Gemini allows developers to define custom functions that can output structured data from generative models and invoke external APIs. This enables LLMs to access real-time information and interact with various services, such as SQL databases, customer relationship management systems, document repositories, and anything else with an API!

In the previous blog post on The power of function calling: A native framework to connect Gemini to external systems, data, and A..., we gave an overview of how function calling works, why developers love it, and some example use cases.

In this blog post, we’ll use function calling in Gemini to build an example app with an agent that sends live commands and generated SQL queries to BigQuery (BQ) to help users explore SQL databases using natural language. And we’ll demonstrate this capability with a detailed technical explanation and a step-by-step guide of how we built the example app.

Example app: SQL Talk with BigQuery in natural language

This app demonstrates the power of Gemini's function calling capabilities and enables users to query and understand their BigQuery databases using natural language. Users don’t need to write up complex SQL queries to work with databases, rather, they can interact with the data conversationally.

Try using the SQL Talk to BigQuery demo app now!

The demo app uses theLook e-commerce dataset, which is a synthetic e-commerce and digital marketing dataset available within BigQuery’s public datasets. The dataset contains information about customers, products, orders, logistics, web events and digital marketing campaigns. This is just an example dataset to explore in the demo app, but you can adapt this approach to any other dataset or SQL database that you want.

Ask questions! Type questions about the data in natural language, such as:

- How many different brands do we have in stock?

- Show me the price range of products in the swimwear category.

- Which distribution centers have the least available inventory?

And once you’ve experimented with the example app, you can also dive into the source code to see how it was built as we walk through the high-level implementation stages in the following sections.

How the SQL Talk app works

How we built it: We define four functions using Gemini's function calling API to: list datasets, list tables, get table details, and execute SQL queries. These functions accept parameters and descriptions, which Gemini uses to identify and invoke them based on user prompts. We've bundled these functions into a tool and registered it with Gemini.

How it works at runtime: The app uses function calling in Gemini to process the input prompt, select an appropriate function and parameters to help answer the user’s query, then the application code executes the respective queries and commands in BigQuery and returns the results in plain language. The app also supports multi-turn conversations using the chat modality of Gemini, which enables in-depth explorations of data by maintaining context. The integration with BigQuery is handled via the BigQuery Python SDK.

In the following sections, we’ll walk through the process of how we defined each major component of the application’s functionality and how we solved each challenge along the way.

Designing function calls by thinking like an expert

Imagine yourself in the role of a SQL expert. You need to answer user's questions about data in BigQuery using SQL queries. Which specific datasets or tables would you need to explore? What SQL queries would you write? When writing SQL queries against your tables, do common patterns emerge? How would you wrap these queries in Python to call them using the Python SDK for BigQuery?

During this step, it’s important to consider both the 1) reasoning and thought process that you would follow to select an appropriate function or SQL query, and 2) syntax of the function or SQL query that you would execute to interact with the database. When working with function calling, you are essentially trying to extract these two components from your own patterns of thinking and replicate them in the Gemini function calling framework so that it can use the same tools, reasoning steps, and function calls that you would perform as a SQL expert.

Think of this process as teaching someone about the purpose of different tools and how to use them to perform various actions. Here we do the same thing and teach Gemini how to interact with an external system on the user’s behalf. In other words, Gemini will select and output BQ commands as structured data objects that we can use to make actual API calls to BQ in our application code. Next, we’ll get to writing code and defining functions and tools.

Defining tools for the generative model

Now that you’ve pulled out a common set of 3 to 5 functions or queries that you would like to codify, you can define a set of functions using the Gemini API for function calling that represent BQ SQL commands. In this app, we’ve specified four function declarations:

- A BQ command to list the available datasets in a given Google Cloud project

- A BQ command to list the tables within a dataset

- A BQ command to get details about a table within a dataset (e.g., fields and data types)

- A SQL query to send to BQ to learn about the data to answer the user’s question

Each function declaration includes a corresponding description and parameter definitions. Here’s example code for one of those four functions, particularly the function that performs a SQL query.

sql_query_func = FunctionDeclaration(

name="sql_query",

description="Get information from data in BigQuery using SQL queries",

parameters={

"type": "object",

"properties": {

"query": {

"type": "string",

"description": "SQL query on a single line that will help give quantitative answers to the user's question when run on a BigQuery dataset and table. In the SQL query, always use the fully qualified dataset and table names.",

}

},

"required": [

"query",

],

},

)

This is an interesting function declaration since we are giving the generative model a function that takes in a single input argument, which seems simplistic. However, rather than a specific option or text input for this single parameter, we are also asking Gemini to fill in this input parameter with a valid SQL query. This generated SQL query will be executed after the user sends their prompt so that the model can learn about specific details within the data warehouse.

Of course, you can also specify multiple function parameters along with their types, and the description can help Gemini function calls confirm to the type and format of the structured output that you need to call a function, such as names, account numbers, latitude and longitude, currencies, or any other inputs that your actual functions need.

Note that the text within the function descriptions, parameter descriptions, and parameter types are not only there as good coding practices or for readability purposes. They are used by Gemini function calling at runtime to 1) select an appropriate function based on the user’s prompt, and 2) extract parameters from the user’s query to fill the function’s arguments. As an example of how this function call behaves in the demo app, here’s an example of a user prompt and the resulting function call for the SQL query function defined above:

User Prompt:

What percentage of orders are returned?

Model function call response:

Function name: sql_query

Function parameters: {'query': 'SELECT ROUND((COUNT(DISTINCT IF(returned_at IS NOT NULL, order_id, NULL)) / COUNT(DISTINCT order_id)) * 100, 2) AS return_rate FROM thelook_ecommerce.orders'}

Making functions available as tools for Gemini

Next, we’ll collect the four functions that we defined into a Tool so that we can register it with Gemini. And we’ll initiate a chat session with Gemini and specify the registered Tool. Note that because we’ve specified the tools keyword argument here when instantiating Gemini, the Tool and its respective functions will be used by Gemini in every conversation turn.

sql_query_tool = Tool(

function_declarations=[

list_datasets_func,

list_tables_func,

get_table_func,

sql_query_func,

],

)

model = GenerativeModel(

"gemini-1.0-pro",

generation_config={"temperature": 0},

tools=[sql_query_tool],

)

You can also specify Tools in a specific conversation turn, or you can specify Tools in the context of a text prompt instead of a chat prompt. And this is where you can specify the temperature, maximum number of output tokens, and other generative model parameters.

Handling function calls and function responses

The next part of the application that we need to build is the logic to handle the actual function call. When Gemini returns a function call as a structured data object, you can use this information in your application code to call your desired API endpoint in any language, library, or framework that you'd like.

To execute the API calls in our application, we use if statements to determine which function Gemini selects. We’ve written the equivalent code using the BigQuery SDK for Python, and we can pass the parameters from Gemini’s function call response to our templated API calls.

# Extract parameters from the function call response from Gemini

params = {}

for key, value in response.function_call.args.items():

params[key] = value

# Execute functions and API calls based on Gemini's selected function

if response.function_call.name == "list_datasets":

api_response = client.list_datasets()

api_response = str([dataset.dataset_id for dataset in api_response])

if response.function_call.name == "list_tables":

api_response = client.list_tables(params["dataset_id"])

api_response = str([table.table_id for table in api_response])

Once we get a response from the API (in this case, BigQuery), we’ll pass the information in the API response back to Gemini.

# Return an API response to Gemini as a followup to the original prompt and function call

response = chat.send_message(

Part.from_function_response(

name=response.function_call.name,

response={

"content": api_response,

},

),

)

Finally, when we get a response from Gemini after returning the API response, Gemini will either return a natural language summary of the API response that answers the user’s question -or- Gemini will request a subsequent function call to make so that it can learn additional information and explore the data in more detail.

To determine the type of response that Gemini returns, we’ll implement a while loop that inspects the resulting structured data object. If the model returns a function call, we can continue calling functions. If the model returns a model summary response, then we can stop calling functions and pass the summary response to the end user.

# Determine if Gemini returned a function call response or a summary response

while function_calling_in_process:

if response.function_call.name == "list_datasets":

[...]

if response.function_call.name == "list_tables":

[...]

Note that if statements and while loops are intentionally used here for the sake of simplicity and readability of the code and data flow in this example app. This design choice means that you might have tried sending a complicated question to the example app, and it either responded with an inaccurate answer, or resulted in a runtime error. Towards the end of this blog post, we’ll discuss the limitations of this approach and how we would handle productionizing an application such as this one.

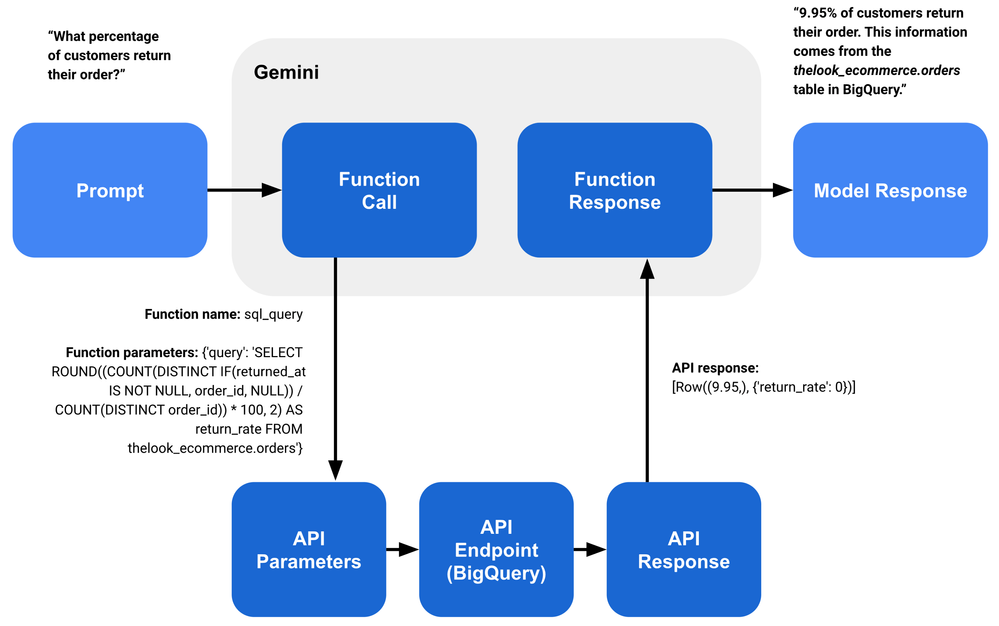

As an example of how a full conversation turn behaves in the demo app, here’s an example of a user prompt, intermediate function call and API responses, and the final model summary response for this conversation turn:

User Prompt:

What percentage of orders are returned?

Model function call response:

Function name: sql_query

Function parameters: {'query': 'SELECT ROUND((COUNT(DISTINCT IF(returned_at IS NOT NULL, order_id, NULL)) / COUNT(DISTINCT order_id)) * 100, 2) AS return_rate FROM thelook_ecommerce.orders'}

API response:

API response: [Row((9.95,), {'return_rate': 0})]

Model summary response:

9.95% of customers return their order. This information comes from the thelook_ecommerce.orders table in BigQuery.

Building a frontend for your application

Up to this point, you might have done all of your prototyping and testing in a Python script, IDE, or notebook. Now it’s time to take all of the application code and put a nice frontend interface on it for end users! We want our frontend app to be something that users can interact with naturally and intuitively. And while we want to give summarized answers in natural language, we also want to pass back the details of the SQL queries and BQ commands to the user for transparency, auditability, and traceability of their questions and answers.

In this example app, we used the Streamlit web framework for Python, but you can use your favorite framework of choice, whether it’s a Python web framework, a web and mobile app built with Flutter, or a native web application with React, Angular, or Svelte!

For the sake of simplicity, we won’t show all of the UI application code here. Instead, we’ll walk through the user journey in the example app in terms of user interactions, data flow, and state handling:

- Build the application layout, including the app header, containers, input text boxes, etc.

- Handle user inputs such as typing, sending, and displaying prompts in the chat interface

- Write out logs and information coming back from Gemini while the user is waiting for an answer

- On the backend, Gemini is hard at work selecting functions and extracting parameters while the application code makes one or more function calls and returns them to Gemini

- Once Gemini outputs a model summary response, we render the response for the end user, and collapse the runtime logs in a dropdown. This allows the user the ability to refer back to the backend logs and details for a given question and answer.

- For the next input prompt from the user, we repeat the above process in subsequent conversation turns within the chat session in Gemini.

And of course, there’s no need to handle all of this logic at once! Start simple by starting with a generalized chat interface, then inject placeholder user prompts and model responses. Following that, you can hook into actual API calls to Gemini to handle function calls, function responses, and model responses. And finally, spend some time polishing the UI/UX, get it into the hands of users, and iterate!

Lessons learned and limitations

Now, we’ll walk through some of the lessons learned and limitations that we ran into when developing this sample app.

Managing costs: Since our app can generate BQ SQL commands, it could also generate large and complex queries or get lots of usage. How can you ensure that users don't execute so many queries that this app becomes very expensive to run?

You’ll want to control costs by implementing custom quotas at the project level or the query level. In our demo app, we’ve implemented a byte limit per query, and the project level can also have quotas that are limited by cost or bytes accessed per day.

Data governance and security: How can I ensure that users aren't using SQL injection or other methods to delete or modify records and that all fine-grained access control is enforced?

Use a service account with restricted IAM permissions and use fine-grained access control policies in BQ. You can add a row-level or column-level policy that exists on a table that prevents users from accessing certain data. but you need to apply this to the service account that's used in the app, not just the user account itself.

Response quality: As with any generative model, the app will sometimes generate non-existent field names or inaccurate answers. How can we handle this better in our sample app?

Taking the same approach as you would when prompt tuning or prompt engineering, you can improve the accuracy and quality of the function calls and model summaries by adding more detail to your function and parameter descriptions.

Business logic and error handling: While the if statements and while loops are easy to implement, our example app is quite sensitive to failed or malformed queries and can result in errors or inaccurate answers for end users.

We could have added a lot more error handling, try/except statements, rewrites/retries, additional model prompts, and other logic to ensure the robustness and stability of the app while it makes function calls and API requests. But the good news is that there are strong patterns and frameworks that already exist within the generative model ecosystem that we can lean on and reuse here. Rather than having to write all of that logic ourselves, we should implement an orchestration layer in this application that involves a reAct agent using a framework such as LangChain or LlamaIndex. We’ll discuss specific ways of handling this in a future blog post!

Monolithic and intertwined app logic and frontend code: Our application is heavily coupled in terms of function calling logic, app logic, and UI logic. What do we need to change to improve the modularity and scalability of this app?

The example app is a concise proof of concept and learning experience of how we can implement function definitions, calls to Gemini, and the complete frontend in about 250 lines of Python code. If we were to move this app into production, we should break up the app into modular components. The good news about the Gemini API and its function calling SDK is that you can use whichever frontend frameworks that you prefer to implement whichever user experience and look and feel that you love the most!

Open-ended nature of the chat session: Our app is intended to be used as an open-ended, generalized, and conversational data exploration tool, mostly for teaching purposes and patterning rather than literal use of the example code. What if you wanted to implement a more specific workflow that is not a chatbot?

You could modify the structure of the app to be more tightly scoped around a particular business use case or specific type of user. We also implemented this app with the chat modality in Gemini so that it would be easy for a wide range of developers and Gemini users to try out the experience for themselves. But you don’t have to follow that pattern in your own applications! Chatbots are useful for open-ended conversations with data, but you might also want to drive this user experience as a linear set of steps in a specific workflow, or as a middleware application that takes certain inputs and generates specific outputs.

Summary

Function calling in Gemini helps you connect generative models to real-world data via API calls to accelerate the development of AI agents. By connecting LLMs to external systems and providing you with full flexibility and control in development, function calling enables you to build “online” generative AI applications that always have access to the latest data and information.

Now it’s your turn! Try examining the source code, running the app locally, and rewriting the function definitions to try new things! Consider adding tools to perform data visualization, work with other databases, or connect to other APIs such to fetch information about the weather, translate text between languages, get the latest currency exchange rates, or other awesome workflows that you think of!

To get started with function calling in Gemini, you can try interacting with data in BigQuery using the live demo app, view and extend the source code, or refer to the documentation. And check out our video below for live demos and Q&A!

We look forward to having you try things out and hearing your feedback in the Google Cloud Community forums!

Twitter

Twitter