- When re-creating an Apigee X organization is neede...


Introduction

Apigee X customers may encounter situations where they need to re-create a complete Apigee X organization.

This typically happens in one of two cases:

- An Apigee X customer wants to use Private Service Connect (PSC) for northbound connectivity, but the Apigee X organization does not support the PSC feature. This is typically the case for Apigee X organizations created before January 2022

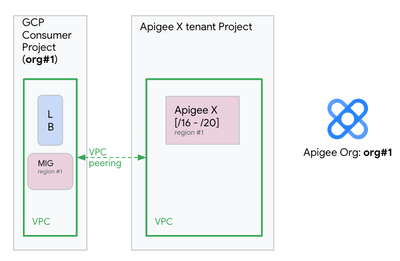

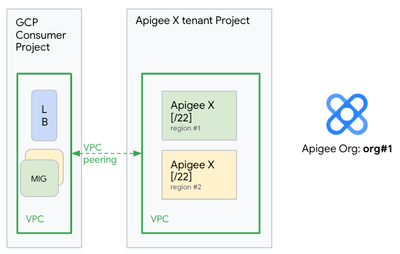

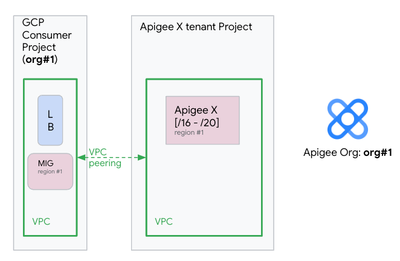

- In older Apigee X organizations (created before January 2022), workloads cannot scale beyond a certain point to support more environment attachments or proxy deployments. These organizations were typically provisioned with /16 to /20 IP ranges, as shown in the following picture for the Apigee X organization org#1:

This article presents a complete process for the case where an Apigee X organization needs to be re-created.

We consider two possibilities:

- The Apigee X organization is non-critical, meaning that it can be re-created from scratch

- The Apigee X organization is critical and the re-creation process requires a zero-downtime approach: this is, for instance, the case for a production organization, regardless of the number of Apigee runtimes that are part of the organization

Non-critical Apigee X organization

For a non-critical Apigee X organization, the following steps re-create the instance without re-creating the organization itself:

- Delete the Apigee X instance

- Delete the private connection / VPC peering to release the IP range on the service network

- Delete the Managed Instance Group (MIG) used by the Internal and/or External Load Balancer

- Recreate the following elements in order:

  - Private service access / VPC peering with the new IP range (/22)

  - Apigee X instance

  - MIG + LB configuration

- Deploy the Apigee objects from your CI/CD or manually

Important: as the Apigee X organization has not been deleted, integrated developer portals do not need to be re-created.

The same applies to all organization-scoped objects such as API products, client applications, and developers.

Critical Apigee X organization

A critical organization is one that serves production traffic. When a critical Apigee X organization must be re-created, a zero-downtime approach is required.

Re-creating with zero downtime requires creating additional, temporary Apigee X instances.

Important Considerations

As Apigee has a hard limit of one instance per region, you cannot create new Apigee X instances in the same region and switch your workloads.

Due to this limitation, you should expand to a new region temporarily, following this documented process to perform this re-creation.

Reach out to Apigee support to request a temporary exception on your entitlement that increases your region limit by one.

During the re-creation process the Apigee X organization is not deleted, so integrated developer portals do not need to be re-created.

Re-creating an instance with zero-downtime

For clarity, the re-creation process is divided into three parts:

- Part 1: Add a new Apigee X instance in a new region, on the same Apigee organization

- Part 2: Delete the Apigee X instance that needs to be re-created

- Part 3: Delete the temporary Apigee X instance

Part 1: Add a new Apigee X instance in a new region, on the same Apigee organization

We start with the Apigee X instance that needs to be re-created, as shown in the following picture:

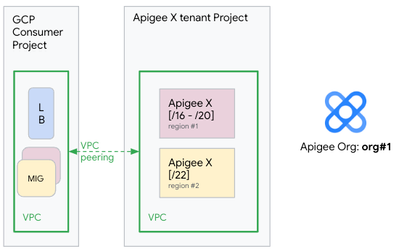

During the re-creation process we use two GCP regions:

- Ensure your entitlement allows provisioning of an additional Apigee instance in a new region.

- Set up an additional Apigee instance in a new region

  - This requires additional IP ranges on the service network (a /22 and a /28 block)

  - The /22 block is configurable, whereas the /28 block is auto-assigned from any available range associated with service networking
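To put these block sizes in perspective, an IPv4 CIDR block with prefix length n contains 2^(32 - n) addresses. The sketch below is illustrative arithmetic only; `cidr_size` is a hypothetical helper name and is not part of gcloud or the Apigee API.

```shell
# Hypothetical helper: print the number of IPv4 addresses in a CIDR block,
# given its prefix length (for example 22 for a /22).
cidr_size() {
  echo $(( 1 << (32 - $1) ))
}

cidr_size 16   # largest legacy range mentioned in this article: 65536
cidr_size 22   # configurable range of a new instance: 1024
cidr_size 28   # auto-assigned range of a new instance: 16
```

A /22 plus a /28 therefore consumes far fewer addresses on the service network than the /16 to /20 ranges used by older organizations.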

In the same Apigee X organization, we create another Apigee X instance in a different region (region#2) using a /22 IP range, as shown here:

Process details for part 1

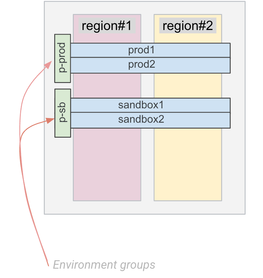

Create a new Apigee instance - through the Web UI, Apigee APIs or Terraform scripts. Attach existing environment(s) to the newly created instance.

In the example below, these environments are prod1, prod2 (attached to the p-prod environment group) and sandbox1, sandbox2 (attached to the p-sb environment group):

If your backend requires IP allow-listing, reserve static southbound NAT IP addresses.

- Ensure the new IPs are activated on the Apigee instance and updated on your backend firewalls to allow-list them before proceeding to the next step

Configure northbound routing to provide internal only access or external access.

- Ensure the new region is taking traffic similar to other region(s) without any issues before proceeding to the next step.

Part 2: Delete the Apigee X instance that needs to be re-created

At this point, the two Apigee X instances are distributed across two GCP regions (region#1 and region#2) and are part of the same Apigee X organization (org#1).

This is important as the API Products, developer applications, developers and security tokens (OAuth tokens) are shared between the two instances in the two GCP regions.

A dedicated Managed Instance Group (MIG) of network bridge VMs is created in region #2 so the external load balancer can operate on the two GCP regions accordingly.

The next step is to delete the Apigee X instance that needs to be re-created, as shown in the following picture:

Process details for part 2

Enable connection draining on the load balancer backend service for the region that is to be deleted and re-created, so that in-progress requests are given time to complete when the backend is removed from the load balancer.

Remove the backend that corresponds to the region you want to delete and re-create from the load balancer's backend service.

Get the Apigee Instance in the region for reference and make a note of the response [command]

Get any reserved southbound NAT Addresses that are in 'ACTIVE' state, associated with the instance. [command]

- Remove these IPs from the allow-list of target endpoints, as these IPs will be released.

- If there are no 'ACTIVE' IPs in the list, no action is required.
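As a rough illustration of the NAT address check above, the following sketch filters a natAddresses response down to the IPs in the 'ACTIVE' state. It assumes that `jq` is installed and that the response contains a `natAddresses` array whose entries carry `ipAddress` and `state` fields; verify this shape against your own response. `active_nat_ips` is a hypothetical helper name.

```shell
# Assumption: jq is available and the response looks like
#   {"natAddresses":[{"name":"...","ipAddress":"...","state":"ACTIVE"}, ...]}
# Prints one ACTIVE IP address per line; prints nothing if there are none.
active_nat_ips() {
  jq -r '.natAddresses[]? | select(.state == "ACTIVE") | .ipAddress'
}

# Usage (requires AUTH, PROJECT_ID and INSTANCE_NAME to be set):
#   curl -s -H "$AUTH" \
#     "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/instances/$INSTANCE_NAME/natAddresses" \
#     | active_nat_ips
```

An empty result corresponds to the "no action is required" case.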

Delete the Apigee Instance in the region [command]

- Check the status of the operation periodically.

This will take ~15-45 mins to complete.
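Checking the operation status periodically can be scripted. The sketch below is one possible approach, under the assumption that the delete call returns a standard long-running operation whose `name` field (of the form organizations/<org>/operations/<id>) can be fetched with a GET until it reports `"done": true`. The helper names and the `OPERATION_NAME` variable are hypothetical.

```shell
# Hypothetical helpers; the names are illustrative only.

# Succeeds when the operation JSON read from stdin contains "done": true.
operation_done() {
  grep -Eq '"done"[[:space:]]*:[[:space:]]*true'
}

# Fetch the operation returned by the delete (or create) call.
# Assumes OPERATION_NAME holds the "name" field of that response and
# AUTH is set as in the reference section of this article.
fetch_operation() {
  curl -s -H "$AUTH" "https://apigee.googleapis.com/v1/$OPERATION_NAME"
}

# wait_for_operation FETCH_FN [INTERVAL_SECONDS] [MAX_TRIES]
# Calls FETCH_FN until it reports completion; returns 1 if it never does.
wait_for_operation() {
  fetch_fn=$1
  interval=${2:-60}
  max_tries=${3:-90}
  tries=0
  while [ "$tries" -lt "$max_tries" ]; do
    if "$fetch_fn" | operation_done; then
      return 0
    fi
    tries=$((tries + 1))
    sleep "$interval"
  done
  return 1
}

# Usage: wait_for_operation fetch_operation 60 90
```

A completed operation is not necessarily a successful one, so inspect the final response for an `error` field before moving on.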

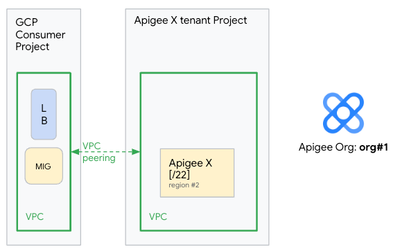

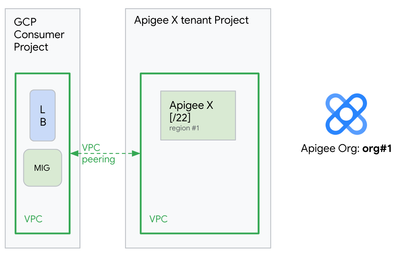

At this point we can re-create the Apigee X instance in region#1 and the network components (MIG) required to operate with an Apigee X instance in this original region.

The two Apigee X instances are distributed across the two GCP regions, as shown on this picture:

Create the Apigee Instance in the same region [command]

- The '/22' block can be from the range assigned previously to the instance.

- However, '/28' will be auto-assigned from any free range assigned to the service networking.

- As the new instance requires fewer IP addresses than the old one, you can optionally reduce the IP ranges allocated to service networking accordingly.

- Check the status of the operation periodically.

- This will generally take around 45 minutes but it can take up to 90 minutes.

Attach all the previously associated environment(s) to the re-created instance [command]

If your backend requires IP allow-listing, configure southbound NAT IP addresses. Skip this step otherwise.

- Reserve NAT IP Address [command]

- Activate NAT IP Address [command]

- Repeat the reservation and activation steps for as many NAT addresses as you need (usually 1 to 5 IP addresses)

- Allow-list these IPs in your backend firewalls before proceeding to the next step
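Repeating the reserve and activate calls for several addresses can be wrapped in two small loops. This is a minimal sketch built on the same endpoints as the commands in the reference section; the function names and the NAT names `nat-1` and `nat-2` are hypothetical. Reservation and activation are kept separate because a reservation may not complete instantly, so it is safer to confirm each address is reserved before activating it.

```shell
# Hypothetical helpers. Assumes AUTH, PROJECT_ID and INSTANCE_NAME are set
# as in the reference section of this article.
APIGEE_API="https://apigee.googleapis.com/v1"

# reserve_nat_ips NAME... : request one NAT address per name.
reserve_nat_ips() {
  for nat_id in "$@"; do
    curl -sS --fail -X POST -H "$AUTH" -H "Content-Type: application/json" \
      "$APIGEE_API/organizations/$PROJECT_ID/instances/$INSTANCE_NAME/natAddresses" \
      --data "{\"name\":\"$nat_id\"}" || return 1
  done
}

# activate_nat_ips NAME... : activate addresses that were reserved earlier.
activate_nat_ips() {
  for nat_id in "$@"; do
    curl -sS --fail -X POST -H "$AUTH" -H "Content-Type: application/json" \
      "$APIGEE_API/organizations/$PROJECT_ID/instances/$INSTANCE_NAME/natAddresses/${nat_id}:activate" \
      --data "{}" || return 1
  done
}

# Usage:
#   reserve_nat_ips nat-1 nat-2
#   (check the natAddresses list until both are reserved)
#   activate_nat_ips nat-1 nat-2
```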

If you are using the 'host IP address' to connect to Apigee through the managed instance-groups (MIG):

- Compare the endpoint IP of the newly created instance with the older instance. ('host' field in the GET Instance response) [command]

- If the host IP address has not changed, then it is not required to recreate the MIG. Simply attach the MIG backend to the backend-service [command]

- If the host IP address is different from the older instance, then,

- If you are using the PSC NEG to connect to Apigee:

Part 3: Delete the temporary Apigee X instance

Last but not least, we can now delete the temporary Apigee X instance that has been provisioned in region#2. At the end of the re-creation process, we have a new Apigee X instance in the required region (region#1) that is able to deal with the API traffic.

Process details for part 3

Repeat the steps provided in Part 2 to re-create all existing Apigee instances.

Delete the temporary instance (if any) created at the beginning of the re-creation process.

- Follow previous steps to seamlessly delete the instance without any interruptions.

Once re-creation is completed for all regions, notify Apigee support so that the temporary change in the entitlement can be reverted.

Reference: gcloud and cURL commands

This section details the gcloud and cURL commands that are used during the re-creation process discussed in this article.

Here is a list and description of the environment variables used in these commands:

| Variable name | Description |
|---|---|
| PROJECT_ID | GCP project identifier |
| BACKEND_SERVICE | Backend-service of the external load balancer |
| MIG_NAME | Name of the Managed Instance Group used as a bridge between the external load balancer and the Apigee X instance |
| RUNTIME_LOCATION | Region of the Apigee X instance |
| INSTANCE_NAME | Name of the Apigee X instance |
| APIGEE_ENV | Apigee environment |
| NAT_ID | Name to assign for a NAT IP |
| NEG_NAME | Network Endpoint Group name |
| APIGEE_ENDPOINT | Host IP of an Apigee X instance |
| VPC_NAME | Name of a VPC network |
| VPC_SUBNET | Name of a VPC subnet |
| SERVICE_ATTACHMENT | Service Attachment of an Apigee X instance |
| AUTH | Bearer token authorization header |
| DISK_ENCRYPTION_KEY | Disk key used for data encryption on the Apigee X runtime |

Enable connection draining

    gcloud compute backend-services update $BACKEND_SERVICE \
      --project $PROJECT_ID \
      --connection-draining-timeout=60 \
      --global

Remove a MIG backend from a backend-service

    gcloud compute backend-services remove-backend $BACKEND_SERVICE \
      --project $PROJECT_ID \
      --instance-group $MIG_NAME \
      --instance-group-region $RUNTIME_LOCATION \
      --global

Remove a PSC-NEG backend from a backend-service

    gcloud compute backend-services remove-backend $BACKEND_SERVICE \
      --project $PROJECT_ID \
      --network-endpoint-group $NEG_NAME \
      --network-endpoint-group-region $RUNTIME_LOCATION \
      --global

Get details of an Apigee X instance

    export AUTH="Authorization: Bearer $(gcloud auth print-access-token)"
    curl -X GET -H "$AUTH" \
      "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/instances/$INSTANCE_NAME"

Fetch NAT IP addresses of an Apigee X instance

    curl -X GET -H "$AUTH" \
      "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/instances/$INSTANCE_NAME/natAddresses"

Delete an Apigee instance

    curl -X DELETE -H "$AUTH" \
      "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/instances/$INSTANCE_NAME"

Create an Apigee instance

    curl -X POST -H "$AUTH" \
      -H "Content-Type: application/json" \
      "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/instances" --data @Instance.json

Instance.json:

    {
      "name": "$INSTANCE_NAME",
      "location": "$RUNTIME_LOCATION",
      "diskEncryptionKeyName": "$DISK_ENCRYPTION_KEY",
      "ipRange": "<OPTIONAL, Provide /22 range to assign to the instance>",
      "consumerAcceptList": "<OPTIONAL, Projects to allow connections for PSC service-attachment>"
    }

Attach an environment to an Apigee instance

    curl -X POST -H "$AUTH" \
      -H "Content-Type: application/json" \
      "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/instances/$INSTANCE_NAME/attachments" \
      --data "{\"environment\": \"$APIGEE_ENV\"}"
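One caveat about the create-instance call earlier in this reference: `curl --data @Instance.json` sends the file contents as-is, so placeholders such as `$INSTANCE_NAME` written inside the file are not expanded by the shell. A minimal sketch of one way to render the file from the environment variables follows. `render_instance_json` and the optional `IP_RANGE` variable are hypothetical names, and the values are assumed not to contain characters that need JSON escaping.

```shell
# Hypothetical helper: print an Instance.json body built from the
# environment variables used in this article. IP_RANGE is an optional,
# illustrative variable holding the /22 range for the instance.
render_instance_json() {
  printf '{\n'
  printf '  "name": "%s",\n' "$INSTANCE_NAME"
  printf '  "location": "%s",\n' "$RUNTIME_LOCATION"
  if [ -n "${IP_RANGE:-}" ]; then
    printf '  "ipRange": "%s",\n' "$IP_RANGE"
  fi
  printf '  "diskEncryptionKeyName": "%s"\n' "$DISK_ENCRYPTION_KEY"
  printf '}\n'
}

# Usage: render_instance_json > Instance.json
```

The rendered file can then be passed to the create call with `--data @Instance.json` as before.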

Reserve a NAT IP address on an Apigee instance

    curl -X POST -H "$AUTH" \
      -H "Content-Type: application/json" \
      "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/instances/$INSTANCE_NAME/natAddresses" \
      --data "{\"name\":\"${NAT_ID}\"}"

Activate a NAT IP address on an Apigee instance

    curl -X POST -H "$AUTH" \
      -H "Content-Type: application/json" \
      "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/instances/$INSTANCE_NAME/natAddresses/$NAT_ID:activate" \
      --data "{}"

Attach a MIG Backend to a backend-service

    gcloud compute backend-services add-backend $BACKEND_SERVICE \
      --project $PROJECT_ID \
      --instance-group $MIG_NAME \
      --instance-group-region $RUNTIME_LOCATION \
      --balancing-mode UTILIZATION --max-utilization 0.8 \
      --global

Delete a Network Endpoint Group (NEG)

    gcloud compute network-endpoint-groups delete $NEG_NAME \
      --region=$RUNTIME_LOCATION \
      --project=$PROJECT_ID

Create an Instance Template and a MIG

    gcloud compute instance-templates create $MIG_NAME \
      --project $PROJECT_ID \
      --region $RUNTIME_LOCATION \
      --network $VPC_NAME \
      --subnet $VPC_SUBNET \
      --tags=https-server,apigee-mig-proxy,gke-apigee-proxy \
      --machine-type e2-medium --image-family debian-10 \
      --image-project debian-cloud --boot-disk-size 20GB \
      --metadata ENDPOINT=$APIGEE_ENDPOINT,startup-script-url=gs://apigee-5g-saas/apigee-envoy-proxy-release/latest/conf/startup-script.sh

    gcloud compute instance-groups managed create $MIG_NAME \
      --project $PROJECT_ID --region $RUNTIME_LOCATION \
      --base-instance-name apigee-mig --size 2 --template $MIG_NAME

    gcloud compute instance-groups managed set-autoscaling $MIG_NAME \
      --project $PROJECT_ID --region $RUNTIME_LOCATION \
      --max-num-replicas 10 --target-cpu-utilization 0.75 --cool-down-period 90

    gcloud compute instance-groups managed set-named-ports $MIG_NAME \
      --project $PROJECT_ID --region $RUNTIME_LOCATION \
      --named-ports https:443

Create a Network Endpoint Group (for PSC)

    gcloud compute network-endpoint-groups create $NEG_NAME \
      --network-endpoint-type=private-service-connect \
      --psc-target-service=$SERVICE_ATTACHMENT \
      --region=$RUNTIME_LOCATION \
      --network=$VPC_NAME \
      --subnet=$VPC_SUBNET \
      --project=$PROJECT_ID

Attach a PSC-NEG backend to a backend-service

    gcloud compute backend-services add-backend $BACKEND_SERVICE \
      --project $PROJECT_ID \
      --network-endpoint-group $NEG_NAME \
      --network-endpoint-group-region $RUNTIME_LOCATION \
      --global

Delete a MIG & instance Template

    gcloud compute instance-groups managed delete $MIG_NAME \
      --project $PROJECT_ID \
      --region=$RUNTIME_LOCATION

    gcloud compute instance-templates delete $MIG_NAME \
      --project $PROJECT_ID \
      --region=$RUNTIME_LOCATION


A public document, Recreating an Apigee instance with zero downtime, has since been released. It details the prerequisites and the steps to perform the re-creation using the scripts hosted at https://github.com/apigee/apigee-x-pupi-migration
