- Google Cloud

- Articles & Information

- Cloud Product Articles

- Mainframe Connector: Mainframe to BigQuery Data Mi...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Article History

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

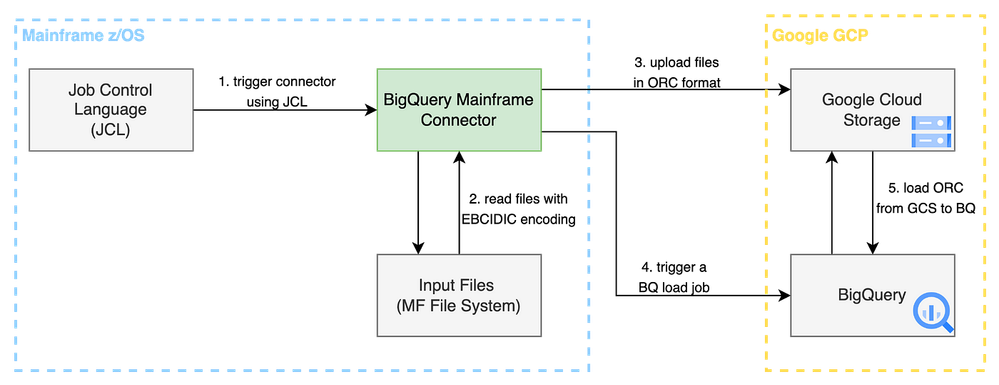

IBM Mainframes are high-performance computers with large amounts of memory and data processors. They can process billions of simple calculations and transactions in real time. IBM mainframes have been around since the 1950s and are still vital for many organizations. In recent years many companies that rely on mainframes have been working towards migrating to the cloud.

Migrating data from a mainframe to the cloud can be a daunting task, but it’s one that can offer many benefits, such as increased agility, scalability, and cost savings. Google’s Mainframe Connector, an open source product, makes this task almost effortless.

Google’s Mainframe Connector is a suite of tools and services that helps businesses migrate their mainframe data to Google Cloud. It offers several key functionalities:

- Simplified data transfer: Moves data from mainframe to Google Cloud storage services like Cloud Storage and BigQuery.

- Batch job integration: Submits BigQuery jobs from mainframe batch jobs defined by Job Control Language (JCL).

- Reduced MIPs usage: Utilizes Java Virtual Machine (JVM) to minimize mainframe processor workload during data transfer.

- Streaming transformation: Transcodes COBOL binary, packed decimal, and EBCDIC data to formats compatible with Google Cloud services like BigQuery.

Installation steps

JARs

The first step is to download the prebuilt JARs from these locations.

https://storage.googleapis.com/mainframe-connector-release/5.8.0/mainframe-connector-5.8.0.tar.gz

Service Account keyfile

Visit the Service Accounts web console to create a service account and download a json keyfile.

To create a keyfile, click the three dots to the right of the service account, click "Manage Keys" then "Add Key" then "Create new key", select "JSON" and click "CREATE".

Unix filesystem

Keyfile

There's no default path for storing the keyfile in z/OS USS. You should choose a path that is readable only to users who need the ability to act as the service account. Use FTP in binary mode to upload the JSON key file. The keyfile should be stored on the filesystem unmodified from its original UTF-8 encoding. Copy the JSON keyfile to the unix filesystem using binary transfer mode and update STDENV within the BQSH JCL procedure. Specify the path to the JSON keyfile on the line that begins “export GKEYFILE=”.

The keyfile should not be readable from USS if it was transferred correctly.

JAR file

The default suggested path to deploy the JAR file is:

/opt/google/mainframe-connector/[version]/

Use FTP in binary mode to upload the jar file to this location or a path chosen by a site administrator. Command “jar -tvf <jar file name>” can be used to validate that the JAR file was transferred correctly (the “t” option indicates to just list the contents of the JAR file).

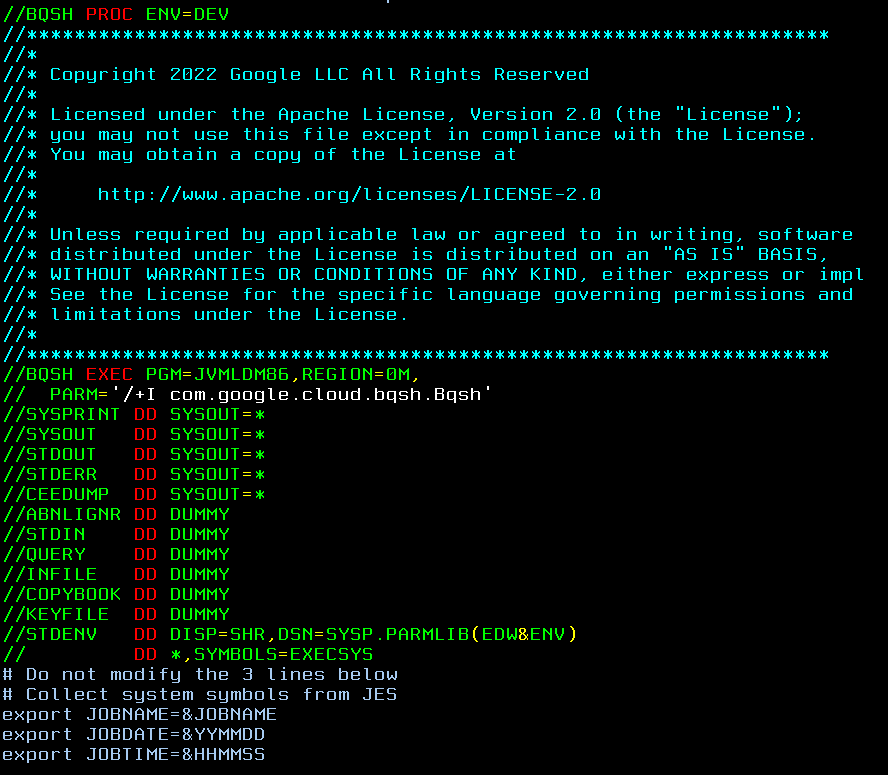

BQSH PROC

Make a copy of the BQSH JCL procedure (shown below) and make a few edits to set paths and site-specific environment variables.

//BQSH PROC

//*******************************************************************

//*

//* Copyright 2022 Google LLC All Rights Reserved

//*

//* Licensed under the Apache License, Version 2.0 (the "License");

//* you may not use this file except in compliance with the License.

//* You may obtain a copy of the License at

//*

//* http://www.apache.org/licenses/LICENSE-2.0

//*

//* Unless required by applicable law or agreed to in writing, software

//* distributed under the License is distributed on an "AS IS" BASIS,

//* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

//* See the License for the specific language governing permissions and

//* limitations under the License.

//*

//*******************************************************************

//BQSH EXEC PGM=JVMLDM86,REGION=0M,

// PARM='/+I com.google.cloud.bqsh.Bqsh'

//SYSPRINT DD SYSOUT=*

//SYSOUT DD SYSOUT=*

//STDOUT DD SYSOUT=*

//STDERR DD SYSOUT=*

//CEEDUMP DD SYSOUT=*

//ABNLIGNR DD DUMMY

//STDIN DD DUMMY

//QUERY DD DUMMY

//INFILE DD DUMMY

//COPYBOOK DD DUMMY

//KEYFILE DD DUMMY

//STDENV DD *,SYMBOLS=EXECSYS

# Service Account Keyfile

# Edit the line below to specify a unix filesystem path where

# the service account keyfile is stored.

# The service account should be granted Storage, BigQuery and Logging permissions.

export GKEYFILE="/path/to/keyfile.json"

# Path to directory containing google jar files

# Edit this to set actual path selected for your site

# it's recommended to have a path with a version identifier

# and create a symlink to the directory of the latest version

GOOGLE_DIR="/opt/google/mainframe-connector/latest/lib"

GOOGLE_CLASSPATH="$GOOGLE_DIR/*"

# Do not modify the 3 lines below

# Collect system symbols from JES

export JOBNAME=&JOBNAME

export JOBDATE=&YYMMDD

export JOBTIME=&HHMMSS

# IBM JZOS JDK Location

JH="/usr/lpp/java/J8.0_64"

export JAVA_HOME="$JH"

export PATH="/bin:$JH/bin"

# Log Level

export BQSH_ROOT_LOGGER=DEBUG

# Binary Data Sets

# Uncomment the line below to set a default output bucket for scp.

# The DSN of the input file is used as the object name.

# this may greatly reduce effort across many job steps

#export GCSDSNURI="gs://[BUCKET]/[PREFIX]"

# Generational Data Sets

# Uncomment the line below to set a default output bucket for scp GDG datasets.

# The Cloud GDG feature emulates a GDG dataset in a versioned object.

# Cloud Storage objects take precedence over local DSN when this is set.

#export GCSGDGURI="gs://[BUCKET]/[PREFIX]"

# Uncomment the line below to set a default output bucket for the gszutil command.

#export GCSOUTURI="gs://[BUCKET]/[PREFIX]"

# Mainframe Connector gRPC service

# Uncomment and edit the lines below to set the Hostname or IP Address and

# port of the gRPC data set transcoding service.

# The gRPC service converts z/OS datasets to ORC format on VMs running in

# Google Cloud VPC. This is strongly recommended when processing high volumes

# of data.

#export SRVREMOTE=

#export SRVPORT=

# Native Libraries

JL="$JH/lib"

LP="/lib:/usr/lib:$JH/bin:$JL/s390x:$JL/s390x/j9vm:$JH/bin/classic"

export LIBPATH="$LP:/usr/lib/java_runtime64"

# Java Classpath

CP="$JL:$JL/ext:/usr/include/java_classes/*"

export CLASSPATH="$CP:GOOGLE_CLASSPATH"

# JVM options

IJO="-Xms512m -Xmx512m -Xcompressedrefs -Djava.net.preferIPv4Stack=true"

export IBM_JAVA_OPTIONS="$IJO"

export JZOS_MAIN_ARGS=""

/*

// PEND

Required settings:

- GKEYFILE environment variable must specify path to json keyfile

- GOOGLE_DIR environment variable must specify path to directory containing jar file(s)

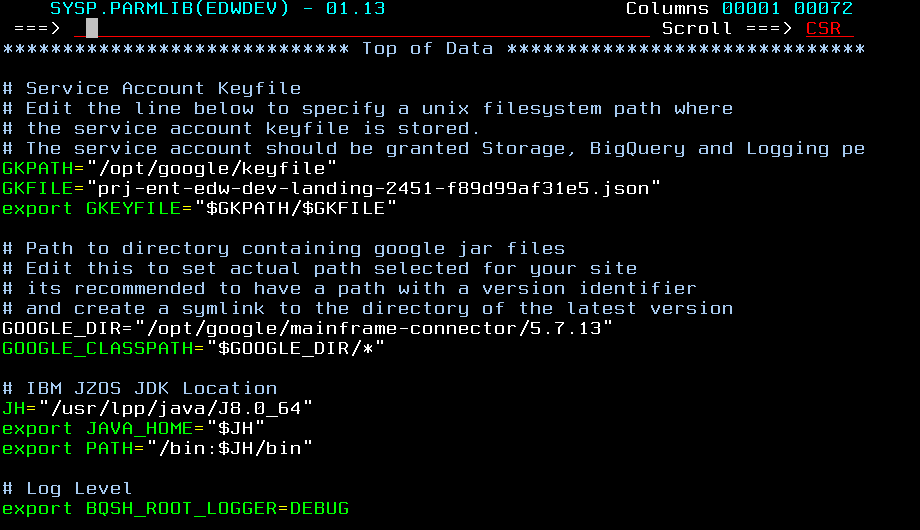

- See an example of a BQSH procedure below:

The ENV symbolic will be used to point to the Java standard environment statements and are Google Cloud environment specific. This way multiple procedures are not required for each environment and should be the easiest for the Development teams to utilize.

If changes are required for a particular environment (new JAR file, different IBM Java version, change in keyfile, etc), then just the member specific to that Google Cloud environment in system parmlib library (e.g. SYSP.PARMLIB) will need to be updated.

Listed below is an example of the DEV PARMLIB member and this shows how the keyfile and mainframe connector software jar are referenced.

If changes are required to the BQSH procedure in the future, then a newer version could be staged in the lower environment PROCLIB library, which is concatenated before system library (SYSP.PROCLIB), so the changes could be vetted against those lower regions before updating the procedure in SYSP.PROCLIB.

This method avoids the need for development teams to change their JCL/procedures to point to a new updated procedure during testing.

Enabling debug logging

Debug logging can be enabled by editing the BQSH Procedure and setting the environment variable BQSH_ROOT_LOGGER=DEBUG.

Debug logging can be disabled by commenting out the line or setting the variable to anything other than DEBUG.

RACF permissions

If you see job log messages ‘INSUFFICIENT ACCESS AUTHORITY’ then you will need to grant the following permissions required by IBMJCECCA. TLS is used on all requests made to Cloud APIs so this message will appear immediately if permissions have not been granted.

- CSFIQF (ICSF Query Facility)

- CSFRNG (Random Number Generate)

- CSFRNGL (Random Number Generate Long)

- CSFPKI (PKA Key Import)

- CSFDSG (Digital Signature Generate)

- CSFDSV (Digital Signature Verify)

Service Account permissions

At the project level, the service account should be granted the following roles:

- Logs Writer

- BigQuery Job User

On your storage bucket, the service account should be granted the following roles:

- Storage Object Admin

On your BigQuery Dataset, the service account should be granted the following roles:

- BigQuery Data Editor

- BigQuery Read Session User

Connectivity requirements

The Mainframe Connector interacts with Cloud Storage, BigQuery, and the Cloud Logging API. VPC-SC policy should allow access to these services.

PubSub, Dataflow, and Dataproc APIs may also be used for additional integration between z/OS batch jobs and data pipelines in Cloud.

Provide the following information to the network administration team:

- IP subnets assigned to z/OS LPARs

- Google Cloud service accounts used by z/OS batch jobs

- Google Cloud project ids containing resources accessed by z/OS batch jobs

Ideally, Interconnect and VPC-SC will already be configured. The VPC-SC policy may need to be updated to allow access to specific BigQuery, Cloud Storage and Cloud Logging resources from the specified IP ranges. The specific configuration will be based on enterprise policy.

A few simplified examples of VPC-SC policy:

- Allow z/OS IP subnets to interact with any Google Cloud service, limited to resources within specified projects only

- Allow z/OS cloud service accounts to interact with only Cloud Storage, BigQuery, Cloud Logging APIs within specified projects only

Network configuration required to enforce VPC-SC:

- On-prem routers are configured to route z/OS outbound traffic to destination subnets within VPC networks and restricted.googleapis.com special domain via Interconnect or VPN.

- On-prem firewalls are configured to allow outbound traffic to VPC subnets (VMs) and restricted.googleapis.com 199.36.153.4/30 (Google API endpoints)

- On-prem firewalls are configured to deny all other outbound traffic to prevent bypass of VPC-SC.

Firewalls, Routers, DSNs

Tailor the mainframe IP files, and include rules in firewalls, routers, and DNS to allow traffic of the following.

Test the IP addresses below to ensure that traffic can go to Google Cloud.

ETC.IPNODES

- 199.36.153.4 www.googleapis.com

- 199.36.153.5 www.googleapis.com

- 199.36.153.6 www.googleapis.com

- 199.36.153.7 www.googleapis.com

- 199.36.153.4 oauth2.googleapis.com

- 199.36.153.5 oauth2.googleapis.com

- 199.36.153.6 oauth2.googleapis.com

- 199.36.153.7 oauth2.googleapis.com

- 127.0.0.1 LPAR1 (depending on LPAR configuration)

- 127.0.0.1 LPAR2

- 127.0.0.1 LPAR3

HOSTS.LOCAL

- HOST : 199.36.153.4, 199.36.153.5, 199.36.153.6, 199.36.153.7 : WWW.GOOGLEAPIS.COM ::::

- HOST : 199.36.153.4, 199.36.153.5, 199.36.153.6, 199.36.153.7 : OAUTH2.GOOGLEAPIS.COM ::::

TCPIP.DATA

- LOOKUP LOCAL DNS

How to use

After adding the PDS containing BQSH to your JCL’s library search path, you can write job steps using EXEC BQSH to invoke the Mainframe Connector. The simplest test is to use the scp command to upload a file to Cloud Storage. Another test is to use the gsutil cp command with a copybook to convert a file to ORC and bq load to load the ORC file into a BigQuery table.

This example has 3 steps:

- Upload COBOL dataset as ORC to a bucket

- Load ORC file to BigQuery table

- Query BigQuery table

//STEP01 EXEC BQSH

//INFILE DD DSN=<HLQ>.DATA.FILENAME,DISP=SHR

//COPYBOOK DD DISP=SHR,DSN=<HLQ>.COPYBOOK.FILENAME

//STDIN DD *

BUCKET=my-long-bucket-name-1234

gsutil cp --replace gs://$BUCKET/tablename.orc

/*

//COND02 IF (STEP01.RC = 0) THEN

//STEP02 EXEC BQSH

//STDIN DD *

PROJECT=my-long-project-name-1234

bq load --project_id=$PROJECT \

myproject:DATASET.TABLE \

gs://bucket/tablename.orc/*

/*

//COND03 IF (STEP02.RC = 0) THEN

//STEP03 EXEC BQSH

//QUERY DD DSN=<HLQ>.QUERY.FILENAME,DISP=SHR

//STDIN DD *

PROJECT=my-long-project-name-1234

bq query --project_id=$PROJECT \

myproject:DATASET.TABLE \

gs://bucket/tablename.orc/*

/*

Variables, such as project ids and bucket names, can also be placed in BQSH proclib and referenced across several JCL as environment variables, thus avoiding specifying them in each JCL.

Furthermore, this approach can provide seamless transition between prod and non-prod since environment specific variables are set it the environments BQSH proclib. In this example standard input is provided as in-stream data to the STDIN DD. Alternatively, users can provide this input via a DSN, making it easier to manage symbol substitution if needed.

Copybook restrictions

Currently, there are some restrictions on the COPYBOOK that you need to consider before using it.

- No level 66 (ALIAS) or 77 (STANDALONE) to be used

- Only PICTURE fields (no redefines)

- Only COMP is supported (e.g. no ALIGN, no OCCURS)

- All fields justified, no comment and only single level

Usage with BigQuery

Typical flow involves the following steps:

- Reading mainframe dataset,

- Transcoding dataset to ORC,

- Registering as an external table*

- Running a MERGE DML statement to load new incremental of data into target table

Note: If the dataset doesn't require further modifications after loading, then loading into a native table is a better option than loading into an external table.

bq query

Example JCL

//STEP03 EXEC BQSH

//QUERY DD DSN=<HLQ>.QUERY.FILENAME,DISP=SHR

//STDIN DD *

bq query --project_id=myproject \

myproject:DATASET.TABLE \

gs://bucket/tablename.orc/*

/*

Help text

query (gszutil-1.0.0)

Usage: query [options]

--help prints this usage text

--parameters_from_file <value>

Comma-separated query parameters in the form [NAME]:[TYPE]:[DDNAME]. An empty name creates a positional parameter. [TYPE] may be omitted to assume a STRING value in the form: name::ddname or ::ddname. NULL produces a null value.

--create_if_needed When specified, create a destination table. The default value is false.

-m, --allow_multiple_queries

When specified, allow multiple queries. The default value is false.

--allow_large_results When specified, enables large destination table sizes for legacy SQL queries.

--append_table When specified, append data to a destination table. The default value is false.

--batch When specified, run the query in batch mode. The default value is false.

--clustering_fields <value>

If specified, a comma-separated list of columns is used to cluster the destination table in a query. This flag must be used with the time partitioning flags to create either an ingestion-time partitioned table or a table partitioned on a DATE or TIMESTAMP column. When specified, the table is first partitioned, and then it is clustered using the supplied columns.

--destination_kms_key <value>

The Cloud KMS key used to encrypt the destination table data.

--destination_schema <value>

The path to a local JSON schema file or a comma-separated list of column definitions in the form [FIELD]:[DATA_TYPE],[FIELD]:[DATA_TYPE]. The default value is ''.

--destination_table <value>

The name of the destination table for writing query results. The default value is ''

--dryRun When specified, the query is validated but not run.

--external_table_definition <value>

The table name and schema definition used in an external table query. The schema can be a path to a local JSON schema file or a comma-separated list of column definitions in the form [FIELD]:[DATA_TYPE],[FIELD]:[DATA_TYPE]. The format for supplying the table name and schema is: [TABLE]::[PATH_TO_FILE] or [TABLE]::[SCHEMA]@[SOURCE_FORMAT]=[CLOUD_STORAGE_URI]. Repeat this flag to query multiple tables.

--label <value> A label to apply to a query job in the form [KEY]:[VALUE]. Repeat this flag to specify multiple labels.

--maximum_bytes_billed <value>

An integer that limits the bytes billed for the query. If the query goes beyond the limit, it fails (without incurring a charge). If not specified, the bytes billed are set to the project default.

--parameters <value> comma-separated query parameters in the form [NAME]:[TYPE]:[VALUE]. An empty name creates a positional parameter. [TYPE] may be omitted to assume a STRING value in the form: name::value or ::value. NULL produces a null value.

--replace If specified, overwrite the destination table with the query results. The default value is false.

--require_cache If specified, run the query only if results can be retrieved from the cache.

--require_partition_filter <value>

If specified, a partition filter is required for queries over the supplied table. This flag can only be used with a partitioned table.

--schema_update_option <value>

When appending data to a table (in a load job or a query job), or when overwriting a table partition, specifies how to update the schema of the destination table. Possible values include:

ALLOW_FIELD_ADDITION: Allow

new fields to be added

ALLOW_FIELD_RELAXATION: Allow relaxing REQUIRED fields to NULLABLE

--time_partitioning_expiration <value>

An integer that specifies (in seconds) when a time-based partition should be deleted. The expiration time evaluates to the partition's UTC date plus the integer value. A negative number indicates no expiration.

--time_partitioning_field <value>

The field used to determine how to create a time-based partition. If time-based partitioning is enabled without this value, the table is partitioned based on the load time.

--time_partitioning_type <value>

Enables time-based partitioning on a table and sets the partition type. Currently, the only possible value is DAY which generates one partition per day.

--use_cache <value> When specified, cache the query results. The default value is true.

--use_legacy_sql When set to false, runs a standard SQL query. The default value is false (uses Standard SQL).

--dataset_id <value> The default dataset to use for requests. This flag is ignored when not applicable. You can set the value to [PROJECT_ID]:[DATASET] or [DATASET]. If [PROJECT_ID] is missing, the default project is used. You can override this setting by specifying the --project_id flag. The default value is ''.

--debug_mode Set logging level to debug. The default value is false.

--job_id <value> The unique job ID to use for the request. If not specified in a job creation request, a job ID is generated. This flag applies only to commands that create jobs: cp, extract, load, and query.

--location <value> A string corresponding to your region or multi-region location.

--project_id <value> The project ID to use for requests. The default value is ''.

--synchronous_mode <value>

If set to true, wait for the command to complete before returning, and use the job completion status as the error code. If set to false, the job is created, and successful completion status is used for the error code. The default value is true.

--sync <value> If set to true, wait for the command to complete before returning, and use the job completion status as the error code. If set to false, the job is created, and successful completion status is used for the error code. The default value is true.

--stats_table <value> tablespec of table to insert stats

Notes

- Queries can be provided in a variety of ways: inline, separate dataset via DD, separate dataset via DSN.

- Custom parameterization tokens can be applied to the query datasets separately from what is provided by the connector. This requires a preceding job step to replace parameter tokens to produce the query dataset.

bq load

Example JCL

//STEP02 EXEC BQSH

//STDIN DD *

bq load --project_id=myproject \

myproject:dataset.table \

gs://mybucket/tablename.orc/*

/*

Help text

load (gszutil-1.0.0)

Usage: load [options] tablespec path [schema]

--help prints this usage text

tablespec Tablespec in format [PROJECT]:[DATASET].[TABLE]

path Comma-separated source URIs in format gs://bucket/path,gs://bucket/path

schema Comma-separated list of column definitions in the form [FIELD]:[DATA_TYPE],[FIELD]:[DATA_TYPE].

--allow_jagged_rows When specified, allow missing trailing optional columns in CSV data.

--allow_quoted_newlines When specified, allow quoted newlines in CSV data.

--autodetect When specified, enable schema auto-detection for CSV and JSON data.

--destination_kms_key <value>

The Cloud KMS key for encryption of the destination table data.

-E, --encoding <value> The character encoding used in the data. Possible values include:

ISO-8859-1 (also known as Latin-1) UTF-8

-F, --field_delimiter <value>

The character that indicates the boundary between columns in the data. Both \t and tab are allowed for tab delimiters.

--ignore_unknown_values When specified, allow and ignore extra, unrecognized values in CSV or JSON data.

--max_bad_records <value>

An integer that specifies the maximum number of bad records allowed before the entire job fails. The default value is 0. At most, five errors of any type are returned regardless of the --max_bad_records value.

--null_marker <value> An optional custom string that represents a NULL value in CSV data.

--clustering_fields <value>

If specified, a comma-separated list of columns is used to cluster the destination table in a query. This flag must be used with the time partitioning flags to create either an ingestion-time partitioned table or a table partitioned on a DATE or TIMESTAMP column. When specified, the table is first partitioned, and then it is clustered using the supplied columns.

--projection_fields <value>

If used with --source_format set to DATASTORE_BACKUP, indicates which entity properties to load from a Cloud Datastore export as a comma-separated list. Property names are case sensitive and must refer to top-level properties. The default value is ''. This flag can also be used with Cloud Firestore exports.

--quote <value> The quote character to use to enclose records. The default value is " which indicates no quote character.

--replace When specified, existing data is erased when new data is loaded. The default value is false.

--append_table When specified, append data to a destination table. The default value is false.

--schema <value> Comma-separated list of column definitions in the form [FIELD]:[DATA_TYPE],[FIELD]:[DATA_TYPE].

--schema_update_option <value>

When appending data to a table (in a load job or a query job), or when overwriting a table partition, specifies how to update the schema of the destination table. Possible values include:

ALLOW_FIELD_ADDITION: Allow new fields to be added

ALLOW_FIELD_RELAXATION: Allow relaxing REQUIRED fields to NULLABLE

Repeat this flag to specify multiple schema update options.

--skip_leading_rows <value>

An integer that specifies the number of rows to skip at the beginning of the source file.

--source_format <value> The format of the source data. Possible values include: CSV NEWLINE_DELIMITED_JSON AVRO DATASTORE_BACKUP PARQUET ORC (Default: ORC)

--time_partitioning_expiration <value>

An integer that specifies (in seconds) when a time-based partition should be deleted. The expiration time evaluates to the partition's UTC date plus the integer value. A negative number indicates no expiration.

--time_partitioning_field <value>

The field used to determine how to create a time-based partition. If time-based partitioning is enabled without this value, the table is partitioned based on the load time.

--time_partitioning_type <value>

Enables time-based partitioning on a table and sets the partition type. Currently, the only possible value is DAY which generates one partition per day.

--require_partition_filter <value>

If specified, a partition filter is required for queries over the supplied table. This flag can only be used with a partitioned table.

--use_avro_logical_types <value>

If sourceFormat is set to AVRO, indicates whether to convert logical types into their corresponding types (such as TIMESTAMP) instead of only using their raw types (such as INTEGER).

--dataset_id <value> The default dataset to use for requests. This flag is ignored when not applicable. You can set the value to [PROJECT_ID]:[DATASET] or [DATASET]. If [PROJECT_ID] is missing, the default project is used. You can override this setting by specifying the --project_id flag. The default value is ''.

--debug_mode <value> Set logging level to debug. The default value is false.

--job_id <value> The unique job ID to use for the request. If not specified in a job creation request, a job ID is generated. This flag applies only to commands that create jobs: cp, extract, load, and query.

--location <value> A string corresponding to your region or multi-region location.

--project_id <value> The project ID to use for requests. The default value is ''.

--synchronous_mode <value>

If set to true, wait for the command to complete before returning, and use the job completion status as the error code. If set to false, the job is created, and successful completion status is used for the error code. The default value is true.

--sync <value> If set to true, wait for the command to complete before returning, and use the job completion status as the error code. If set to false, the job is created, and successful completion status is used for the error code. The default value is true.

--stats_table <value> tablespec of table to insert stats

bq mk

This command is most commonly used to create external tables or native tables that need partitioning and clustering to be set up.

Help text

mk (gszutil-1.0.0)

Usage: mk [options] tablespec

--help prints this usage text

tablespec [PROJECT_ID]:[DATASET].[TABLE]

--clustering_fields <value>

A comma-separated list of column names used to cluster a table. This flag is currently available only for partitioned tables. When specified, the table is partitioned and then clustered using these columns.

-d, --dataset When specified, creates a dataset. The default value is false.

--default_partition_expiration <value>

An integer that specifies the default expiration time, in seconds, for all partitions in newly-created partitioned tables in the dataset. A partition's expiration time is set to the partition's UTC date plus the integer value. If this property is set, it overrides the dataset-level default table expiration if it exists. If you supply the --time_partitioning_expiration flag when you create or update a partitioned table, the table-level partition expiration takes precedence over the dataset-level default partition expiration.

--default_table_expiration <value>

An integer that specifies the default lifetime, in seconds, for newly-created tables in a dataset. The expiration time is set to the current UTC time plus this value.

--description <value> The description of the dataset or table.

--destination_kms_key <value>

The Cloud KMS key used to encrypt the table data.

--display_name <value> The display name for the transfer configuration. The default value is ''.

--expiration <value> An integer that specifies the table or view's lifetime in milliseconds. The expiration time is set to the current UTC time plus this value.

-e, --external_table_definition <value>

Specifies a table definition to used to create an external table. The format of an inline definition is format=uri. Example: ORC=gs://bucket/table_part1.orc/*,gs://bucket/table_part2.orc/*

-f, --force When specified, if a resource already exists, the exit code is 0. The default value is false.

--label <value> A label to set on the table. The format is [KEY]:[VALUE]. Repeat this flag to specify multiple labels.

--require_partition_filter <value>

When specified, this flag determines whether to require a partition filter for queries over the supplied table. This flag only applies to partitioned tables. The default value is true.

--schema <value> The path to a local JSON schema file or a comma-separated list of column definitions in the form [FIELD]:[DATA_TYPE],[FIELD]:[DATA_TYPE]. The default value is ''.

-t, --table When specified, create a table. The default value is false.

--time_partitioning_expiration <value>

An integer that specifies (in seconds) when a time-based partition should be deleted. The expiration time evaluates to the partition's UTC date plus the integer value. A negative number indicates no expiration.

--time_partitioning_field <value>

The field used to determine how to create a time-based partition. If time-based partitioning is enabled without this value, the table is partitioned based on the load time.

--time_partitioning_type <value>

Enables time-based partitioning on a table and sets the partition type. Currently, the only possible value is DAY which generates one partition per day.

--use_legacy_sql When set to false, uses a standard SQL query to create a view. The default value is false (uses Standard SQL).

--view When specified, creates a view. The default value is false.

--dataset_id <value> The default dataset to use for requests. This flag is ignored when not applicable. You can set the value to [PROJECT_ID]:[DATASET] or [DATASET]. If [PROJECT_ID] is missing, the default project is used. You can override this setting by specifying the --project_id flag. The default value is ''.

--debug_mode Set logging level to debug. The default value is false.

--job_id <value> The unique job ID to use for the request. If not specified in a job creation request, a job ID is generated. This flag applies only to commands that create jobs: cp, extract, load, and query.

--location <value> A string corresponding to your region or multi-region location.

--project_id <value> The project ID to use for requests. The default value is ''.

--synchronous_mode <value>

If set to true, wait for the command to complete before returning, and use the job completion status as the error code. If set to false, the job is created, and successful completion status is used for the error code. The default value is true.

--sync <value> If set to true, wait for the command to complete before returning, and use the job completion status as the error code. If set to false, the job is created, and successful completion status is used for the error code. The default value is true.

--stats_table <value> tablespec of table to insert stats

bq rm

This is used to permanently delete resources. It’s a powerful command, so it’s important to use it with caution and be aware of its implications.

Help text

rm (gszutil-1.0.0)

Usage: rm [options] tablespec

--help prints this usage text

tablespec [PROJECT_ID]:[DATASET].[TABLE]

-d, --dataset When specified, deletes a dataset. The default value is false.

-f, --force When specified, deletes a table, view, model, or dataset without prompting. The default value is false.

-m, --model <value> When specified, deletes a BigQuery ML model.

-r, --recursive When specified, deletes a dataset and any tables, table data, or models in it. The default value is false.

-t, --table When specified, deletes a table. The default value is false.

--dataset_id <value> The default dataset to use for requests. This flag is ignored when not applicable. You can set the value to [PROJECT_ID]:[DATASET] or [DATASET]. If [PROJECT_ID] is missing, the default project is used. You can override this setting by specifying the --project_id flag. The default value is ''.

--debug_mode Set logging level to debug. The default value is false.

--job_id <value> The unique job ID to use for the request. If not specified in a job creation request, a job ID is generated. This flag applies only to commands that create jobs: cp, extract, load, and query.

--location <value> A string corresponding to your region or multi-region location.

--project_id <value> The project ID to use for requests. The default value is ''.

--synchronous_mode <value>

If set to true, wait for the command to complete before returning, and use the job completion status as the error code. If set to false, the job is created, and successful completion status is used for the error code. The default value is true.

--sync <value> If set to true, wait for the command to complete before returning, and use the job completion status as the error code. If set to false, the job is created, and successful completion status is used for the error code. The default value is true.

Usage with Cloud Storage

The primary use case is copying a mainframe dataset directly to GCS, then invoking the associated gRPC server to transcode the mainframe dataset to ORC.

The gRPC server allows the connector to run on a server the transcoding of binary files to ORC. This significantly reduces the level of MIPs consumed by this tool.

gsutil cp

The implementation of gsutil cp included with the BQSH interpreter requires a COBOL copybook to be provided with the COPYBOOK DD and a COBOL data file to be provided via the INFILE DD. The utility will open a configurable number of parallel connections to the Cloud Storage API and transcode the COBOL dataset to the columnar and GZIP compressed ORC file format on the fly. Users can expect about a 35% compression ratio.

Example JCL

//STEP01 EXEC BQSH

//INFILE DD DSN=<HLQ>.DATA.FILENAME,DISP=SHR

//COPYBOOK DD DISP=SHR,DSN=<HLQ>.COPYBOOK.FILENAME

//STDIN DD *

BUCKET=my-long-bucket-name-1234

gsutil cp --replace gs://$BUCKET/tablename.orc

/*

Help text from gsutil cp

gsutil (gszutil-1.0.0)

Usage: gsutil [cp|rm] [options] destinationUri

--help prints this usage text

Command: cp [options]

Upload Binary MVS Dataset to GCS

--replace delete before uploading

--partSizeMB <value> target part size in megabytes (default: 256)

--batchSize <value> blocks per batch (default: 1000)

-p, --parallelism <value>

number of concurrent writers (default: 6)

--timeOutMinutes <value>

timeout in minutes (default: 60)

gsutil rm

Example JCL

//STEP01 EXEC BQSH

//INFILE DD DSN=<HLQ>.DATA.FILENAME,DISP=SHR

//COPYBOOK DD DISP=SHR,DSN=<HLQ>.COPYBOOK.FILENAME

//STDIN DD *

BUCKET=my-long-bucket-name-1234

gsutil cp --replace gs://$BUCKET/tablename.orc

/*

//COND02 IF (STEP01.RC = 0) THEN

//STEP02 EXEC BQSH

//STDIN DD *

PROJECT=my-long-project-name-1234

bq load --project_id=$PROJECT \

myproject:DATASET.TABLE \

gs://bucket/tablename.orc/*

/*

//COND03 IF (STEP02.RC = 0) THEN

//STEP03 EXEC BQSH

//QUERY DD DSN=<HLQ>.QUERY.FILENAME,DISP=SHR

//STDIN DD *

PROJECT=my-long-project-name-1234

bq query --project_id=$PROJECT \

myproject:DATASET.TABLE \

gs://bucket/tablename.orc/*

/*

Help text from gsutil rm

Usage: gsutil [cp|rm] [options] destinationUri

--help prints this usage text

Command: rm [options]

Delete objects in GCS

-r, --recursive delete directory

-f, --force delete without use interaction (always true)

destinationUri Destination URI (gs://bucket/path)

--dataset_id <value> The default dataset to use for requests. This flag is ignored when not applicable. You can set the value to [PROJECT_ID]:[DATASET] or [DATASET]. If [PROJECT_ID] is missing, the default project is used. You can override this setting by specifying the --project_id flag. The default value is ''.

--location <value> A string corresponding to your region or multi-region location.

--project_id <value> The project ID to use for requests. The default value is ''.

--allow_non_ascii allow non ascii characters

--stats_table <value> tablespec of table to insert stats

--max_error_pct <value> job failure threshold for row decoding errors (default: 0.0)

gszutil

gszutil assumes the gRPC server is used. MIPS consumption is significantly lower when using a gRPC server, therefore we recommend using this command prod environment to convert binary files located in GCS into ORC format.

Example JCL

//STEP01 EXEC BQSH

//INFILE DD DSN=<HLQ>.DATA.FILENAME,DISP=SHR

//COPYBOOK DD DISP=SHR,DSN=<HLQ>.COPYBOOK.FILENAME

//STDIN DD *

gszutil --project_id foo --location US gs://bucket/bar.orc

/*

In this example --inDsn is assumed to be INFILE DD and --cobDsn is assumed to be COPYBOOK DD.

Options --remoteHost and --remotePort default to SRVHOSTNAME and SRVPORT. environment variables respectively.

Help text from gszutil

gszutil (gszutil-1.0.0)

Usage: gszutil [options] gcsOutUri

--help prints this usage text

--project_id <value> (required) The project ID to use for requests. The default value is ''.

--dataset_id <value> The default dataset used for the stats table. This flag is ignored when not applicable. You can set the value to [PROJECT_ID]:[DATASET] or [DATASET]. If [PROJECT_ID] is missing, the default project is used. You can override this setting by specifying the --project_id flag. The default value is ''.

--location <value> (required) A string corresponding to your region or multi-region location.

--cobDsn<value> DSN to read copybook from. If not provided, copybook will be read from DD:COPYBOOK

--inDsn<value> DSN of data set to be transcoded to ORC. Defaults to INFILE DD

--gcsOutUri<value> Cloud Storage path for output ORC files (format: gs://BUCKET/PREFIX)

--remoteHost<value> Remote host or ip address (defaults to env var: SRVHOSTNAME)

--remotePort<value> Remote port (default to env var: SRVPORT)

--pic_t_charset<value> Charset used for encoding and decoding international strings, used with PIC T copybook type, default is EBCDIC

--timeOutMinutes<value> Timeout in minutes for GRecvExportGrpc call. (default for GCS: 90 minutes)

--keepAliveTimeInSeconds<value> Keep alive timeout in seconds for http channel. (default: 480 seconds)

--stats_table<value> tablespec of table to insert stats

That’s a lot of information about the Mainframe Connector. I hope you find the blog useful. If you need any help with using the tool, please feel free to leave a comment below. Thanks!

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Very comprehensive - thanks for sharing @jigneshrawal @Willy_U!

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Thank you @Lauren_vdv.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Nice article @jigneshrawal

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Thank you @maheshgupta

Twitter

Twitter