- Improving API Performance Using Caching with Apige...

Are you looking to improve response times, lower costs, or decrease load on your backend services while still being able to scale? As a request from a client application traverses the various layers involved (e.g., browsers, Content Delivery Networks (CDNs), proxies, services), you can employ various caching solutions to help achieve these goals. In this article, you will learn to use Apigee’s caching capabilities to implement caching within a proxy.

Using HTTP caching headers, you can instruct a browser to cache content locally, or CDNs to cache content at Points of Presence (PoPs). Apigee’s support for caching goes beyond HTTP header based caching and can be thought of as an application caching layer providing a declarative / low-code means of managing encrypted cache entries. With Apigee, you can add caching capabilities in cases that were previously impossible.

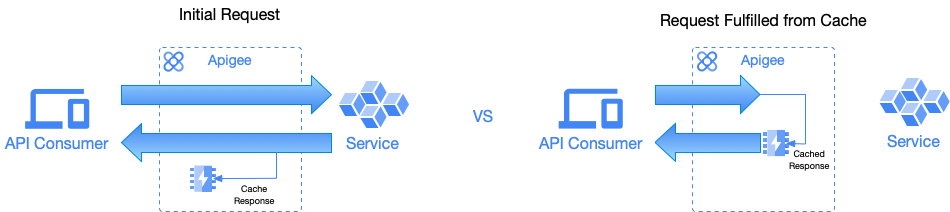

In its simplest form, a cache eliminates the need to let a request flow through to the target backend if it can be fulfilled from a previously cached value, improving response times and reducing load on the backend, and maybe costs too.

Figure 1: Response caching and request fulfillment from cache

In cases where the target backend service is billed per request, fewer requests can mean lower costs. For example, Cloud Functions pricing includes charges based on invocations, which are billed at a flat rate regardless of the source of the invocation.

Apigee allows you to cache the responses of backend services, or any objects your proxy requires, across multiple requests/responses. The latter is important because an Apigee proxy can execute policies that retrieve data from external systems, extract data from the request or response, or even execute custom code as part of a request's flow. For example, a proxy might call the Google Maps API to convert a city/state combination into geographic coordinates, and then include those coordinates in the request to the target backend service.

We’ll dive into the following use cases in this article:

- Caching backend service responses

- General purpose object caching

All the code related to this article can be found in the basic-caching folder of the apigee-samples GitHub repo. The target backend service that Apigee will be proxying traffic to for this example is the Google Books Volume API. We’ll start with a walkthrough of the solution. If you’d rather start by deploying the sample, skip ahead to the Deployment section. Let’s get started.

Caching backend service responses

Response caching is a good technique to use when backend data changes infrequently. In Apigee it is a three-step process built on the ResponseCache policy: configure the policy, attach it to the proxy request flow, and attach it to the target response flow. Together, the two attachments provide the behavior described in Figure 1 above: fulfilling a request from the cache when possible, and caching the backend response otherwise.

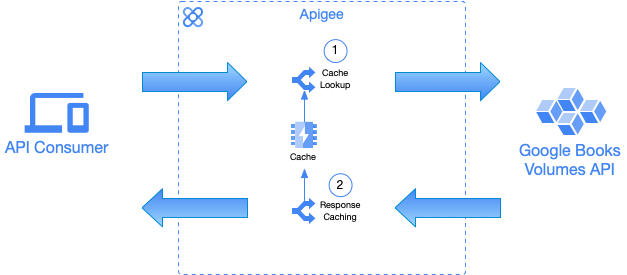

Figure 2: Sample API proxy caching behavior

In this section, we’ll walk through the proxy definition that is available in the basic-caching folder of the accompanying apigee-samples GitHub repo.

To follow along, you can choose to either use the Basic Caching Cloud Shell Tutorial or clone the apigee-samples GitHub repo, using the following command:

git clone https://github.com/GoogleCloudPlatform/apigee-samples.git

Let’s cover the ResponseCache policy first.

<ResponseCache name="RC-CacheVolumes">

<Scope>Exclusive</Scope>

<CacheKey>

<KeyFragment ref="request.uri" type="string"/>

</CacheKey>

<ExcludeErrorResponse>true</ExcludeErrorResponse>

<ExpirySettings>

<TimeoutInSeconds>300</TimeoutInSeconds>

</ExpirySettings>

<SkipCacheLookup>NOT (request.verb JavaRegex "(GET|HEAD)") or request.header.x-bypass-cache = "true"</SkipCacheLookup>

<SkipCachePopulation>NOT (request.verb JavaRegex "(GET|HEAD)")</SkipCachePopulation>

</ResponseCache>

The ResponseCache policy is highly configurable through various elements. We’ll highlight a few here; refer to the ResponseCache policy docs for further details. Each item stored in the cache is referenced by a unique cache key, so take care when deciding how the key is composed. For example, if the response contains data unique to the user making the request, include an attribute that identifies the user as part of the key. This avoids a scenario where two users end up seeing each other’s data.
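As a minimal sketch of the per-user key described above, a second KeyFragment can be added alongside the request URI. The policy name and the `x-user-id` header are assumptions for illustration only and are not part of the sample; in practice you would likely reference a variable populated from a verified credential rather than a client-supplied header.

```xml
<!-- Sketch only: RC-CacheVolumesPerUser and x-user-id are hypothetical names -->
<ResponseCache name="RC-CacheVolumesPerUser">
  <Scope>Exclusive</Scope>
  <CacheKey>
    <!-- Same fragment as the sample: path plus query parameters -->
    <KeyFragment ref="request.uri" type="string"/>
    <!-- Additional fragment so each user gets a separate cache entry -->
    <KeyFragment ref="request.header.x-user-id" type="string"/>
  </CacheKey>
  <ExpirySettings>
    <TimeoutInSeconds>300</TimeoutInSeconds>
  </ExpirySettings>
</ResponseCache>
```

The trade-off is a lower hit rate: identical requests from different users no longer share an entry.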

Multiple elements are used to determine the resulting cache key. Let’s start with the prefix. It is applied to the final cache key as a means of organizing cache items into namespaces, and can be configured in one of two ways.

- <Scope> - determines the prefix used for the cache key based on a predefined set of values. In the example above, “Exclusive” is used, which is the most specific and presents minimal risk of namespace collisions within a given cache. Other supported values are Global (shared across all API proxies deployed in the environment), Application (proxy name), Proxy (ProxyEndpoint name), and Target (TargetEndpoint name).

- <CacheKey>/<Prefix> - this element overrides Scope with a literal value of your own choosing.

Once the prefix is determined, you specify the values to concatenate with it using one or more <CacheKey>/<KeyFragment> elements. Each fragment can be either a literal value or a reference to a variable. For example, in the sample above, we reference the request.uri flow variable so that the proxy base path plus the remainder of the address, including query parameters, becomes part of the cache key.
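To make the prefix and fragment options concrete, here is a hedged sketch of a CacheKey that uses a literal prefix, a literal fragment, and a variable reference. The values `books` and `volumes` are made up for illustration and do not appear in the sample.

```xml
<!-- Sketch only: "books" and "volumes" are hypothetical literals -->
<CacheKey>
  <!-- Literal prefix; overrides whatever <Scope> would have produced -->
  <Prefix>books</Prefix>
  <!-- Literal fragment -->
  <KeyFragment>volumes</KeyFragment>
  <!-- Fragment resolved from a flow variable at runtime -->
  <KeyFragment ref="request.uri" type="string"/>
</CacheKey>
```

Based on the cache key documentation, the parts are joined with double underscores, so this should yield a key shaped like `books__volumes__<value of request.uri>`. A fixed prefix is mainly useful when several proxies need to read the same entries; otherwise the default scope is the safer choice.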

If the API supports various media types, you can use the UseAcceptHeader element to prevent a client from getting a media type they did not ask for. The cache key is appended with values from the Accept headers (Accept, Accept-Encoding, Accept-Language, and Accept-Charset) so that a distinct key is used for each requested media type.
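A minimal sketch of enabling this behavior on the sample policy follows. Note that the policy reference spells the element `UseAcceptHeader`; the rest mirrors the configuration shown earlier.

```xml
<ResponseCache name="RC-CacheVolumes">
  <Scope>Exclusive</Scope>
  <CacheKey>
    <KeyFragment ref="request.uri" type="string"/>
  </CacheKey>
  <!-- Fold the Accept-family header values into the cache key -->
  <UseAcceptHeader>true</UseAcceptHeader>
  <ExpirySettings>
    <TimeoutInSeconds>300</TimeoutInSeconds>
  </ExpirySettings>
</ResponseCache>
```

With this set, a request sent with `Accept: application/json` and one sent with `Accept: application/xml` for the same URI would be cached under different keys.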

We’ve chosen to cache only GET and HEAD requests (for some background, see IETF RFC 9110), using the SkipCacheLookup and SkipCachePopulation elements. The sample also demonstrates skipping the cache lookup with a custom header (X-Bypass-Cache), and caching only non-error responses with the ExcludeErrorResponse element.

The last element we’ll cover is the UseResponseCacheHeaders element. When it is set to true, Apigee considers the HTTP caching headers on the target backend’s response (Cache-Control and Expires), comparing their values with those set by <ExpirySettings>, when setting the "time to live" (TTL) of the response in the cache. Refer to Setting cache entry expiration for more details.
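As a sketch, the element sits alongside the other ResponseCache settings. The sample policy shown earlier does not set it, so treat this as an illustrative variation rather than the sample's configuration.

```xml
<ResponseCache name="RC-CacheVolumes">
  <Scope>Exclusive</Scope>
  <CacheKey>
    <KeyFragment ref="request.uri" type="string"/>
  </CacheKey>
  <!-- Let the backend's HTTP caching headers influence the entry's TTL -->
  <UseResponseCacheHeaders>true</UseResponseCacheHeaders>
  <!-- Still configured; compared with the header values when the TTL is set -->
  <ExpirySettings>
    <TimeoutInSeconds>300</TimeoutInSeconds>
  </ExpirySettings>
</ResponseCache>
```

This is worth considering when the backend already knows how long its data stays fresh and says so in its response headers.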

Now that the ResponseCache policy is configured, we need to ensure it executes during request processing to determine whether the item is already in the cache. If it is, any additional request processing is short-circuited. This is accomplished by attaching the ResponseCache policy to the ProxyEndpoint’s request PreFlow.

<ProxyEndpoint name="default">

<PreFlow name="PreFlow">

<Request>

<Step>

<Name>RC-CacheVolumes</Name>

</Step>

</Request>

<Response/>

</PreFlow>

<Flows/>

<PostFlow/>

<HTTPProxyConnection>

<BasePath>/v1/samples/books</BasePath>

</HTTPProxyConnection>

<RouteRule name="default">

<TargetEndpoint>default</TargetEndpoint>

</RouteRule>

</ProxyEndpoint>

The last step is to attach the ResponseCache policy to the TargetEndpoint’s response PostFlow, so that it executes during response processing and places the target backend’s response into the cache.

<TargetEndpoint name="default">

<PreFlow name="PreFlow">

<Request/>

<Response/>

</PreFlow>

<Flows/>

<PostFlow name="PostFlow">

<Request/>

<Response>

<Step>

<Name>RC-CacheVolumes</Name>

</Step>

</Response>

</PostFlow>

<HTTPTargetConnection>

<URL>https://www.googleapis.com/books/v1/volumes</URL>

</HTTPTargetConnection>

</TargetEndpoint>

Now that we have explained how to configure the ResponseCache policy and how its configuration options work, you’re ready to deploy the proxy.

First set the following environment variables:

- PROJECT - the project where your Apigee organization is located

- APIGEE_HOST - the externally reachable hostname of the Apigee environment group that contains APIGEE_ENV

- APIGEE_ENV - the Apigee environment where the demo resources should be created

export PROJECT="<GCP_PROJECT_ID>"

export APIGEE_HOST="<ENVIRONMENT_GROUP_HOSTNAME>"

export APIGEE_ENV="<APIGEE_ENVIRONMENT_NAME>"

To deploy the proxy, execute the following commands (remember the sample is in the basic-caching folder):

cd apigee-samples/basic-caching

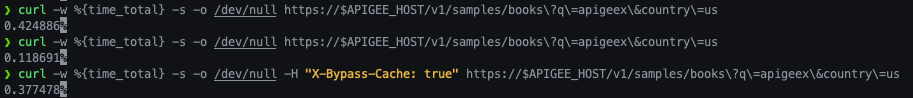

./deploy-basic-caching.sh

With the proxy deployed, you are ready to see the performance increase that caching provides. Run the following curl commands to compare performance.

curl -w "%{time_total}\n" -s -o /dev/null "https://$APIGEE_HOST/v1/samples/books?q=apigee&country=us"

curl -w "%{time_total}\n" -s -o /dev/null "https://$APIGEE_HOST/v1/samples/books?q=apigee&country=us"

curl -w "%{time_total}\n" -s -o /dev/null -H "X-Bypass-Cache: true" "https://$APIGEE_HOST/v1/samples/books?q=apigee&country=us"

As you can see below, the response time for the second request (i.e., the cached request) is considerably faster than the first. Performance conclusions should not be drawn from one or two transactions, but in the case of caching there is a clear advantage in reducing response time and in reducing the burden on backend systems and networks.

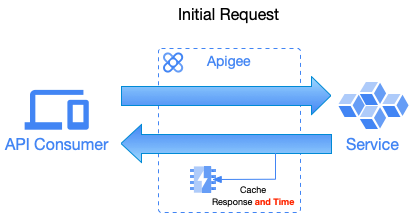

General purpose object caching

General purpose object caching allows you to cache arbitrary values, and gives you fine-grained control over removing items from the cache. For example, you may want to cache configuration or profile data that is unrelated to any particular request: a request routing table, an authorization ruleset, or the results of a lookup of dynamic rate limits done by a ServiceCallout. In these cases, you can leverage Apigee’s general purpose caching capabilities with the PopulateCache, LookupCache, and InvalidateCache policies. To keep things simple, we’re going to expand upon the above example by caching the date/time when the cache was populated as an additional value, and returning it as a header. Let’s get started!

Figure 3: Caching target backend response and current time

As depicted above, we’re going to change the flow to also add caching the time. To do this, we’re going to use the PopulateCache policy to cache the system.time flow variable.

<PopulateCache name="PC-SetCachedOnDateTime">

<CacheKey>

<KeyFragment ref="request.querystring" type="string"/>

<KeyFragment>volumesCachedOn</KeyFragment>

</CacheKey>

<Scope>Exclusive</Scope>

<ExpirySettings>

<TimeoutInSeconds>300</TimeoutInSeconds>

</ExpirySettings>

<Source>system.time</Source>

</PopulateCache>

The behavior of the CacheKey, Scope, and ExpirySettings elements is the same as previously covered, so we won’t cover them again here. The only item of note is that we’re using multiple KeyFragments to make the key unique to both the request and the value being cached. The element you haven’t been introduced to yet is Source, which specifies the value to cache; here, it is the built-in flow variable 'system.time', which returns a string representing the current time.

OK, that covers the policy that sets the value into the cache; next, we’ll use the LookupCache policy to read the value into a flow variable.

<LookupCache name="LC-LookupCachedOnDateTime">

<CacheKey>

<KeyFragment ref="request.querystring" type="string"/>

<KeyFragment>volumesCachedOn</KeyFragment>

</CacheKey>

<Scope>Exclusive</Scope>

<AssignTo>volumesCachedOn</AssignTo>

</LookupCache>

And finally, we will use the AssignMessage policy to output the cached time value as a response header.

<AssignMessage name="AM-SetCachedOnHeader">

<Add>

<Headers>

<Header name="cached-on">{volumesCachedOn}</Header>

</Headers>

</Add>

<IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables>

</AssignMessage>

With the policies configured, let’s walk through where they need to be attached in the proxy flows. We’ll use conditions so that the date/time is inserted into the cache only when the response has been inserted into the cache, and the "cached-on" response header is added only when the response payload is returned from the cache.

<ProxyEndpoint name="default">

<PreFlow name="PreFlow">

<Request>

<Step>

<Name>RC-CacheVolumes</Name>

</Step>

</Request>

<Response/>

</PreFlow>

<Flows/>

<PostFlow>

<Response>

<Step>

<Name>AM-SetCacheHitHeader</Name>

</Step>

<Step>

<Name>AM-SetCachedOnHeader</Name>

<Condition>(request.header.x-bypass-cache != "true") and (responsecache.RC-CacheVolumes.cachehit == true)</Condition>

</Step>

</Response>

</PostFlow>

<HTTPProxyConnection>

<BasePath>/v1/samples/books</BasePath>

</HTTPProxyConnection>

<RouteRule name="default">

<TargetEndpoint>default</TargetEndpoint>

</RouteRule>

</ProxyEndpoint>
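The AM-SetCacheHitHeader step attached in the ProxyEndpoint above is not defined in this walkthrough; the actual policy lives in the sample repo. As a rough sketch of what such a policy could look like, assuming it simply surfaces the ResponseCache hit flag, consider the following. The `cache-hit` header name is an assumption and may differ from the sample.

```xml
<!-- Sketch only: the header name "cache-hit" is hypothetical -->
<AssignMessage name="AM-SetCacheHitHeader">
  <Set>
    <Headers>
      <!-- Flag populated by the RC-CacheVolumes ResponseCache policy -->
      <Header name="cache-hit">{responsecache.RC-CacheVolumes.cachehit}</Header>
    </Headers>
  </Set>
  <IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables>
</AssignMessage>
```

Exposing the flag as a header makes it easy to tell from a client whether a given response was fulfilled from the cache.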

<TargetEndpoint name="default">

<PreFlow/>

<Flows/>

<PostFlow name="PostFlow">

<Request/>

<Response>

<Step>

<Name>RC-CacheVolumes</Name>

</Step>

<Step>

<Name>PC-SetCachedOnDateTime</Name>

<Condition>(responsecache.RC-CacheVolumes.cachehit == false)</Condition>

</Step>

<Step>

<Name>LC-LookupCachedOnDateTime</Name>

<Condition>(request.header.x-bypass-cache != "true")</Condition>

</Step>

</Response>

</PostFlow>

<HTTPTargetConnection>

<URL>https://www.googleapis.com/books/v1/volumes</URL>

</HTTPTargetConnection>

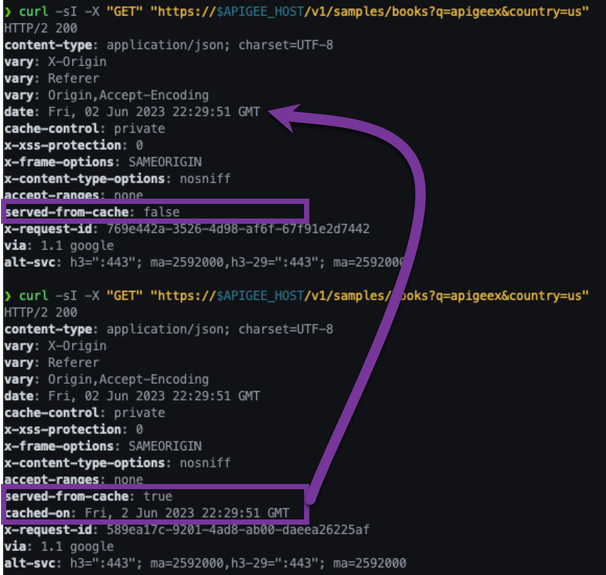

</TargetEndpoint>This time to test out the changes, we’re only interested in seeing the response headers to see that the cached-on header is the date/time of the original non-cached request.

curl -sI -X "GET" "https://$APIGEE_HOST/v1/samples/books?q=apigeex&country=us"

curl -sI -X "GET" "https://$APIGEE_HOST/v1/samples/books?q=apigeex&country=us"

The output of running those commands shows the second request returning the date/time of the original request in the cached-on header.

If you wait beyond the 300 seconds used in the PopulateCache policy’s ExpirySettings and make the request again, you will see this cycle repeat: a first non-cached request, followed by a subsequent request fulfilled from the cache with a different cached-on value.
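Waiting for expiry is not the only way to clear an entry. The InvalidateCache policy mentioned earlier is not part of the sample, but a minimal sketch for removing the cached date/time entry might look like the following. The policy name is hypothetical, and the key and scope deliberately mirror PC-SetCachedOnDateTime so that both resolve to the same entry.

```xml
<!-- Sketch only: IC-InvalidateCachedOnDateTime is a hypothetical policy name -->
<InvalidateCache name="IC-InvalidateCachedOnDateTime">
  <CacheKey>
    <!-- Must match the fragments used by PC-SetCachedOnDateTime -->
    <KeyFragment ref="request.querystring" type="string"/>
    <KeyFragment>volumesCachedOn</KeyFragment>
  </CacheKey>
  <Scope>Exclusive</Scope>
</InvalidateCache>
```

Because the Exclusive scope prefix is derived from where the policy runs, the safer assumption is to attach this in the same TargetEndpoint as the PopulateCache policy. It targets only the date/time entry; the cached response managed by RC-CacheVolumes is stored under a different key.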

Cleaning up resources

To clean up, you can delete the proxy by running the following command.

./clean-up-basic-caching.sh

Conclusion

In this post, you learned how to use Apigee’s caching capabilities and the performance impact they can have on your APIs. Caching is beneficial from a performance perspective and can also help you reduce costs by reducing the number of requests that reach your backend systems.

I encourage you to dive deeper into Apigee’s caching with the following resources:

- Apigee Cache Internals - great article covering the details of caching in Apigee

- Apigee Caching Related Videos
