GenAI and API Management: Security, Scaling, and D...

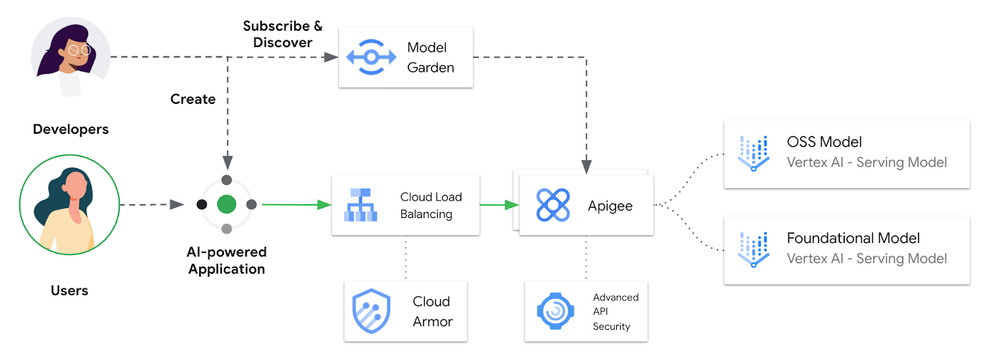

This multipart series will dive into the crucial aspects of making Generative AI (GenAI) applications robust and ready for real-world use. API Management plays a central role in controlling access to LLMs, ensuring security, and managing performance within a GenAI ecosystem.

Part 1: Why Apigee is Key for Vertex AI Endpoint Security

Modern platforms thrive on delivering lightning-fast, personalized experiences. For e-commerce features like search and recommendations, Google Cloud's Vertex AI provides powerful machine learning capabilities. But to handle demanding production workloads, scale seamlessly, and ensure high availability, you need a robust API management solution. Keeping abusive users from flooding a model with queries is also a constant headache for platform administrators. This is where an API management platform can help.

GCP Load Balancers and Cloud Armor provide a strong foundation for Vertex AI endpoint network access and WAF security, but on their own they may not be sufficient for comprehensive defense.

Why aren't Load Balancer and Cloud Armor alone enough to protect Vertex AI endpoints?

Let's recap what GCP Load Balancer and Cloud Armor do:

- GCP Load Balancers: Distribute incoming traffic across multiple Vertex AI instances for scalability and availability. They provide basic network-level (Layer 3/4) security.

- Cloud Armor: A Web Application Firewall (WAF) that protects against Layer 7 attacks such as DDoS, SQL injection, and cross-site scripting (XSS). It integrates with GCP Load Balancers.

The Gaps: Why It's Not Enough

- API-Specific Threats: Load Balancers and Cloud Armor are not specialized in understanding the nuances of API vulnerabilities. Attackers can exploit the logic and structure of APIs (e.g., broken object-level authorization, mass assignment) that general network-level protection may miss.

- Data/Model Security: Vertex AI endpoints may expose sensitive data or models. Cloud Armor won't protect against:

  - Data exfiltration at the API level

  - Unauthorized access to model inference endpoints

  - Attacks on model integrity (model poisoning), in which incorrect or malicious data is fed into the training data or learning process to manipulate the model.

- Business Logic Flaws: Load Balancers and Cloud Armor don't understand your application's business logic. Attackers might manipulate API calls in ways that seem legitimate but abuse the underlying business processes.

Apigee: The Missing Piece

Apigee is an API management solution that complements GCP Load Balancer and Cloud Armor by adding essential layers of protection and control.

1. API-Centric Security

- API Gateway Dedicated for Vertex AI: Consider an API gateway like Apigee that sits in front of your Vertex AI endpoints. This allows for specialized security policies like OAuth, API key/token validation, schema validation, and threat detection tailored to your API's structure.

- Positive Security Model: A positive security model explicitly defines what is allowed rather than just relying on detecting abnormalities.

- Traffic Control: Rate limits and quotas protect your Vertex AI resources from abuse and uncontrolled usage spikes.
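The three controls above map onto standard Apigee policies. The following is a minimal sketch, assuming OAuth 2.0 bearer tokens, an OpenAPI spec for the predict API stored in the proxy as a hypothetical resource named vertex-predict.yaml, and an illustrative limit of 20 requests per second. All policy names are hypothetical.

```xml
<!-- 1. API-centric security: require a valid OAuth 2.0 bearer token
     (read from the Authorization header by default) -->
<OAuthV2 name="Verify-Access-Token">
  <Operation>VerifyAccessToken</Operation>
</OAuthV2>

<!-- 2. Positive security model: reject requests that don't match the OpenAPI spec
     (vertex-predict.yaml is a hypothetical resource file) -->
<OASValidation name="Validate-Predict-Request">
  <OASResource>oas://vertex-predict.yaml</OASResource>
  <Options>
    <ValidateMessageBody>true</ValidateMessageBody>
  </Options>
  <Source>request</Source>
</OASValidation>

<!-- 3. Traffic control: cap bursts at roughly 20 requests per second per client app -->
<SpikeArrest name="Spike-Arrest">
  <Rate>20ps</Rate>
  <Identifier ref="client_id"/>
</SpikeArrest>

<!-- Attach all three to the ProxyEndpoint request PreFlow, before any call to Vertex AI -->
<ProxyEndpoint name="default">
  <PreFlow name="PreFlow">
    <Request>
      <Step><Name>Verify-Access-Token</Name></Step>
      <Step><Name>Spike-Arrest</Name></Step>
      <Step><Name>Validate-Predict-Request</Name></Step>
    </Request>
    <Response/>
  </PreFlow>
  ...
</ProxyEndpoint>
```

Running token verification first means SpikeArrest can throttle per client app (using the client_id that token verification populates), and malformed or abusive requests are rejected before they consume Vertex AI capacity.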

API-Centric Security


2. Apigee as a Load Balancer for Vertex AI Endpoints

- Load Balancing for Regional Resiliency: Distribute traffic intelligently across Vertex AI endpoints deployed in multiple regions using Apigee's load balancing capabilities.

- Centralized Traffic Management: Apigee acts as a single entry point for your API traffic. This simplifies client interactions, allowing them to work with a single API endpoint instead of managing multiple regional Vertex AI Endpoints.

- Intelligent Load Balancing: Apigee offers several load-balancing algorithms (RoundRobin, Weighted, and LeastConnections) to distribute requests across your Vertex AI Endpoints, and health monitoring keeps unhealthy endpoints out of rotation.

- Failover and Resilience: Apigee can detect if a regional Vertex AI Endpoint is unavailable and automatically redirect traffic to healthy endpoints in other regions, improving your application's reliability.

- A/B Testing: Apigee can provide a stable interface that lets application developers transition seamlessly across multiple versions of deployed models without disrupting existing applications. AI practitioners can also roll out new model versions gradually, minimizing business disruption.

Let's see how this works:

Code Sample: Vertex AI Load Balancing (Target Endpoint)

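```xml
<!-- Assumes two TargetServers are defined in the Apigee environment:
     "vertex-us-east1": us-east1-aiplatform.googleapis.com, port 443, TLS enabled
     "vertex-us-west1": us-west1-aiplatform.googleapis.com, port 443, TLS enabled -->
<TargetEndpoint name="vertex-predictions">
  <HTTPTargetConnection>
    <!-- Google access token for the service account the proxy is deployed with -->
    <Authentication>
      <GoogleAccessToken>
        <Scopes>
          <Scope>https://www.googleapis.com/auth/cloud-platform</Scope>
        </Scopes>
      </GoogleAccessToken>
    </Authentication>
    <LoadBalancer>
      <Algorithm>RoundRobin</Algorithm>
      <Server name="vertex-us-east1"/>
      <Server name="vertex-us-west1"/>
      <!-- Take a server out of rotation after 3 failed requests -->
      <MaxFailures>3</MaxFailures>
    </LoadBalancer>
    <!-- Appended to whichever server is selected. Placeholder values: Vertex AI
         paths are region-specific, so every server must accept this path. -->
    <Path>/v1/projects/my-project/locations/REGION/endpoints/ENDPOINT_ID:predict</Path>
  </HTTPTargetConnection>
</TargetEndpoint>
```

This Target Endpoint definition uses Apigee's LoadBalancer with the RoundRobin algorithm to spread requests across Vertex AI endpoints in different regions (e.g., 'us-east1' and 'us-west1'), each registered as a TargetServer. Calls to the Vertex AI API carry a Google access token. When a server reaches MaxFailures, Apigee takes it out of rotation and sends traffic to the remaining servers, so your users keep receiving responses from available Vertex AI endpoints.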
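For the A/B testing scenario, one common pattern is to deploy each model version behind its own TargetEndpoint and let ProxyEndpoint route rules decide which one serves a request. The following is a minimal sketch, assuming hypothetical TargetEndpoints named vertex-model-v1 and vertex-model-v2 and a hypothetical x-model-version header that opted-in clients send. Route rules are evaluated in order and the first match wins, so the unconditional rule goes last.

```xml
<!-- ProxyEndpoint: clients call one stable proxy URL; route rules pick the model version -->
<ProxyEndpoint name="default">
  ...
  <!-- Opted-in callers (hypothetical x-model-version header) get the new model -->
  <RouteRule name="model-v2">
    <Condition>request.header.x-model-version = "v2"</Condition>
    <TargetEndpoint>vertex-model-v2</TargetEndpoint>
  </RouteRule>
  <!-- Everyone else stays on the current model -->
  <RouteRule name="default">
    <TargetEndpoint>vertex-model-v1</TargetEndpoint>
  </RouteRule>
</ProxyEndpoint>

<!-- One TargetEndpoint per model version; V2_ENDPOINT_ID is a placeholder -->
<TargetEndpoint name="vertex-model-v2">
  <HTTPTargetConnection>
    <!-- Requires deploying the proxy with a service account -->
    <Authentication>
      <GoogleAccessToken>
        <Scopes>
          <Scope>https://www.googleapis.com/auth/cloud-platform</Scope>
        </Scopes>
      </GoogleAccessToken>
    </Authentication>
    <URL>https://us-east1-aiplatform.googleapis.com/v1/projects/my-project/locations/us-east1/endpoints/V2_ENDPOINT_ID:predict</URL>
  </HTTPTargetConnection>
</TargetEndpoint>
```

Because clients always call the same proxy URL, promoting v2 to the default becomes a change to the route rules in Apigee rather than a change to client applications.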

Apigee's Health Check and Failover Process

Let's explore how Apigee incorporates health checks and failover mechanisms, and how to reflect those in code.

- Health Probes: Apigee actively sends probes (e.g., HTTP or TCP) to your backend Vertex AI endpoints at regular intervals. These probes can be simple connection checks or more elaborate HTTP requests.

- Failure Detection: Apigee marks a Vertex AI endpoint as unhealthy if:

- It fails to respond to a probe within a designated timeout period.

- It consistently returns error responses (e.g., HTTP 500 errors).

- Traffic Exclusion: When a Vertex AI endpoint is deemed unhealthy, Apigee automatically stops routing traffic to it.

- Failover: Apigee redirects traffic to remaining healthy Vertex AI endpoints based on your load-balancing algorithm. This ensures continuous service availability.

- Recovery: Apigee continues to monitor failed endpoints and automatically brings them back into rotation when they become healthy again.

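Within your Target Endpoint configuration, you'd add a HealthMonitor section inside the HTTPTargetConnection, alongside the LoadBalancer and its TargetServers.

Code Sample: Health Monitor

```xml
<TargetEndpoint name="vertex-predictions">
  <HTTPTargetConnection>
    ...
    <HealthMonitor>
      <IsEnabled>true</IsEnabled>
      <!-- Probe each TargetServer every 10 seconds -->
      <IntervalInSec>10</IntervalInSec>
      <TCPMonitor>
        <!-- A connection that takes longer than 5 seconds counts as a failed probe -->
        <ConnectionTimeoutInSec>5</ConnectionTimeoutInSec>
        <Port>443</Port>
      </TCPMonitor>
    </HealthMonitor>
  </HTTPTargetConnection>
</TargetEndpoint>
```

Apigee also supports more advanced health checking and failure detection:

- Custom Probes: Use an HTTPMonitor instead of a TCPMonitor to define the probe request (verb, path, port, headers) and the response code that marks a target endpoint as healthy.

- MaxFailures: Configure how many failed requests cause a target server to be taken out of rotation.

- ServerUnhealthyResponse: Specify which backend response codes (for example, HTTP 500 or 503) count as failures.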
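In Apigee, request-level failure detection is configured on the LoadBalancer, while the HealthMonitor decides when a failed server may return to rotation. The following is a minimal sketch, assuming two hypothetical TargetServers named vertex-us-east1 and vertex-us-west1; the threshold of 3 failures and the 500/503 status codes are illustrative choices, not recommendations from this article.

```xml
<!-- Sketch: failure detection on the LoadBalancer (hypothetical TargetServer names) -->
<HTTPTargetConnection>
  <LoadBalancer>
    <Algorithm>RoundRobin</Algorithm>
    <Server name="vertex-us-east1"/>
    <Server name="vertex-us-west1"/>
    <!-- Take a server out of rotation after 3 failed requests -->
    <MaxFailures>3</MaxFailures>
    <!-- Count these backend status codes as failures, in addition to connection errors -->
    <ServerUnhealthyResponse>
      <ResponseCode>500</ResponseCode>
      <ResponseCode>503</ResponseCode>
    </ServerUnhealthyResponse>
  </LoadBalancer>
  <!-- Keep probing so a recovered server can rejoin the rotation -->
  <HealthMonitor>
    <IsEnabled>true</IsEnabled>
    <IntervalInSec>10</IntervalInSec>
    <TCPMonitor>
      <ConnectionTimeoutInSec>5</ConnectionTimeoutInSec>
      <Port>443</Port>
    </TCPMonitor>
  </HealthMonitor>
</HTTPTargetConnection>
```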

Apigee's health checks work seamlessly with its load-balancing mechanisms. You can view real-time health status within the Apigee UI or integrate health check data with external monitoring tools.

Limitations

Apigee's health checks confirm that an endpoint is reachable and responding, but they may not immediately detect failures deep within your Vertex AI model. Robust monitoring of the Vertex AI model itself is crucial for more granular health assessments.

3. Apigee Caching and Vertex AI Predictions

A sluggish experience can lead to frustrated users and lost sales. Prefetching and predictive search, powered by Apigee caching and Vertex AI, help address this: by anticipating user queries and caching results, you can dramatically improve search responsiveness.

The Prefetching and Predictive Search Workflow

- Initial User Input: As a user starts typing in your e-commerce search bar, frontend JavaScript subtly triggers background requests to fetch potential search results.

- Apigee's Cache Advantage: Apigee first attempts to serve prefetched results directly from its high-performance cache, offering near-instant responses.

- Cache Population: On a cache miss, Apigee forwards the request to Vertex AI and stores the prediction, so later requests for the same query are served from the cache.

- Seamless Frontend Updates: Your frontend asynchronously receives results (from cache or Vertex AI) and gracefully displays suggestions or previews to the user.

Code Sample: Apigee's caching policies

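```xml
<!-- ResponseCache policy (for example, apiproxy/policies/Search-Cache.xml).
     The cache key is the partial search query from the q query parameter. -->
<ResponseCache name="Search-Cache">
  <CacheKey>
    <KeyFragment ref="request.queryparam.q"/>
  </CacheKey>
  <ExpirySettings>
    <!-- Illustrative time-to-live of 5 minutes -->
    <TimeoutInSeconds>300</TimeoutInSeconds>
  </ExpirySettings>
</ResponseCache>

<!-- ProxyEndpoint: look up the cache before Vertex AI is called -->
<ProxyEndpoint name="default">
  <PreFlow name="PreFlow">
    <Request>
      <Step>
        <Name>Search-Cache</Name>
      </Step>
    </Request>
    <Response/>
  </PreFlow>
  ...
</ProxyEndpoint>

<!-- TargetEndpoint: store the Vertex AI response in the cache -->
<TargetEndpoint name="search-predictions">
  ... <HTTPTargetConnection> ... </HTTPTargetConnection>
  <PostFlow name="PostFlow">
    <Request/>
    <Response>
      <Step>
        <Name>Search-Cache</Name>
      </Step>
    </Response>
  </PostFlow>
</TargetEndpoint>
```

In the code snippet above:

- Search-Cache in the ProxyEndpoint request PreFlow: Apigee checks its cache for results associated with the partial search query (request.queryparam.q). On a cache hit, it returns the cached response without calling Vertex AI.

- Search-Cache in the TargetEndpoint response PostFlow: On a cache miss, Apigee stores the Vertex AI prediction in its cache for subsequent lookups.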
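In Apigee, the cache key belongs in the ResponseCache policy definition rather than in the flow step that references it. A more defensive variant of such a policy might bypass the cache when the query is missing and avoid caching failed predictions. This is a minimal sketch; the policy name Search-Cache and the 10-minute expiry are illustrative assumptions.

```xml
<!-- Sketch: ResponseCache with guards (hypothetical policy name and values) -->
<ResponseCache name="Search-Cache">
  <CacheKey>
    <KeyFragment ref="request.queryparam.q"/>
  </CacheKey>
  <!-- No q parameter: skip the lookup and go straight to Vertex AI -->
  <SkipCacheLookup>request.queryparam.q = null</SkipCacheLookup>
  <!-- Never cache empty-query results or non-200 responses from Vertex AI -->
  <SkipCachePopulation>request.queryparam.q = null or response.status.code != 200</SkipCachePopulation>
  <ExpirySettings>
    <TimeoutInSeconds>600</TimeoutInSeconds>
  </ExpirySettings>
</ResponseCache>
```

The same policy is attached to both the request flow (lookup) and the response flow (population), so the guards apply to each phase independently.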

Benefits of Apigee Caching with Vertex AI

- Lightning-Fast Suggestions: Pre-cached results lead to near-instantaneous search suggestions or product previews.

- Reduced Backend Load: Caching lessens the strain on your Vertex AI endpoints, handling repetitive prediction requests efficiently.

- Scalability: Apigee's distributed cache architecture gracefully handles surges in search traffic.

4. Self-Service Access to Vertex AI Endpoints with a Developer Portal

Apigee Developer Portal is a game-changer for managing and consuming Vertex AI endpoints. It streamlines processes, fosters collaboration, and drives innovation within your organization.

The Problem with Non-Self-Service Approaches

- Bottlenecks: Without self-service, developers depend on central teams or manual processes to access Vertex AI endpoints. This leads to slow onboarding, delays, and frustration.

- Governance Challenges: Controlling access and managing usage of powerful Vertex AI models becomes difficult without a centralized, standardized method.

- Lack of Visibility: Developers may have limited knowledge of existing Vertex AI capabilities, hindering innovation and potential reuse of powerful machine learning models.

How an Apigee Developer Portal Helps

- Marketplace as a Model Garden: The portal becomes a well-organized catalog of your Vertex AI-powered APIs. Developers can easily discover models relevant to their projects.

- Documentation and Guidance: The portal provides clear documentation, API specifications, code examples, and possibly even interactive sandboxes for testing. This speeds up developer understanding and onboarding.

- Streamlined Onboarding: Developers can self-register, request access to APIs, and automatically receive the necessary credentials (API keys or OAuth tokens) through the portal.

- Usage Monitoring and Controls: The portal gives developers visibility into their API usage patterns and quotas. Administrators can manage these controls centrally, ensuring fair and responsible use of Vertex AI resources.

- Feedback Loop: The portal can facilitate communication between API developers (those publishing Vertex AI models as APIs) and API consumers (developers building applications), fostering a collaborative improvement cycle.
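On the runtime side, the credentials and quotas that developers obtain through the portal are enforced by policies in the API proxy. The following is a minimal sketch, assuming apps send their portal-issued key in a hypothetical x-api-key header and that administrators set quota limits on the API product; the policy names and default limits are illustrative.

```xml
<!-- Verify the API key the developer obtained through the portal -->
<VerifyAPIKey name="Verify-API-Key">
  <APIKey ref="request.header.x-api-key"/>
</VerifyAPIKey>

<!-- Enforce the quota configured on the API product the app is subscribed to;
     DefaultConfig is used only if the product defines no quota -->
<Quota name="Product-Quota">
  <UseQuotaConfigInAPIProduct stepName="Verify-API-Key">
    <DefaultConfig>
      <Allow>1000</Allow>
      <Interval>1</Interval>
      <TimeUnit>hour</TimeUnit>
    </DefaultConfig>
  </UseQuotaConfigInAPIProduct>
  <Distributed>true</Distributed>
  <Synchronous>true</Synchronous>
</Quota>
```

Reading limits from the API product keeps quota management central: administrators adjust the product, and every proxy that uses this policy picks up the change without being redeployed.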

Summary - The Winning Combination

The Best Defense is Layered. Think of it this way:

- GCP Load Balancer: The robust traffic director.

- Cloud Armor: The frontline shield against web-based attacks.

- Apigee: The intelligent guardian of your APIs and the gateway to your Vertex AI models.

With Apigee as your API management layer and Vertex AI handling the machine learning intelligence, you build a platform designed for scale, performance, and the personalized experiences that drive customer satisfaction and loyalty.

Start Your Journey

Ready to unlock the full potential of Apigee and Vertex AI in your environment? Explore Google Cloud's documentation and experiment with the Apigee capabilities that best suit your business needs.
