- Google Cloud

- Articles & Information

- Cloud Product Articles

- Flexible Apigee Analytics in BigQuery and Data Stu...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Article History

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

In this article we want to explore how we can reinforce the already rich analytics capabilities of Apigee by bringing them to BigQuery. BigQuery is Google Cloud’s fully-managed and highly scalable data warehouse and integrates nicely with a number of common enterprise data analytics tools as well as a large number of data sources. Bringing Apigee analytics data to BigQuery allows users to leverage the full feature set of the BigQuery engine and even build and operationalize machine learning models using BigQuery ML.

This article explains the following three concepts:

- How to export Apigee analytics data to BigQuery

- How to query Apigee analytics data from within BigQuery

- How to build intelligent dashboards for Apigee analytics data using Data Studio

Exporting Apigee Analytics Data to BigQuery

The Apigee documentation lists the prerequisites and explains how to export Apigee analytics to either Google Cloud Storage or BigQuery. In this article we focus on how to export data to BigQuery because it allows us to perform subsequent analytics tasks.

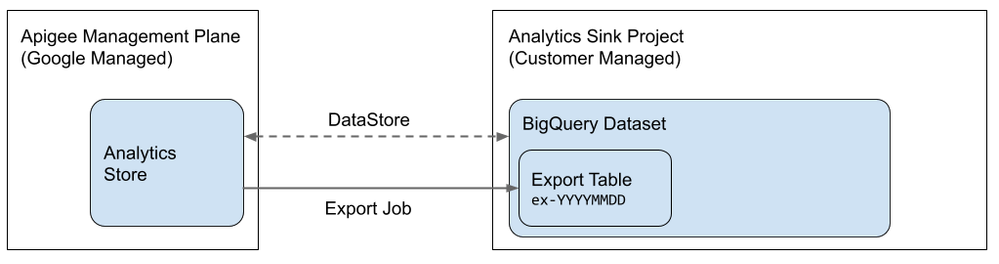

The following illustration captures all the required components at a high level. In every Apigee organization the management plane captures Analytics data for a specific as per the Apigee subscription terms. Users can then choose to create a data store in an arbitrary GCP project. In the case of a BigQuery export this data store points to a BigQuery data set. Customers can then create export jobs to export slices of the analytics data as separate tables within the BigQuery dataset.

RBAC permission for the Apigee Service Account

To allow the Google Managed Apigee Management Plane to create new tables within a BigQuery data set it requires a set of permissions. The following snippet can be used to assign the BigQuery JobUser and Data Editor roles to the Management Plane’s service account:

PROJECT_ID=<>

PROJECT_NUMBER=$(gcloud projects describe "${PROJECT_ID}" --format="value(projectNumber)")

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member=serviceAccount:service-$PROJECT_NUMBER@gcp-sa-apigee.iam.gserviceaccount.com --role=roles/bigquery.jobUser

gcloud projects add-iam-policy-binding $PROJECT_ID \

--member=serviceAccount:service-$PROJECT_NUMBER@gcp-sa-apigee.iam.gserviceaccount.com --role=roles/bigquery.dataEditorCreate a BigQuery Data Set

Now that the service account is authorized to manipulate the BigQuery data we need to create a BigQuery data set.

Note that the dataset needs to be created within a specific location. If your analytics region is in the US then you’ll have to change the BQ_LOCATION to “us” as well.

BQ_LOCATION="eu"

DATASET_NAME="apigeeexport_emea"

bq mk --project_id $PROJECT_ID --location=$BQ_LOCATION apigeeexport_emeaCreate Apigee Datastore

The datastore resource is the Apigee object that contains the necessary configuration for the sink of an export job.

Pro Tip: If you have large amounts of data, it makes sense to create dedicated datastores for each environment and use the environment name as the table prefix to make querying it more efficient.

DATASTORE_NAME="bqDatastoreTest"

ENV="test"

TOKEN=$(gcloud auth print-access-token)

curl "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/analytics/datastores" \

-X POST \

-H "Content-type:application/json" \

-H "Authorization: Bearer $TOKEN" \

-d \

"{

\"displayName\": \"$DATASTORE_NAME\",

\"targetType\": \"bigquery\",

\"datastoreConfig\": {

\"projectId\": \"$PROJECT_ID\",

\"datasetName\": \"$DATASET_NAME\",

\"tablePrefix\": \"apigee-$ENV\"

}

}"

Submit your first Apigee Analytics Export Job

Apigee analytics export jobs are issued per environment and per day that the data is captured.

Note that there are analytics export limits enforced per organization as documented here.

The following snippet creates a data export job for the “test” environment for August 30th (starting at 2021-08-30 at 00:00:00 UTC and ending at 2021-08-31 at 00:00:00 UTC):

START_DATE_INCL="2021-08-30"

END_DATE_EXCL="2021-08-31"

curl "https://apigee.googleapis.com/v1/organizations/$PROJECT_ID/environments/$ENV/analytics/exports" \

-X POST \

-H "Content-type:application/json" \

-H "Authorization: Bearer $TOKEN" \

-d "{

\"name\": \"Export query results to BigQuery\",

\"description\": \"BQ Export Job\",

\"dateRange\": {

\"start\": \"$START_DATE_INCL\",

\"end\": \"$END_DATE_EXCL\"

},

\"outputFormat\": \"csv\",

\"csvDelimiter\": \",\",

\"datastoreName\": \"$DATASTORE_NAME\"

}"

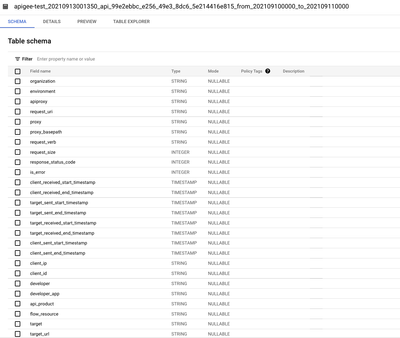

In the BigQuery UI you should now see a new table in the dataset that you created before that has a schema similar to the one shown in the screenshot below:

Querying Analytics Data in BigQuery

Now that you have your analytics data in BigQuery you can start querying it. For example, to get a summary of the number of API calls per developer you can run the following query against your data.

Note that if you have exported multiple days and want to query across multiple tables you can use the *-operator to query all tables with a common prefix.

SELECT developer, COUNT(gateway_flow_id) as calls

FROM `my_project.apigeeexport_emea.apigee-test_2021*`

GROUP BY developerWhich should give you a result similar to the following JSON array:

[

{

"developer": "(not set)",

"calls": "22"

},

{

"developer": "my-project@@@e31dfcef-022d-475e-9044-fd286e1889b2",

"calls": "579"

},

{

"developer": "my-project@@@077794c5-ec39-4e8e-baa0-c5f280e256d3",

"calls": "491"

},

{

"developer": "my-project@@@27cdb9e0-b38b-4fd4-b86e-a4985afbbba5",

"calls": "953"

}

]Displaying Apigee Analytics in a Data Studio Dashboard

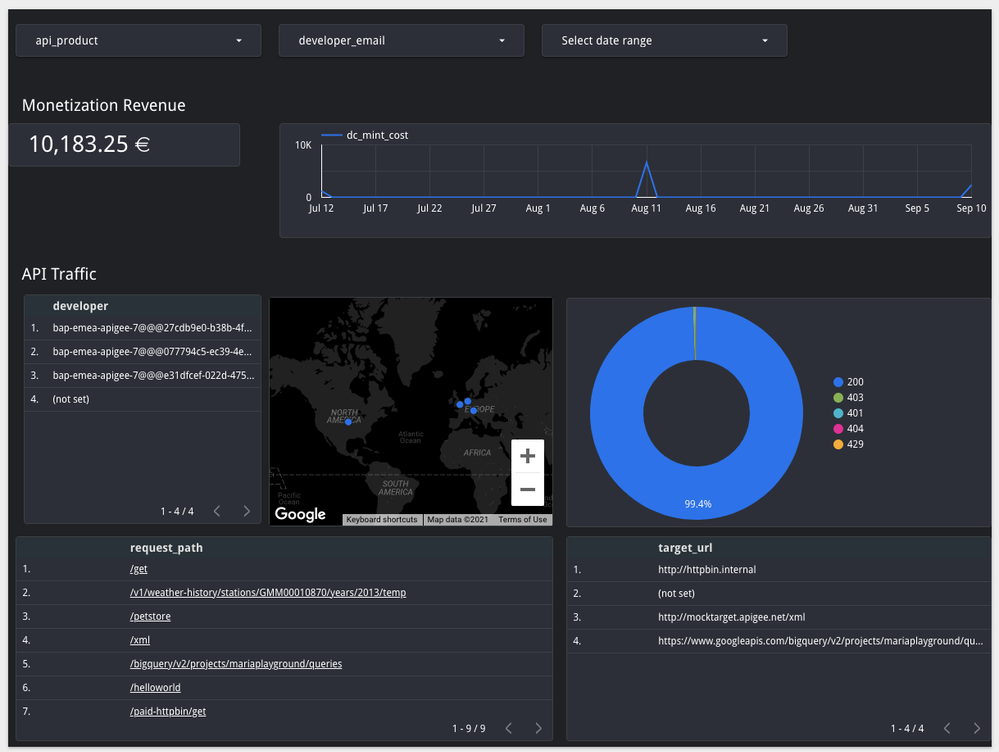

After we explored our dataset and maybe also discovered interesting correlations to other enterprise data held in BigQuery or another data store, we might want to generate dashboards that visualize key enterprise data with respect to the API program. For this, you are free to use any BI tool of your choice or assemble a simple dashboard based on Google Data Studio.

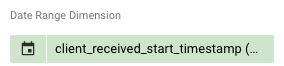

To use BigQuery as a Data Studio data source you can follow the steps described here. To allow for dynamic display using the date picker element of Data Studio you need to set the page’s “Date Range Dimension” value to a sensible field such as the client_received_start_timestamp.

With that out of the way we can then add any interesting visualization based on the available data in BigQuery or any other supported datasource.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi,

Is possible execute all exports from one datastore in the same Big Query table? I'd like to have a partitioned table with the exported data.

Thank you,

Regards.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

The Apigee export only allows you to specify the "tablePrefix" and creates a new table for every export. You'd have to merge the tables in BQ itself.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi, thanks for sharing this.

Is this article valid for both, Apigee Edge and Apigee X (both cloud managed)?

Twitter

Twitter