Build Effective DR Strategy for Apigee Hybrid

Goal

The goal is to ensure that Apigee Hybrid can be restored to a fully operational state in the event of a disaster, and to guide Apigee practitioners toward operational readiness.

Scope

This strategy covers the following areas:

- Data backup & replication: This will ensure that data is backed up, so that it can be restored in the event of a disaster.

- Disaster recovery testing: This will ensure that the disaster recovery plan is effective and that Apigee Hybrid can be restored to a fully operational state in a timely manner.

- Disaster recovery plan: This will document the steps that need to be taken to restore Apigee Hybrid in the event of a disaster.

Risks

If a DR strategy is not properly implemented, an organization risks the following:

- Data loss: This could occur if data is not backed up properly or if the data backup is lost or corrupted.

- Service disruption: This could occur if Apigee Hybrid is not able to be restored to a fully operational state in a timely manner.

- Financial loss: This could occur if Apigee Hybrid is not able to be restored to a fully operational state and the company loses revenue as a result.

Background

One of the questions Apigee IT administrators ask most often is how to build a DR strategy for the Apigee Hybrid platform. Apigee differs from traditional applications or services because it is designed for scalability and availability.

The fundamental shift in how we think about DR in Apigee is in terms of data replication and restoration. In the traditional approach, there is always an active site and a passive site, and traffic is switched from the active site to the passive site when the active site fails. Apigee takes a different approach to building DR. In Apigee, you set up DR as a new active region so that cross-region data synchronization happens in real time. This design ensures that, in a disaster, an active site keeps serving traffic and the failed site can restore and replicate its data from a healthy active site.

To elaborate further, let's look at what counts as a disaster and which scenarios can trigger one in Apigee.

A disaster is a situation triggered by a catastrophic system failure that makes applications unavailable and leads to loss of business. In the case of Apigee, we define a disaster as a situation where API traffic is disrupted by a failure of infrastructure or systems. Let's look at how HA and disaster scenarios can be categorized for Apigee.

HA Scenarios

- Network failure

- Disk failures on Apigee nodes

- Apigee node failure

- Single site failure in a multi-site deployment

Disaster Scenarios

- Data corruption in Apigee Datastore

- Other irrecoverable infrastructure failures impacting all Apigee sites of an organization

If any of the above conditions triggers disruption in API traffic we can call it a disaster. Some of Apigee's common failure scenarios are listed below.

- Single Node or Disk Failure in a Site [Take Action]

- Site Failure for Single Site Apigee Installation [Take Action]

- Single/more Site Failure for Multi Site Apigee Installation with at least one healthy site [Take Action]

- All Site Failure for Multi Site Apigee Installation [Take Action]

HA/Failure Patterns

Single Node Failure

A single node failure in a Kubernetes cluster is generally handled gracefully, because the cluster should always be designed for high availability. We recommend at least 3 nodes for the Apigee runtime component and at least 3 nodes for the Apigee data component to ensure high availability of Apigee in the Kubernetes cluster.

Persistent Disk Failure

Decommissioning and Bringing Up a New Cassandra Node

Decommissioning the Old Node:

- Drain the Node: If the node is still reachable, use nodetool drain to flush its memtables to disk and stop it from accepting new writes.

- Remove the Node from the Ring: If the node is already down, run nodetool removenode from a healthy node, passing the failed node's host ID. This updates the ring topology and assigns its data partitions to other nodes.

- Decommission the Node: If the node is still running, use nodetool decommission instead, which streams its data to the remaining nodes before it leaves the ring.

Bringing Up the New Node:

- Prepare the new node and add it to the apigee-data node pool of the Kubernetes cluster.

- The replacement Cassandra node should then automatically rejoin the cluster and bootstrap its data.

Note: NFS storage is strictly not supported.
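As a rough illustration of the removal step, the sketch below parses the text output of `nodetool status` and lists the nodes reported as down, together with the host ID that `nodetool removenode` takes as its argument. The sample output, addresses, and host IDs are made up for illustration, and the helper name `find_down_nodes` is hypothetical. How you capture the output from your Cassandra pods is left to your environment.

```python
import re

# Matches a node line from `nodetool status`: a two-letter status/state code
# (U/D for up/down, N/L/J/M for normal/leaving/joining/moving), the address,
# and the host ID (a UUID) further along the line.
NODE_LINE = re.compile(
    r"^(?P<code>[UD][NLJM])\s+(?P<address>\S+)\s+.*?"
    r"(?P<host_id>[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12})"
)


def find_down_nodes(status_output: str) -> list[dict[str, str]]:
    """Return the address and host ID of every node reported as down."""
    down = []
    for line in status_output.splitlines():
        match = NODE_LINE.match(line.strip())
        if match and match.group("code").startswith("D"):
            down.append(
                {"address": match.group("address"), "host_id": match.group("host_id")}
            )
    return down


# Illustrative output only; the addresses and host IDs are invented.
SAMPLE = """\
Datacenter: dc-1
================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address    Load      Tokens  Owns (effective)  Host ID                               Rack
UN  10.0.0.11  1.2 MiB   256     100.0%            0b1c2d3e-1111-4a5b-8c9d-000000000001  ra-1
DN  10.0.0.12  1.1 MiB   256     100.0%            0b1c2d3e-2222-4a5b-8c9d-000000000002  ra-1
UN  10.0.0.13  1.3 MiB   256     100.0%            0b1c2d3e-3333-4a5b-8c9d-000000000003  ra-1
"""

for node in find_down_nodes(SAMPLE):
    # Print the command an operator would review before running it by hand.
    print(f"nodetool removenode {node['host_id']}  # down node at {node['address']}")
```

The sketch only prints the candidate command. Removing a node from the ring is destructive, so it is safer to keep a human review step before anything is executed.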

Single Site Failure in Single Site deployment

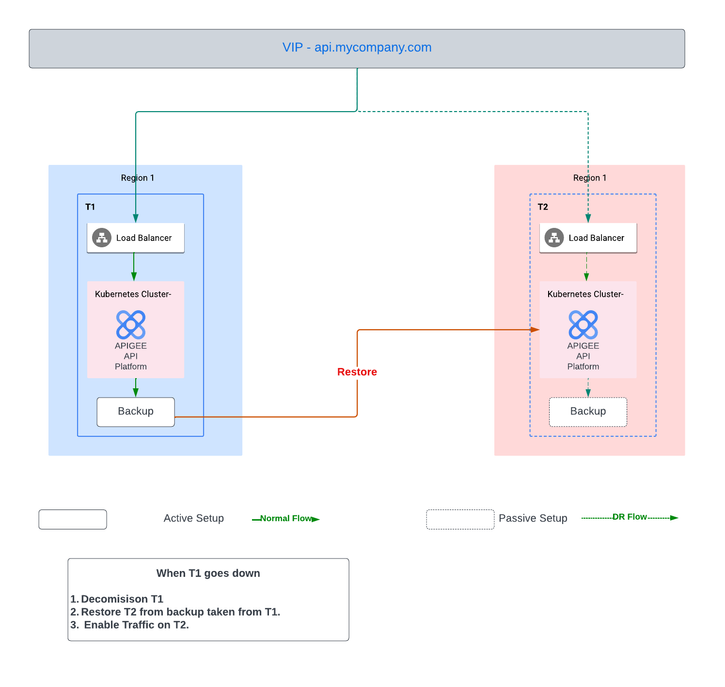

Non-production clusters are generally set up in a single region. In a disaster, the site is restored from backup.

Recovering from a Failure:

- Decommission / cleanup the impacted Cluster:

  - Assess the situation: Before decommissioning, ensure the bad cluster poses no immediate threat to the remaining infrastructure or ongoing operations.

  - Graceful shutdown: If possible, gracefully shut down applications and services running on the bad cluster to minimize data loss and service disruption.

  - Isolate resources: Disconnect the bad cluster from the network and other dependencies to prevent further damage or propagation of issues.

  - Data salvage (optional): If possible and safe, attempt to salvage any critical data still residing on the bad cluster before complete decommission.

- Restore New Cluster from the Backup:

  - Verify backup integrity: Ensure the backup used for restoration is complete, consistent, and hasn't been corrupted.

  - Choose deployment method: Decide whether to deploy the new cluster from the backup onto new infrastructure or restore it onto salvaged hardware from the bad cluster (if feasible).

  - Configuration and customization: Apply any necessary configuration changes or customizations to the restored cluster to match the original environment.

  - Data migration (optional): If data was salvaged from the bad cluster, migrate it to the new cluster.

- Enable Traffic on New Cluster:

  - Testing and validation: Thoroughly test the restored cluster functionality and performance before enabling traffic. This includes network connectivity, application deployment, and service validation.

  - Monitoring and alerting: Set up appropriate monitoring and alerting systems to detect and address any issues that arise after enabling traffic.

  - Documentation and knowledge sharing: Document the entire recovery process for future reference and knowledge sharing within your team.
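One simple way to approach the "verify backup integrity" step is to record a checksum when the backup is taken and compare it before restoring. The sketch below assumes the backup is available as a single archive file and that a SHA-256 digest was stored alongside it. Both the file name and the helper names are hypothetical, not part of any Apigee tooling.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_is_intact(path: Path, expected_sha256: str) -> bool:
    """True when the file exists and its digest matches the recorded one."""
    return path.is_file() and sha256_of(path) == expected_sha256.lower()


# Demonstration with a throwaway file standing in for a backup archive.
with tempfile.TemporaryDirectory() as tmp:
    archive = Path(tmp) / "backup.tgz"  # hypothetical file name
    archive.write_bytes(b"example backup payload")
    recorded = sha256_of(archive)  # digest recorded at backup time
    ok_before = backup_is_intact(archive, recorded)
    archive.write_bytes(b"corrupted payload")  # simulate corruption
    ok_after = backup_is_intact(archive, recorded)

print(ok_before, ok_after)
```

A matching checksum only shows that the bytes are unchanged since the backup was taken. It does not prove the backup restores cleanly, so a periodic test restore is still needed.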

Additional considerations:

- Communication: Keep stakeholders informed throughout the recovery process, explaining the situation, progress, and expected timeline.

- Security: Ensure the restored environment adheres to your security standards and best practices.

- Post-mortem analysis: Once things stabilize, conduct a post-mortem analysis to identify the root cause of the disaster and implement preventative measures for the future.

By implementing these additional details and considerations, you can ensure a smoother and more efficient recovery process after a disaster. Remember, the specific steps may vary depending on your specific infrastructure, software, and disaster scenario.

Key Takeaways

- Periodic Backups:

  - We recommend CSI snapshot-based backups wherever applicable. GCS or NFS backups take much longer to back up and restore.

  - Backups are crucial: they give you a point-in-time snapshot of your data and configuration, allowing you to restore after a disaster.

  - Frequency: Consider your tolerance for data loss and choose a backup schedule that balances risk and resource usage. Daily or hourly backups might be appropriate for critical systems.

  - Location: Store backups securely in a separate region to avoid being affected by the same disaster. Cloud storage services offer good options.

- Downtime is Inevitable:

  - Even with backups and automation, there will be some downtime during recovery. The goal is to minimize it as much as possible.

  - Focus on recovery time objectives (RTOs): Define how quickly you need Apigee back online after a disaster. This will guide your DR strategy and resource allocation.

- Ample Provisioning Resources:

  - The resources provisioned for recovery should be identical to those of the impacted cluster.

  - Essential for fast recovery. Ensure you have enough compute, storage, and network capacity available to spin up a new Apigee cluster on demand.

  - Pre-provisioning: Consider pre-provisioning some resources to further reduce downtime during disaster recovery.

- Passive Kubernetes Cluster:

  - Highly recommended! Having a pre-configured, idle Kubernetes cluster standing by can significantly reduce recovery time. This applies mainly to on-premises deployments such as Anthos and OSE.

  - Benefits: The cluster is already set up and ready to accept your Apigee backup, eliminating the need to configure everything from scratch during a disaster.

- Automation is Key:

  - Automate as much as possible! Use infrastructure-as-code tools to automate provisioning and configuration for both your passive cluster and Apigee deployment.

  - Benefits: Automation reduces human error, speeds up recovery, and improves consistency.
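To make the backup-frequency trade-off concrete, the sketch below estimates the worst-case data loss for a given schedule and checks it against a target RPO. It assumes backups start at a fixed interval, capture the state as of their start time, and only become usable once they complete. The intervals, durations, and RPO values are illustrative, not recommendations.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta, backup_duration: timedelta) -> timedelta:
    """Upper bound on lost data when relying on backups alone.

    If the disaster hits just before the in-progress backup completes, the
    newest usable backup started one full interval before the in-progress one.
    """
    return backup_interval + backup_duration


def meets_rpo(backup_interval: timedelta, backup_duration: timedelta, rpo: timedelta) -> bool:
    """True when the schedule keeps worst-case loss within the RPO."""
    return worst_case_data_loss(backup_interval, backup_duration) <= rpo


rpo = timedelta(hours=4)  # illustrative objective

daily_ok = meets_rpo(timedelta(hours=24), timedelta(minutes=30), rpo)
hourly_ok = meets_rpo(timedelta(hours=1), timedelta(minutes=10), rpo)

print(f"daily backups meet a 4h RPO: {daily_ok}")
print(f"hourly backups meet a 4h RPO: {hourly_ok}")
```

Under these assumptions a daily schedule cannot meet a four-hour RPO, while an hourly one can. The calculation covers restore-from-backup scenarios only. Where a healthy active region is available to seed from, the effective data loss is governed by replication rather than the backup schedule.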

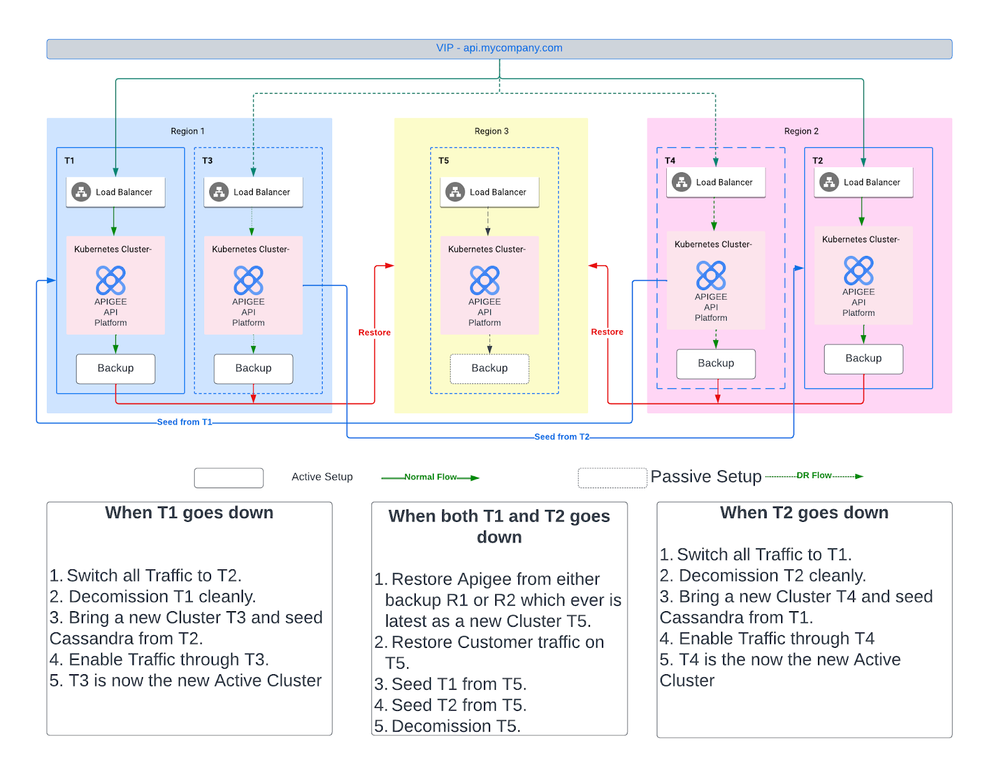

Multi Site Failure

Single Site Failure in Multi Site deployment

Recovering from a Failure:

Switching traffic to Active Site:

- Identify the active sites: Confirm which sites are currently considered active and serving production traffic.

- Drain connections: Depending on your application and network configuration, you may need to gracefully drain connections from the bad site before switching traffic. This could involve directing new connections to the active sites while allowing existing connections on the bad site to finish gracefully.

- Update routing: Modify your routing configuration (e.g., BGP, DNS) to direct traffic away from the bad site and towards the active sites. This ensures new connections are routed to the healthy sites.
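The routing update above amounts to removing the failed site from the traffic split and spreading its share across the healthy sites. The sketch below models that as a plain weight calculation. The site names and weights are hypothetical, and applying the result to DNS, BGP, or a load balancer is specific to your environment and not shown.

```python
def shift_traffic(weights: dict[str, float], failed_site: str) -> dict[str, float]:
    """Drop failed_site and rescale the remaining weights so they sum to 1."""
    if failed_site not in weights:
        raise KeyError(f"unknown site: {failed_site}")
    remaining = {site: w for site, w in weights.items() if site != failed_site}
    total = sum(remaining.values())
    if total <= 0:
        # No healthy site is carrying traffic; this is the all-sites-down case.
        raise ValueError("no healthy site left to receive traffic")
    return {site: w / total for site, w in remaining.items()}


# Hypothetical three-site deployment before the failure.
current = {"us-east1": 0.5, "us-west1": 0.25, "europe-west1": 0.25}
after_failover = shift_traffic(current, "us-east1")
print(after_failover)
```

Rescaling proportionally preserves the relative split between the surviving sites. Before applying it, check that each surviving site has enough capacity for its larger share.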

Decommission Bad Site:

- Backup data: Before decommissioning, ensure you have a recent and complete backup of the data on the bad site.

- Stop processes: Shut down any running processes or services on the bad site.

- Ensure that the replication strategy of Cassandra doesn’t have any references to the bad site.

- Remove configuration: Update your network and application configurations to reflect the removal of the bad site.
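For the Cassandra replication step, one option is to generate the `ALTER KEYSPACE` statement that drops the failed datacenter from a keyspace's `NetworkTopologyStrategy` settings and review it before running it. The sketch below only builds the CQL text. The keyspace name, datacenter names, and replication factors are hypothetical, and the current settings are assumed to have been read from the cluster beforehand.

```python
def drop_datacenter(keyspace: str, replication: dict[str, int], bad_dc: str) -> str:
    """Build an ALTER KEYSPACE statement that omits bad_dc.

    `replication` maps datacenter name to replication factor, as used by
    NetworkTopologyStrategy. The keyspace name is emitted unquoted, so this
    sketch assumes a lowercase identifier.
    """
    remaining = {dc: rf for dc, rf in replication.items() if dc != bad_dc}
    if not remaining:
        raise ValueError("refusing to remove the last datacenter from the keyspace")
    options = ", ".join(f"'{dc}': {rf}" for dc, rf in sorted(remaining.items()))
    return (
        f"ALTER KEYSPACE {keyspace} WITH replication = "
        f"{{'class': 'NetworkTopologyStrategy', {options}}};"
    )


# Hypothetical keyspace replicated to two datacenters; dc-2 is the bad site.
statement = drop_datacenter("example_keyspace", {"dc-1": 3, "dc-2": 3}, "dc-2")
print(statement)
```

The same statement has to be generated for every keyspace that still references the bad site, including any system keyspaces that use `NetworkTopologyStrategy`.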

Create a new Site and seed Cassandra data:

- Provision infrastructure: Set up the hardware and software for the new site. This may involve installing Apigee and configuring it for your specific needs.

- Seed data: Choose one of the active sites as the source for seeding data.

- Validate data integrity: After seeding, verify that the data on the new site is complete and consistent with the active site.

Enable Traffic on new Site:

- Test and validate: Once the new site is ready, thoroughly test and validate its functionality and performance. This could involve running test workloads and comparing them to the active sites.

- Update routing: Once validated, update your routing configuration to allow traffic to be directed to the new site alongside the existing active sites.

All Sites Failures in Multi Site deployment

Recovering from a Failure:

- Restore New Cluster from the Backup:

  - Use the latest backup from one of the impacted sites.

  - Verify backup integrity: Ensure the backup used for restoration is complete, consistent, and hasn't been corrupted.

  - Create a new Cluster from the latest backup on a disaster Site. Ideally the disaster site should be separate from the original active sites.

  - Configuration and customization: Apply any necessary configuration changes or customizations to the newly restored cluster to match the original environment.

- Enable Traffic on New Disaster Site.

- Decommission / cleanup the original impacted Clusters.

- Restore the impacted sites one by one by seeding from the new disaster site.

- Once all sites have been restored and the platform is in desired state, decommission the disaster site.
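Choosing "the latest backup from one of the impacted sites" can be reduced to a small selection rule: take the most recent backup, across all sites, that has passed verification. The sketch below assumes you keep a catalogue of backups with a site name, a timestamp, and a verification flag. The `Backup` record and the sample entries are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Backup:
    site: str
    taken_at: datetime
    verified: bool  # result of an integrity check, however it was performed


def latest_usable_backup(backups: list[Backup]) -> Optional[Backup]:
    """Return the newest verified backup, or None if no backup is usable."""
    usable = [b for b in backups if b.verified]
    return max(usable, key=lambda b: b.taken_at, default=None)


# Hypothetical catalogue spanning two failed sites.
catalogue = [
    Backup("site-a", datetime(2024, 3, 1, 2, 0, tzinfo=timezone.utc), verified=True),
    Backup("site-b", datetime(2024, 3, 1, 6, 0, tzinfo=timezone.utc), verified=False),
    Backup("site-b", datetime(2024, 3, 1, 4, 0, tzinfo=timezone.utc), verified=True),
]

chosen = latest_usable_backup(catalogue)
print(chosen)
```

In this example the newest backup is skipped because it failed verification, so the restore would start from the 04:00 backup taken at site-b.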

Key Takeaways:

- Periodic backups: This is crucial for any system, and Apigee is no exception. Taking routine backups ensures you have a recent version to restore from in case of a failure.

- At Least One Active Region: Keeping at least one healthy active region ensures continuous traffic flow even if another region experiences issues.

- Seeding from Active Region: When a region fails, seeding the new cluster from an existing active region is faster and more reliable than restoring from a backup.

- Last Active Region Backup: If all active regions are down, restoring from the latest backup from the last active region is the best option.

- Automation for Recovery: Utilizing automation for infrastructure provisioning and Apigee installation significantly reduces downtime during recovery.

Additional considerations:

- Restore from backup in one region: Many customers run both cloud and non-cloud regions. Cloud regions can use CSI backups (disk snapshots), while non-cloud regions typically back up to a GCS bucket, which is slower. Prefer the CSI backup when restoring.

- Backup frequency: Determine the appropriate backup frequency based on your risk tolerance and acceptable data loss. Daily backups are common, but you might need more frequent backups for critical systems.

- Backup locations: Store backups in multiple geographically dispersed locations to protect against localized disasters. Consider cloud storage or secondary data centers.

- Testing and recovery drills: Regularly test your recovery process to ensure it works as expected. Practice makes perfect!

- Monitoring and alerting: Implement monitoring systems to detect potential issues early and trigger automated recovery processes if needed.

- Security: Ensure backups are encrypted and stored securely to protect against unauthorized access.

Testing Recovery Scenario

Testing your recovery capabilities for both single and multi-region failures is crucial for ensuring business continuity and data resilience. Here are some specific approaches for each scenario:

Testing Recovery from Single Region Failure:

- Simulate the Failure: Trigger a controlled outage or failover of the entire single region. This could involve shutting down servers, network connections, or even the entire regional infrastructure.

- Validate Recovery Time Objective (RTO) and Recovery Point Objective (RPO): Measure the time it takes for critical applications and services to be available again in the secondary region (RTO) and the amount of data loss incurred during the outage (RPO). Compare these to your set objectives to assess performance.

- Test Data Replication: Verify that data replication between regions is functioning correctly and that data is consistently updated across all regions.

- Application Functionality and Performance: Test the functionality and performance of critical applications in the secondary region. Look for any errors, degraded performance, or unexpected behavior.

- Failback Testing: After restoring service in the secondary region, test failback to the primary region once the outage is resolved. This ensures smooth switchover back to the original configuration.
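The RTO and RPO validation step can be recorded with a few timestamps taken during the drill. The sketch below assumes you note when the outage was triggered, when service was confirmed healthy in the secondary region, and the time of the last write known to have replicated there. The function names, timestamps, and objectives are illustrative.

```python
from datetime import datetime, timedelta


def measure_drill(
    outage_start: datetime,
    service_restored: datetime,
    last_replicated_write: datetime,
) -> tuple[timedelta, timedelta]:
    """Return (measured RTO, measured RPO) for one recovery drill."""
    measured_rto = service_restored - outage_start
    # Writes made after the last replicated one and before the outage are lost.
    measured_rpo = max(outage_start - last_replicated_write, timedelta(0))
    return measured_rto, measured_rpo


def drill_passed(
    measured_rto: timedelta,
    measured_rpo: timedelta,
    rto_objective: timedelta,
    rpo_objective: timedelta,
) -> bool:
    """True when both measurements are within their objectives."""
    return measured_rto <= rto_objective and measured_rpo <= rpo_objective


outage = datetime(2024, 1, 10, 9, 0, 0)
rto, rpo = measure_drill(
    outage_start=outage,
    service_restored=datetime(2024, 1, 10, 9, 12, 0),
    last_replicated_write=datetime(2024, 1, 10, 8, 59, 30),
)
passed = drill_passed(rto, rpo, timedelta(minutes=15), timedelta(minutes=1))
print(f"RTO={rto}, RPO={rpo}, passed={passed}")
```

Keeping these measurements from every drill makes it easier to see whether recovery is getting faster or slower as the platform changes.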

Testing Recovery from All Regions Failure:

- Simulate a Cascading Failure: If your multi-region setup is geographically dispersed, consider a scenario where a major event like a natural disaster disrupts all regions simultaneously.

- Tertiary Region or Global Recovery Site: Assess your recovery options in the absence of all primary and secondary regions. This could involve activating a tertiary region, utilizing cloud-based disaster recovery services, or relying on manual recovery processes.

- Prioritization and Resource Allocation: Test your ability to prioritize critical applications and services for recovery based on their business impact. Allocate resources efficiently to ensure the fastest recovery for essential functions.

- Communication and Coordination: Practice communication protocols and coordination between teams during a widespread outage. Ensure clear escalation paths and effective decision-making under pressure.

- Post-Mortem Analysis: After any recovery test, conduct a thorough post-mortem analysis to identify areas for improvement. Document lessons learned, update recovery plans, and refine your testing strategy for future iterations.

Additional Tips:

- Use Automation Tools: Leverage automation tools to trigger failures, monitor recovery progress, and collect data for analysis.

- Involve Stakeholders: Include representatives from various departments like IT, operations, and business continuity in the testing process.

- Regular Testing: Schedule regular recovery testing exercises to ensure your plans remain effective and your team stays prepared.

- Adapt to Changes: Update your recovery plans and testing procedures as your infrastructure and applications evolve.

By thoroughly testing your recovery capabilities in various multi-region failure scenarios, you can build confidence in your disaster preparedness and minimize downtime in case of real-world disruptions.

Final thoughts on Disaster Recovery strategy:

Disaster Recovery (DR) strategy hinges on two crucial metrics:

- Recovery Time Objective (RTO): The acceptable amount of downtime after a disaster before critical operations resume.

- Recovery Point Objective (RPO): The maximum amount of data loss acceptable after a disaster.

The ideal DR strategy minimizes both RTO and RPO. Real-time data replication and active-active multi-site setups in Apigee can significantly reduce RPO, while efficient recovery procedures and redundant infrastructure can bring down RTO.

Here are some additional points to consider:

- Regular backups: Regular backups are essential for minimizing data loss and achieving lower RPOs. Automate backups and store them securely off-site for maximum protection.

- Testing and validation: Regularly test your DR plan to ensure its effectiveness. Simulate disaster scenarios and identify areas for improvement.

- Communication: Clearly communicate the DR plan to all stakeholders so everyone knows their roles and responsibilities during an actual disaster.

- Continuous improvement: DR is an ongoing process. Regularly review your strategy, adapt to new threats and technologies, and keep your plan up-to-date.

Remember, a well-defined and tested DR strategy is crucial for business continuity and minimizing the impact of unforeseen events.
