- Apigee Hybrid - How to Set Up Apigee hybrid on Ope...

- Introduction

- Prerequisites for OpenShift IPI (Installer Provisioned Infrastructure)

- Step 1 - Create an OpenShift Account

- Step 2 - Under Run it yourself, select the Google Cloud option

- Step 3 - Download and install the OpenShift installer and CLI

- Step 4 - Create a service account in GCP and give it a number of permissions

- Step 5 - Set the Google Credentials variable

- Step 6 - Create the OpenShift cluster

- Step 7 - Create node pools (Machine-sets in OpenShift)

- Validation

- References

Introduction

This write-up describes how to set up Apigee hybrid on Red Hat OpenShift version 4.7. It applies to Apigee hybrid versions 1.6 and later, running on OpenShift virtual machines in Google Cloud.

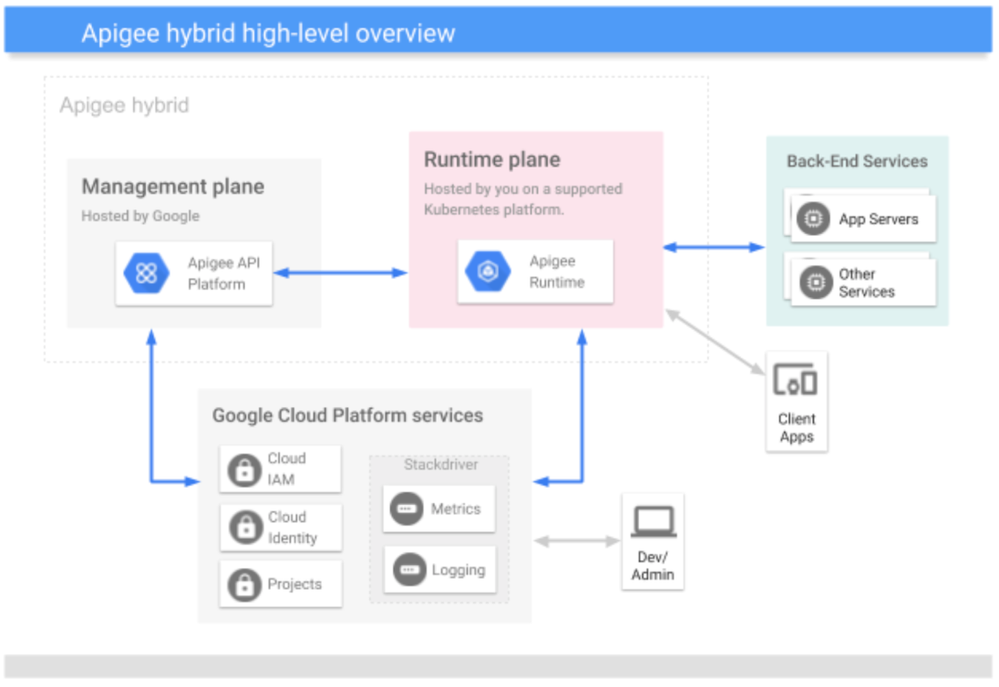

The diagram below shows a high-level layout of the components in Apigee hybrid.

As shown in the diagram above, Apigee hybrid consists of the following primary components:

Apigee-run management plane: A set of services hosted in Google Cloud and maintained by Google. These services include the UI, management API, and analytics.

Customer-managed runtime plane: A set of containerized runtime services that you set up and maintain in your own Kubernetes cluster. All API traffic passes through and is processed within the runtime plane.

You manage the containerized runtime on your Kubernetes cluster for greater agility with staged rollouts, auto-scaling, and other operational benefits of containers.

One key thing to know about Apigee hybrid is that all API traffic is processed within the boundaries of your network and under your control, while management services such as the UI and API analytics run in the cloud and are maintained by Google.

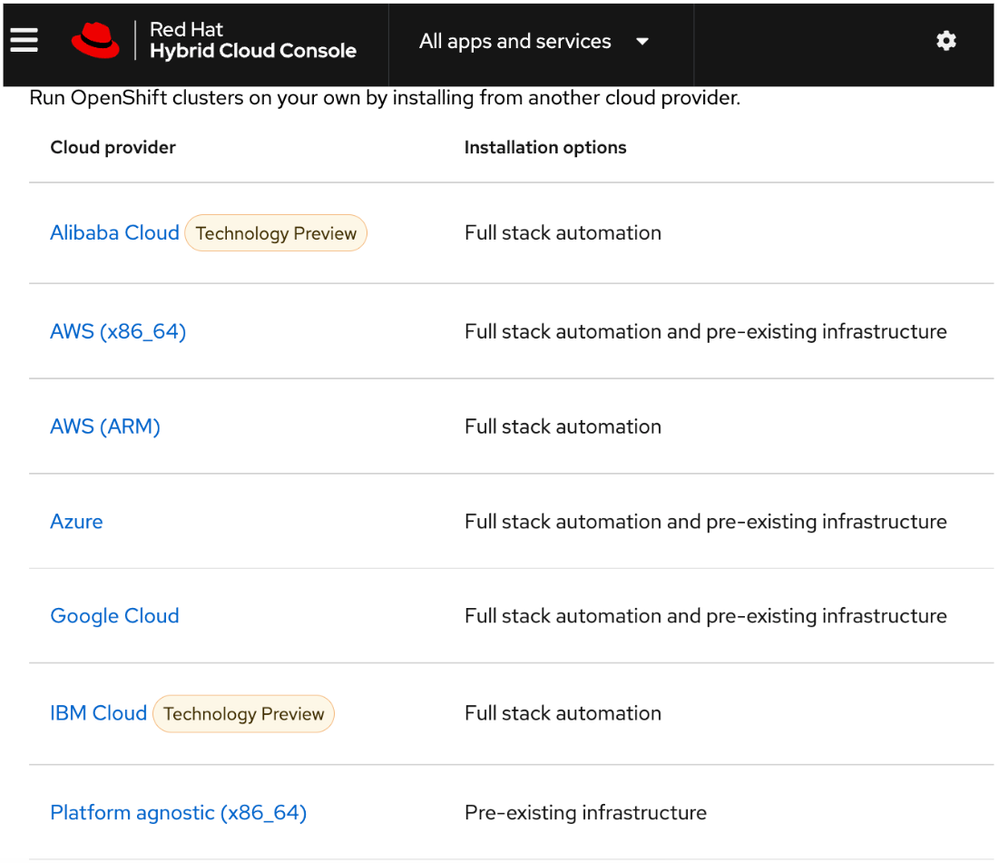

In the case described in this article, you will deploy the runtime plane on the OpenShift platform. OpenShift is Red Hat’s service for deploying Kubernetes clusters on-premise or in the cloud. As shown here, it can be deployed in various cloud solutions:

Prerequisites for OpenShift IPI (Installer Provisioned Infrastructure)

Before starting the OpenShift installation steps, complete these prerequisites:

- Create a Public zone in Cloud DNS. Note the domain used; it will be needed later in these steps.

- Ensure these Google Cloud organization-level requirements are met: remove the constraint on storage uniform bucket-level access, and increase the compute quota to 200.

- Define an environment variable for your hybrid project's ID:

$ export PROJECT_ID=<YOUR_PROJECT_ID>

- Ensure these APIs are enabled in Google Cloud for your hybrid project:

$ gcloud services enable \
    dns.googleapis.com \
    compute.googleapis.com \
    cloudresourcemanager.googleapis.com \
    cloudapis.googleapis.com \
    iam.googleapis.com \
    iamcredentials.googleapis.com \
    servicemanagement.googleapis.com \
    serviceusage.googleapis.com \
    storage-api.googleapis.com \
    storage-component.googleapis.com --project=$PROJECT_ID
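The API list above can also be kept in a small script, which makes it easier to review and re-run. The following is a minimal sketch rather than an official procedure: the `enable_apis` function and the `DRY_RUN` switch are names invented for this example, and only `gcloud services enable` and the API names come from the step above. With `DRY_RUN=1` (the default here) the script only prints the command it would run.

```shell
# Sketch: enable the APIs listed above for the hybrid project.
# DRY_RUN is a hypothetical safety switch: 1 (default) prints, 0 executes.
DRY_RUN="${DRY_RUN:-1}"

APIS="dns.googleapis.com
compute.googleapis.com
cloudresourcemanager.googleapis.com
cloudapis.googleapis.com
iam.googleapis.com
iamcredentials.googleapis.com
servicemanagement.googleapis.com
serviceusage.googleapis.com
storage-api.googleapis.com
storage-component.googleapis.com"

enable_apis() {
  project_id="$1"
  # $APIS is deliberately unquoted so that each API becomes its own argument.
  if [ "$DRY_RUN" = "1" ]; then
    echo gcloud services enable $APIS "--project=$project_id"
  else
    gcloud services enable $APIS "--project=$project_id"
  fi
}

# Falls back to the article's placeholder when PROJECT_ID is not exported.
enable_apis "${PROJECT_ID:-<YOUR_PROJECT_ID>}"
```

Run it once with the default to check the printed command, then again with `DRY_RUN=0` to apply it.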

Step 1 - Create an OpenShift Account

The first step is to set up an account on openshift.redhat.com. Start a trial and select the self-managed option.

Step 2 - Under Run it yourself, select the Google Cloud option

Find the Run it yourself option in the OpenShift interface and select Google Cloud.

Step 3 - Download and install the OpenShift installer and CLI

You'll need both the OpenShift installer and CLI on your runtime plane.

- Download both files (installer and CLI) from here and untar the tar files: https://mirror.openshift.com/pub/openshift-v4/x86_64/clients/ocp/. (Go to the folder matching the version you're installing.)

- Follow the Red Hat OpenShift installation instructions, section 4.3, to untar the files and add the folder containing the OpenShift CLI to your PATH.
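The download and unpack steps can be sketched as follows. This assumes a Linux x86_64 workstation and that the release folder contains archives named `openshift-install-linux.tar.gz` and `openshift-client-linux.tar.gz`; check the folder listing for your version before relying on those names. `OCP_VERSION`, `archive_url`, and `fetch_tools` are hypothetical names used only in this example.

```shell
# Sketch: fetch and unpack the installer and CLI for one OpenShift release.
# OCP_VERSION is an assumption; set it to the release folder you are installing.
OCP_VERSION="${OCP_VERSION:-4.7.0}"
MIRROR="https://mirror.openshift.com/pub/openshift-v4/x86_64/clients/ocp"

# Print the download URL for one archive of the chosen release.
archive_url() {
  echo "$MIRROR/$OCP_VERSION/$1"
}

# Download both archives into the current directory and extract them.
fetch_tools() {
  for archive in openshift-install-linux.tar.gz openshift-client-linux.tar.gz; do
    curl -fLO "$(archive_url "$archive")" || return 1
    tar -xzf "$archive" || return 1
  done
}

# Show the installer URL first; uncomment the next two lines to download
# for real and put the extracted binaries on your PATH.
archive_url openshift-install-linux.tar.gz
# fetch_tools
# export PATH="$PWD:$PATH"
```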

Step 4 - Create a service account in GCP and give it a number of permissions

Create the service account and download its JSON key file. The required permissions are listed in table 4.3 at this link.
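One way to script this step with `gcloud` is sketched below. The service account name, the key file path, and the `run` and `create_installer_sa` helpers are hypothetical. The two roles passed at the end are examples only and are not the complete list; take the full set from the permissions table referenced above. By default the sketch prints each command instead of running it.

```shell
# Sketch: create the installer service account, grant roles, download a key.
# SA_NAME and KEY_FILE are hypothetical names chosen for this example.
PROJECT_ID="${PROJECT_ID:-<YOUR_PROJECT_ID>}"
SA_NAME="${SA_NAME:-openshift-installer}"
KEY_FILE="${KEY_FILE:-$HOME/openshift-sa-key.json}"
SA_EMAIL="$SA_NAME@$PROJECT_ID.iam.gserviceaccount.com"

# Print each command; also execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; fi
}

# Arguments: the IAM roles to grant to the service account.
create_installer_sa() {
  run gcloud iam service-accounts create "$SA_NAME" --project="$PROJECT_ID"
  for role in "$@"; do
    run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
      --member="serviceAccount:$SA_EMAIL" --role="$role"
  done
  run gcloud iam service-accounts keys create "$KEY_FILE" --iam-account="$SA_EMAIL"
}

# Example roles only; replace with the roles from the permissions table.
create_installer_sa roles/compute.admin roles/dns.admin
```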

Step 5 - Set the Google Credentials variable

Set an environment variable for the Google credentials with the full path to your service account private key file:

$ export GOOGLE_APPLICATION_CREDENTIALS="<YOUR_SERVICE_ACCOUNT_FULL_PATH>"
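Before starting the installer, it can help to confirm that the variable points at a usable key file. The check below is a small sketch, not part of the official procedure; `check_credentials` is a hypothetical helper. It relies on the fact that a service account key file is JSON containing a `client_email` field.

```shell
# Sketch: confirm GOOGLE_APPLICATION_CREDENTIALS points at a readable key file.
check_credentials() {
  key_path="${GOOGLE_APPLICATION_CREDENTIALS:-}"
  if [ -z "$key_path" ]; then
    echo "GOOGLE_APPLICATION_CREDENTIALS is not set" >&2
    return 1
  fi
  if [ ! -r "$key_path" ]; then
    echo "Key file not readable: $key_path" >&2
    return 1
  fi
  # A service account key is JSON that contains a "client_email" field.
  if ! grep -q '"client_email"' "$key_path"; then
    echo "File does not look like a service account key: $key_path" >&2
    return 1
  fi
  echo "Credentials file looks usable: $key_path"
}

check_credentials || echo "Fix the variable before running the installer."
```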

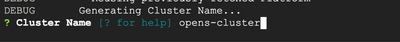

Step 6 - Create the OpenShift cluster

Create your OpenShift cluster by running this command:

$ ./openshift-install create cluster --dir <installation_directory> --log-level=debug

Use these values:

<installation_directory>: Specify the location of your customized ./install-config.yaml file.

--log-level=debug: To view different installation details, specify warn, debug, or error instead of info.

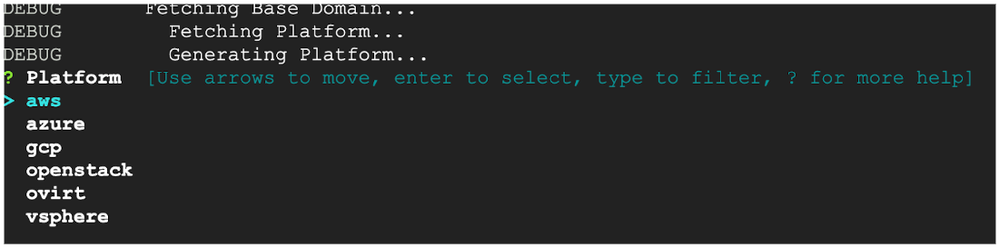

When you run the above command, select these options:

1. Select SSH Public Key. This key will be permitted to SSH to OpenShift host machines. Select the openshift.pub key and continue.

2. Select the Platform. Select GCP from the list and continue.

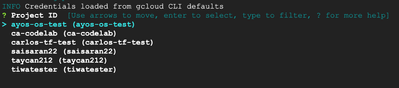

3. Select the GCP Project ID for this hybrid project that you want to set up with OpenShift.

4. Select the GCP region.

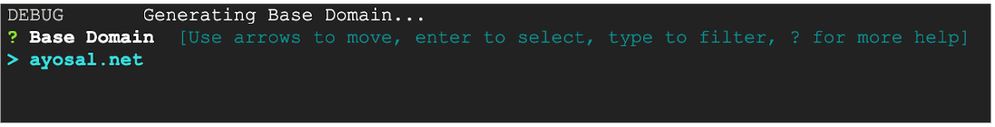

5. Select the domain you created as the public zone in Cloud DNS.

6. Select the name of your OpenShift cluster.

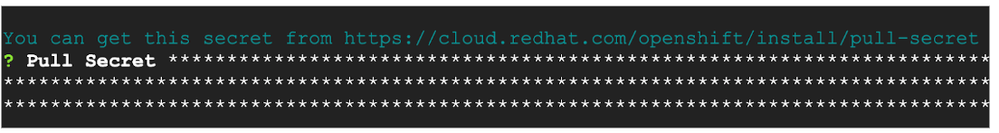

7. Retrieve your pull secret from your OpenShift account. (You must be logged in to Red Hat for this link to work.) Copy your pull secret into your OpenShift installation.

8. Once you've selected all options, the cluster resources are created using Terraform bootstrapping. The process generates a username, password, and login URL for your OpenShift cluster UI, which are returned to you. (Note that the cluster creation process can take 35 - 45 minutes to complete.)

When the cluster creation process completes, log in to your OpenShift UI with the credentials provided.
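To use the command line instead of the UI, you can point `oc` and `kubectl` at the kubeconfig file that the installer writes under `auth/` in the installation directory. The helper below is a minimal sketch; `use_cluster_kubeconfig` and `INSTALL_DIR` are hypothetical names for this example.

```shell
# Sketch: point the CLI at the cluster the installer just created.
use_cluster_kubeconfig() {
  install_dir="$1"
  kubeconfig="$install_dir/auth/kubeconfig"
  if [ ! -f "$kubeconfig" ]; then
    echo "No kubeconfig found at $kubeconfig" >&2
    return 1
  fi
  KUBECONFIG="$kubeconfig"
  export KUBECONFIG
  echo "KUBECONFIG set to $KUBECONFIG"
}

# INSTALL_DIR is a hypothetical variable holding your <installation_directory>.
use_cluster_kubeconfig "${INSTALL_DIR:-.}" || echo "Run this after the installer finishes."
# Then, for example: oc whoami
```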

Step 7 - Create node pools (Machine-sets in OpenShift)

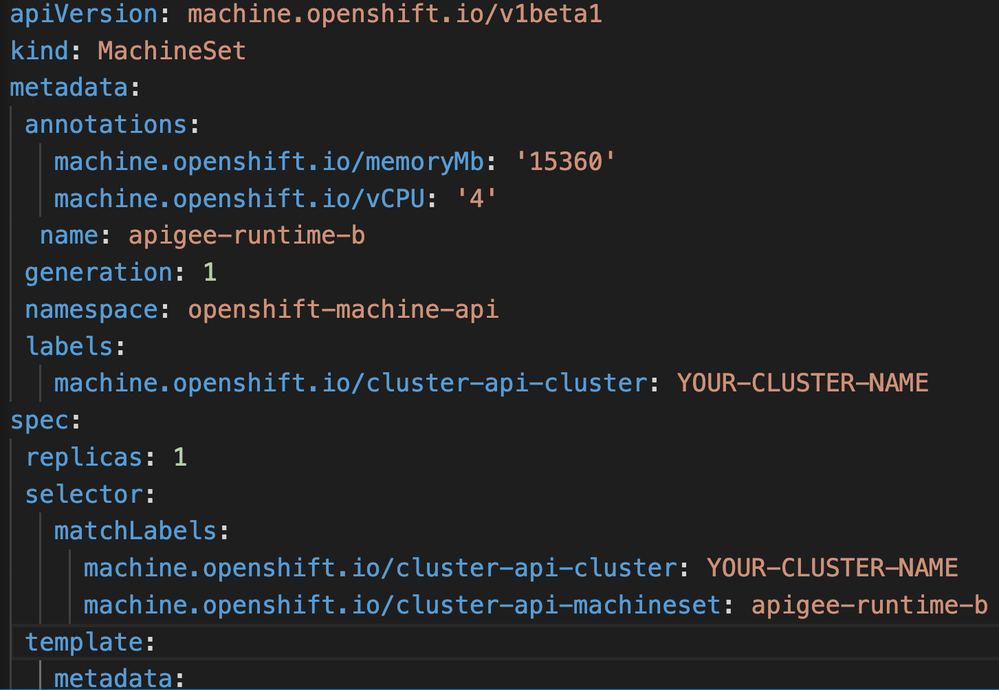

After logging in to the UI you create node pools, which are machine sets in OpenShift.

The Apigee hybrid cluster creation instructions describe creating two node pools: one for apigee-data and one for apigee-runtime.

On OpenShift, however, you create a separate machine set for each node in a node pool. Each node pool requires three nodes, so create six machine sets in total: three for apigee-data and three for apigee-runtime.

Note: Create each machine set in a different Availability Zone.

1. From the OpenShift GUI, go to Compute on the left panel. Select Machine Sets, then select any existing machine set.

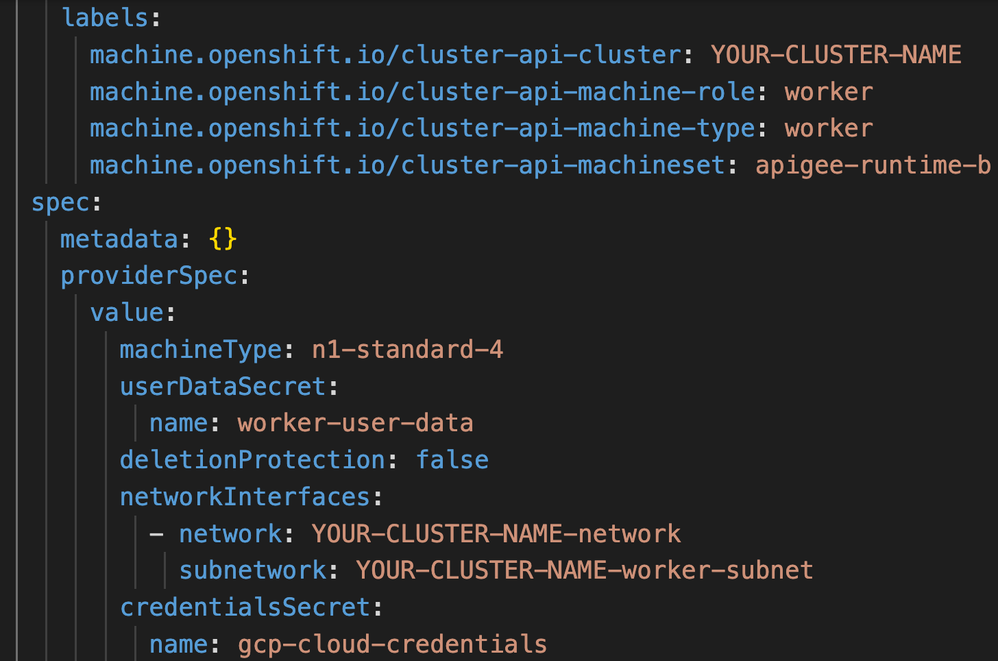

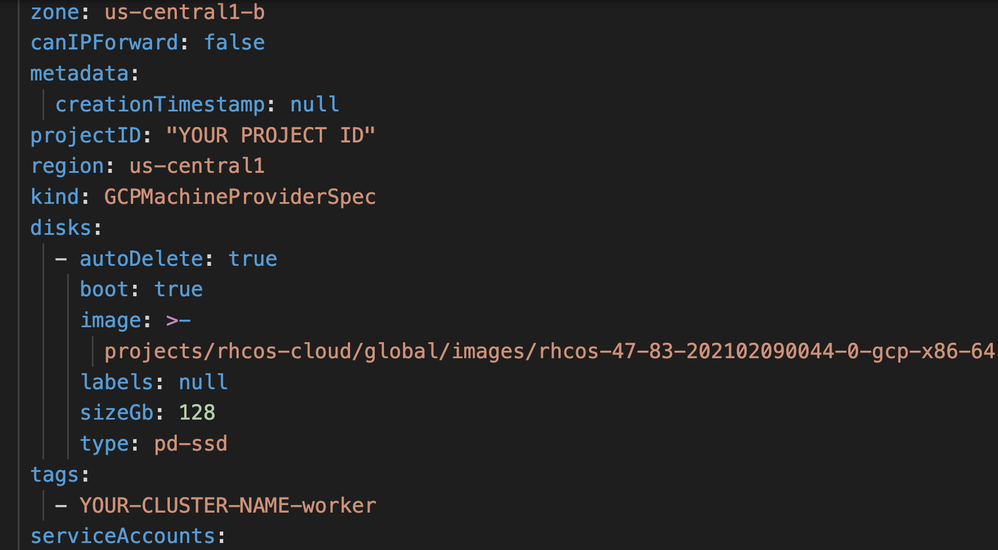

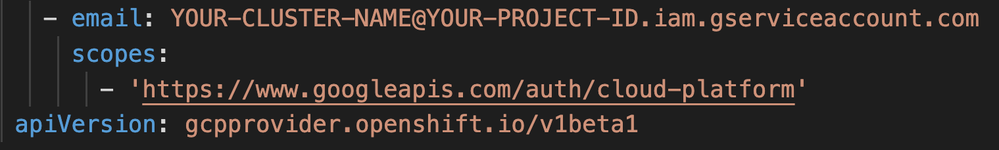

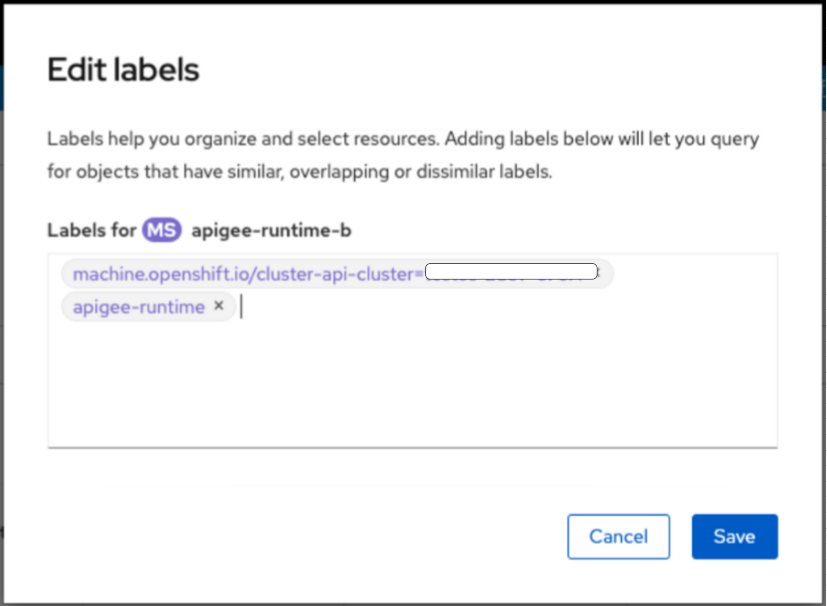

2. Edit the YAML for the machine set to reflect the name, apply the label (apigee-data or apigee-runtime), set the machine type (e.g., n1-standard-4), and set the compute zone (e.g., us-east1-b).

3. Edit the labels for each machine set so that they read apigee-runtime or apigee-data, as shown in the machine set YAML below. For example, change the name and the machineset names to apigee-runtime-b, where apigee-runtime is the label being used and b is the availability zone in the selected region. Also configure the region and availability zone, as highlighted below.

Before proceeding, ensure the machines are created, provisioned, and in a running state with the labels applied.
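The fields edited in the machine set steps above can be sketched as a YAML excerpt generated per pool and zone. This is not a complete MachineSet manifest: the remaining fields (cluster labels, disks, network, service account, and so on) are assumed to be copied unchanged from an existing machine set. The node label key defaults to `cloud.google.com/gke-nodepool`, which is the default key of the Apigee hybrid node selector; that choice is an assumption here, so use whatever key your overrides file expects. `machineset_fields` and `NODE_LABEL_KEY` are hypothetical names.

```shell
# Sketch: print the machine set fields that the steps above ask you to change.
# NODE_LABEL_KEY is an assumption; match it to your Apigee node selector key.
NODE_LABEL_KEY="${NODE_LABEL_KEY:-cloud.google.com/gke-nodepool}"

machineset_fields() {
  pool="$1"          # apigee-data or apigee-runtime
  region="$2"        # for example us-east1
  zone_suffix="$3"   # for example b
  machine_type="$4"  # for example n1-standard-4
  cat <<EOF
metadata:
  name: ${pool}-${zone_suffix}
spec:
  replicas: 1
  selector:
    matchLabels:
      machine.openshift.io/cluster-api-machineset: ${pool}-${zone_suffix}
  template:
    metadata:
      labels:
        machine.openshift.io/cluster-api-machineset: ${pool}-${zone_suffix}
    spec:
      metadata:
        labels:
          ${NODE_LABEL_KEY}: ${pool}
      providerSpec:
        value:
          machineType: ${machine_type}
          region: ${region}
          zone: ${region}-${zone_suffix}
EOF
}

# One excerpt per machine set; repeat for each pool and zone.
machineset_fields apigee-runtime us-east1 b n1-standard-4
```

Each machine set uses `replicas: 1` because one machine set is created per node, with each set placed in a different zone.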

Validation

Once this is complete, you can validate that the nodes have been created and are in a running state:

kubectl get nodes
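To check a whole pool at once, the node listing can be filtered by label and the Ready nodes counted. The helper below is a sketch: `count_ready_nodes` is a hypothetical name, the node names in the sample are made up, and the label in the final comment assumes the nodes carry a label such as `cloud.google.com/gke-nodepool=apigee-data`.

```shell
# Sketch: count Ready nodes in `kubectl get nodes --no-headers` output.
count_ready_nodes() {
  # Column 2 is STATUS; count rows where it is exactly "Ready".
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example with captured sample output (node names are made up); prints 2.
count_ready_nodes <<'EOF'
apigee-data-b-x1   Ready      worker   10m   v1.20.0
apigee-data-c-x2   Ready      worker   10m   v1.20.0
apigee-data-d-x3   NotReady   worker   2m    v1.20.0
EOF

# Against a live cluster, assuming that label key and value:
# kubectl get nodes -l cloud.google.com/gke-nodepool=apigee-data --no-headers | count_ready_nodes
```

Each pool should report three Ready nodes before you continue.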

You can now proceed with the steps to install Apigee hybrid on your new OpenShift cluster on GCP VMs at this link.

References

Below are links to some useful topics related to Apigee hybrid:

- Apigee Hybrid Install Big Picture

- GCP Onboarding Checklist

- Apigee Hybrid Prereq

- Downloading Hybrid Images

- Understanding Peering Ranges

- Ports and Setting up firewalls

- GCP URL's for Hybrid Install

- Apigee Limits

- Apigee Hybrid Cloud Training

- Develop and Secure APIs with Apigee X

- Apigee Hybrid Multiregion Deployment

- Apigee Hybrid Ports


Hello Ayos

I am provisioning my DRP cluster with OpenShift (apigeectl 1.11 was used to provision both clusters). Everything works up to the point of performing Cassandra replication:

Multi-region deployment | Apigee | Google Cloud

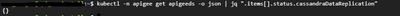

When I apply my datareplication.yaml file, I don't have any errors, but when I execute the command to check the status:

kubectl -n apigee get apigeeds -o json | jq ".items[].status.cassandraDataReplication"

I get empty JSON.

As I understand the documentation, the command should return JSON with data.

I want to migrate the charts to Helm and upgrade to 1.12, but I don't want to proceed given this scenario.

Is there anything additional that needs to be configured in the clusters?


Hi,

Thanks for reaching out.

Do you see any errors in the logs of the Cassandra pods or the controller manager pod?

Did you validate network connectivity between both regions?

A playbook on data replication issues is available here.

Also, here is the Cassandra troubleshooting guide.

If these don't help, I would suggest opening a support ticket so that someone can look into the issue. You can also share the ticket here so I can have a look later on.

Attached here are the considerations before upgrading a multi-region installation.
