- Google Cloud

- Cloud Forums

- Apigee

- "404 Request couldn't be served" when calling BigQ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am getting the following response from calling BigQuery REST API from Apigee:

{"error":{"code":404,"message":"Request couldn't be served.",

"errors":[{"message":"Request couldn't be served.","domain":"global","reason":"notFound"}],"status":"NOT_FOUND"}}This is what I have to build the query payload and target endpoint flow:

Assign message policy: AM-BuildCalendarQuery

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<AssignMessage async="false" continueOnError="false" enabled="true" name="AM-BuildCalendarQuery">

<DisplayName>AM-BuildCalendarQuery</DisplayName>

<Properties/>

<Set>

<Verb>GET</Verb>

<Payload contentType="application/json">

{

"kind": "bigquery#queryRequest",

"query": "SELECT SUBSTR(CAL_ID,6,3) as state,formatted_date, FORMAT_DATE('%Y', formatted_date) as year ,

FORMAT_DATE('%Q', formatted_date) as quarter

FROM `<my-project-id>.TestDataset.calendar_vw` where CAL_ID like 'A24AU%'

and FORMAT_DATE('%Y', formatted_date) = '2024' order by state, formatted_date asc",

"defaultDataset": {

"datasetId": "TestDataset",

"projectId": "<my-project-id>"

},

"useQueryCache": true,

"useLegacySql": false,

"location": "australia-southeast1",

"timeoutMs": 60000

}

</Payload>

</Set>

<IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables>

<AssignTo createNew="false" transport="http" type="request"/>

</AssignMessage>

And the target endpoint flow:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<TargetEndpoint name="default">

<PreFlow name="PreFlow">

<Request/>

<Response/>

</PreFlow>

<Flows/>

<PostFlow name="PostFlow">

<Request/>

<Response/>

</PostFlow>

<HTTPTargetConnection>

<Authentication>

<GoogleAccessToken>

<Scopes>

<Scope>https://www.googleapis.com/auth/cloud-platform</Scope>

</Scopes>

<LifetimeInSeconds ref="{variable}">3600</LifetimeInSeconds>

</GoogleAccessToken>

</Authentication>

<SSLInfo>

<Enabled>true</Enabled>

<IgnoreValidationErrors>true</IgnoreValidationErrors>

</SSLInfo>

<URL>https://bigquery.googleapis.com/bigquery/v2/projects/<my-project-id>/queries</URL>

</HTTPTargetConnection>

</TargetEndpoint>

I ran the same request payload and target endpoint URL on https://cloud.google.com/bigquery/docs/reference/rest/v2/jobs/query and it returns the resultset successfully.

So what does this "404 Request couldn't be served" mean? I couldn't find any info from the google site. Could it be a permission issue?

- Labels:

-

Apigee X

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

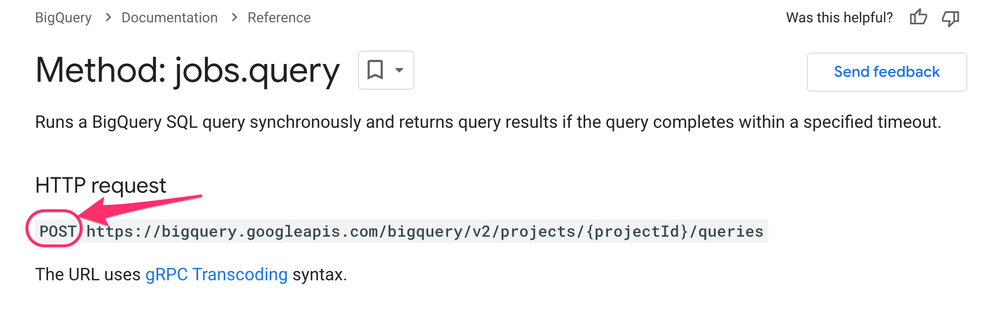

Could be permissions? But

the first thing that jumps out to me is, in your policy AM-BuildCalendarQuery, you are setting the verb to GET. The documentation for the BQ API you cited, states that the request should be POST.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, @dchiesa1 . I spotted that too and changed it to POST but still got the same 404 response. I also tried putting the access_token generated with a personal account with bigquery access to the datasource like this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<AssignMessage continueOnError="false" enabled="true" name="AM-BuildCalendarQuery">

<DisplayName>AM-BuildCalendarQuery</DisplayName>

<Properties/>

<Set>

<Verb>POST</Verb>

<Headers>

<Header name="authorization">Bearer [access_token]</Header>

</Headers>

<Payload contentType="application/json">

{

"kind": "bigquery#queryRequest",

"query": "SELECT SUBSTR(CAL_ID,6,3) as state,formatted_date, FORMAT_DATE('%Y', formatted_date) as year , FORMAT_DATE('%Q', formatted_date) as quarter FROM `<project_id>.TestDataset.calendar_vw` where CAL_ID like 'A24AU%' and FORMAT_DATE('%Y', formatted_date) = '2024' order by state, formatted_date asc",

"defaultDataset": {

"datasetId": "TestDataset",

"projectId": "<project_id>"

},

"useQueryCache": true,

"useLegacySql": false,

"location": "australia-southeast1",

"timeoutMs": 60000

}

</Payload>

</Set>

<IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables>

<AssignTo createNew="true" transport="http" type="request"/>

</AssignMessage>

It also returned with same 404 error.

My question is

1. if this is actually a permission issue or something else? Do I have to grant bigquery job create and viewer to the service account that runs this proxy in order to access the REST API from Apigee?

2. where can I find additional information about the error responded from these REST API? I couldn't seem to find it with the API docs.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am not a BQ expert, but I think you need to grant the role bigquery.jobUser to the service account:

gcloud projects add-iam-policy-binding "${PROJECT_ID}" \

--member="serviceAccount:${SVCACCT_EMAIL}" \

--role="roles/bigquery.jobUser"

But when I try this with a service account that does not have the jobUser role, I get this:

{

"error": {

"code": 403,

"message": "Access Denied: Project PROJECT: User does not have bigquery.jobs.create permission in project PROJECT.",

...

So it seems unlikely that your 404 is due to a permissions issue.

Some other questions

- is formatted_date a column? It seems like your other date fields (year, quarter) are all the result of the FORMAT_DATE() function.

-

Are you actually setting the token correctly? This part looks suspect:

<Headers> <Header name="authorization">Bearer [access_token]</Header> </Headers>If you want variable substitution, you need to use curlies, not square brackets:

Bearer {access_token}. But again, that seems less likely, because I get a 401, not a 404, when I send an access token of exactly[access_token]. -

Why wouldn't you just use the builtin auto-authentication that Apigee provides? You can follow this guide.

... <HTTPTargetConnection> <!-- tell Apigee to invoke this with a Google Access Token --> <Authentication> <GoogleAccessToken> <Scopes> <Scope>https://www.googleapis.com/auth/cloud-platform</Scope> </Scopes> </GoogleAccessToken> </Authentication> -

When I tried this, I added an Accept header. Not sure if this would affect you.

<Headers> <Header name="accept">application/json</Header> </Headers>

The other references I found to "Request couldn't be served" that were BigQuery things, didn't provide a clear resolution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- is formatted_date a column? It seems like your other date fields (year, quarter) are all the result of the FORMAT_DATE() function.

- Are you actually setting the token correctly? This part looks suspect:

- Why wouldn't you just use the builtin auto-authentication that Apigee provides? You can follow this guide.

...

<HTTPTargetConnection>

<!-- tell Apigee to invoke this with a Google Access Token -->

<Authentication>

<GoogleAccessToken>

<Scopes>

<Scope>https://www.googleapis.com/auth/cloud-platform</Scope>

</Scopes>

</GoogleAccessToken>

</Authentication>

<Headers>

<Header name="accept">application/json</Header>

</Headers>

- The other references I found to "Request couldn't be served" that were BigQuery things, didn't provide a clear resolution.

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<ServiceCallout continueOnError="false" enabled="true" name="SC-TestCallout">

<DisplayName>SC-TestCallout</DisplayName>

<Properties/>

<Request>

<Set>

<Headers>

<Header name="authorization">Bearer [access_code]</Header>

</Headers>

<Verb>POST</Verb>

<Payload contentType="application/json">

{

"kind": "bigquery#queryRequest",

"query": "SELECT SUBSTR(CAL_ID,6,3) as state,formatted_date, FORMAT_DATE('%Y', formatted_date) as year , FORMAT_DATE('%Q', formatted_date) as quarter FROM `<project_id>.TestDataset.calendar_vw` where CAL_ID like 'A24AU%' and FORMAT_DATE('%Y', formatted_date) = '2024' order by state, formatted_date asc",

"defaultDataset": {

"datasetId": "TestDataset",

"projectId": "<project_id>"

},

"useQueryCache": true,

"useLegacySql": false,

"location": "australia-southeast1",

"timeoutMs": 60000

}

</Payload>

</Set>

<IgnoreUnresolvedVariables>false</IgnoreUnresolvedVariables>

</Request>

<Response>calloutResponse</Response>

<HTTPTargetConnection>

<Properties/>

<URL>https://bigquery.googleapis.com/bigquery/v2/projects/<project_id>/queries</URL>

</HTTPTargetConnection>

</ServiceCallout>

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't know what the problem could be. If this were my problem, I would break it down, simplify it, until I can get a request that returns successfully. You have started to do that, by hard-coding the projectid and the token value into the Apigee policies. But maybe you can continue along that path, and simplify even more. For example, use a simpler query, use a public dataset, etc etc.

Maybe the query with %Y and etc is somehow not getting formatted properly. Maybe you need to surround the Payload content with a cdata section. Like this:

<Request>

<Set>

<Headers>

<Header name="authorization">Bearer [access_code]</Header>

</Headers>

<Verb>POST</Verb>

<Payload contentType="application/json"><![CDATA[

{

"kind": "bigquery#queryRequest",

"query": "SELECT SUBSTR(CAL_ID,6,3) as state,formatted_date, FORMAT_DATE('%Y', formatted_date) as year , FORMAT_DATE('%Q', formatted_date) as quarter FROM `<project_id>.TestDataset.calendar_vw` where CAL_ID like 'A24AU%' and FORMAT_DATE('%Y', formatted_date) = '2024' order by state, formatted_date asc",

"defaultDataset": {

"datasetId": "TestDataset",

"projectId": "<project_id>"

},

"useQueryCache": true,

"useLegacySql": false,

"location": "australia-southeast1",

"timeoutMs": 60000

}

]]></Payload>Here is a repo with a generator tool, that generates API proxy bundles from templates. The example templates call into BQ, using parameterized queries. The tool is not the interesting part for you; the proxy that the tool generates might be interesting, because it might provide a working example of an API proxy that connects to BigQuery, something you can deploy into your own Apigee environment, test and run, and then see if it works. If that one works, then you can modify it in steps so that eventually it matches what you want.

The queries in the templates don't use date formatting; an example of the payload that the proxy sends to BQ looks like this:

{

"query": "SELECT airline, count(*) AS total_count FROM `bigquery-samples.airline_ontime_data.flights` WHERE departure_airport = @airport AND date = @departure_date GROUP BY airline",

"queryParameters": [

{

"parameterType": {

"type": "STRING"

},

"parameterValue": {

"value": "EWR"

},

"name": "airport"

},

{

"parameterType": {

"type": "STRING"

},

"parameterValue": {

"value": "2010-02-14"

},

"name": "departure_date"

}

],

"useLegacySql": false,

"parameterMode": "NAMED"

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your reply.

I went back to create a trial GCP account and start everything over again with my own GCP environment. It works!

Here are the steps I follow:

1. In the Apigee project (e.g. custom-altar-408201), create a service account (e.g. apigee-sa@custom-altar-408201.iam.gserviceaccount.com) .

2. In the BigQuery project (e.g. glass-scanner-408200), grant the service account (e.g. apigee-sa@custom-altar-408201.iam.gserviceaccount.com) with the following roles:

BigQuery Data Viewer

BigQuery Job User

3. Create a Apigee proxy with AssignMessage to build the request message (simple SQL):

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<AssignMessage continueOnError="false" enabled="true" name="AM-BuildCalendarQuery">

<DisplayName>AM-BuildCalendarQuery</DisplayName>

<Properties/>

<Set>

<Verb>POST</Verb>

<Headers>

<Header name="accept">application/json</Header>

<Header name="content-type">application/json</Header>

</Headers>

<Payload contentType="application/json">

{

"kind": "bigquery#queryRequest",

"query": "SELECT count(*) FROM `glass-scanner-408200.testdataset.calendar`",

"useQueryCache": true,

"useLegacySql": false,

"location": "australia-southeast1",

"timeoutMs": 60000

}

</Payload>

</Set>

<IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables>

<AssignTo createNew="true" transport="http" type="request"/>

</AssignMessage>

and configure the proxy endpoint:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<ProxyEndpoint name="default">

<PreFlow name="PreFlow">

<Request>

<Step>

<Name>AM-BuildCalendarQuery</Name>

</Step>

</Request>

<Response/>

</PreFlow>

<Flows/>

<PostFlow name="PostFlow">

<Request/>

<Response/>

</PostFlow>

<HTTPProxyConnection>

<BasePath>/calendar</BasePath>

<VirtualHost>default</VirtualHost>

</HTTPProxyConnection>

<RouteRule name="default">

<TargetEndpoint>default</TargetEndpoint>

</RouteRule>

</ProxyEndpoint>

and target endpoint:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<TargetEndpoint name="default">

<Description/>

<FaultRules/>

<PreFlow name="PreFlow">

<Request/>

<Response/>

</PreFlow>

<PostFlow name="PostFlow">

<Request/>

<Response/>

</PostFlow>

<Flows/>

<HTTPTargetConnection>

<Authentication>

<GoogleAccessToken>

<Scopes>

<Scope>https://www.googleapis.com/auth/cloud-platform</Scope>

</Scopes>

<LifetimeInSeconds ref="{variable}">3600</LifetimeInSeconds>

</GoogleAccessToken>

</Authentication>

<SSLInfo>

<Enabled>true</Enabled>

<IgnoreValidationErrors>true</IgnoreValidationErrors>

</SSLInfo>

<URL>https://bigquery.googleapis.com/bigquery/v2/projects/glass-scanner-408200/queries</URL>

</HTTPTargetConnection>

</TargetEndpoint>

4. Deploy the proxy as apigee-sa@custom-altar-408201.iam.gserviceaccount.com

and it works with 200 OK!

Refer to this page https://cloud.google.com/bigquery/docs/error-messages for BQ error, 404 NOT FOUND error is:

| notFound | 404 | This error returns when you refer to a resource (a dataset, a table, or a job) that doesn't exist, or when the location in the request does not match the location of the resource (for example, the location in which a job is running). This can also occur when using table decorators to refer to deleted tables that have recently been streamed to. | Fix the resource names, correctly specify the location, or wait at least 6 hours after streaming before querying a deleted table. |

I checked the resources and location and they are all good. This is only happening in our corporate account environment. Is there any chance Apigee can alter the request somehow before sending to BQ API in our corporate account environment?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

OK that's progress.

Is there any chance Apigee can alter the request somehow before sending to BQ API in our corporate account environment?

I wouldn't think so. I don't think there is anything special Apigee would be doing in your corp environment to change the request. But obviously something is different.

Looking at the query you tried in your own trial environment, it is different than the one that is not successful in the corp environment. Is that correct?

I mean I think you are using this from Apigee in the trial environment

SELECT count(*) FROM `glass-scanner-408200.testdataset.calendar`

whereas you are using THiS more elaborate query in the corp environment

SELECT SUBSTR(CAL_ID,6,3) as state,formatted_date, FORMAT_DATE('%Y', formatted_date) as year ,

FORMAT_DATE('%Q', formatted_date) as quarter

FROM `PROJECT_ID.TestDataset.calendar_vw`

where CAL_ID like 'A24AU%' and FORMAT_DATE('%Y', formatted_date) = '2024' order by state, formatted_date asc

Did you try the simpler query in the corporate environment?

Did you try the CDATA thing I suggested?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your reply.

I tried the CDATA to wrap around the query payload like this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<AssignMessage continueOnError="false" enabled="true" name="AM-BuildA24CalendarQuery">

<DisplayName>AM-BuildA24CalendarQuery</DisplayName>

<Properties/>

<Set>

<Verb>POST</Verb>

<Headers>

<Header name="accept">application/json</Header>

<Header name="content-type">application/json</Header>

</Headers>

<Payload contentType="application/json"><![CDATA[

{

"kind": "bigquery#queryRequest",

"query": "SELECT count(*) FROM `<projectId>.TestDataset.a24_nontradingdates`",

"useQueryCache": true,

"useLegacySql": false,

"location": "australia-southeast1",

"timeoutMs": 60000

}

]]></Payload>

</Set>

<IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables>

<AssignTo createNew="true" transport="http" type="request"/>

</AssignMessage>and also simplified the query to just return a count on a table in both trial and corp environment. In the corp environment, the proxy is stilling returning the same "404 Request couldn't be served" error.

In my corp environment, I then create a skinny version of the proxy like I did in my trial environment from ground up with only 1) an AssignMessage policy to set the request message and 2) target endpoint to point to the BQ REST API of the target BQ project. Surprisingly, this time I got a different error:

{

"error": {

"code": 403,

"message": "BigQuery API has not been used in project 1041173351815 before or it is disabled. Enable it by visiting https://console.developers.google.com/apis/api/bigquery.googleapis.com/overview?project=1041173351815 then retry. If you enabled this API recently, wait a few minutes for the action to propagate to our systems and retry.",

"errors": [

{

"message": "BigQuery API has not been used in project 1041173351815 before or it is disabled. Enable it by visiting https://console.developers.google.com/apis/api/bigquery.googleapis.com/overview?project=1041173351815 then retry. If you enabled this API recently, wait a few minutes for the action to propagate to our systems and retry.",

"domain": "usageLimits",

"reason": "accessNotConfigured",

"extendedHelp": "https://console.developers.google.com"

}

],

"status": "PERMISSION_DENIED",

"details": [

{

"@type": "type.googleapis.com/google.rpc.Help",

"links": [

{

"description": "Google developers console API activation",

"url": "https://console.developers.google.com/apis/api/bigquery.googleapis.com/overview?project=1041173351815"

}

]

},

{

"@type": "type.googleapis.com/google.rpc.ErrorInfo",

"reason": "SERVICE_DISABLED",

"domain": "googleapis.com",

"metadata": {

"service": "bigquery.googleapis.com",

"consumer": "projects/1041173351815"

}

}

]

}

}which suggests I need to enable the BigQuery API in the Apigee project to call the BQ Rest API from Apigee as target endpoint. Is it a pre-requisite for Apigee to call BQ API as target endpoint?

While we are waiting for the BQ API to be enabled, I'm still trying to figure out:

1. Why I got different responses in my first proxy and the "skinny" proxy I created later on.

2. I also tried using the BQ Rest API using ServiceCallout policy and it works. If I have to enable BQ API in order to use it as target endpoint for Apigee, why ServiceCallout policy works but not as target endpoint? I would only imagine I need to enable BQ API in the BQ project because the target endpoint is referring to the BQ project in the URL. Why the Apigee project also have to be enabled with BQ API?

-

Analytics

497 -

API Hub

75 -

API Runtime

11,665 -

API Security

178 -

Apigee General

3,041 -

Apigee X

1,288 -

Developer Portal

1,910 -

Drupal Portal

43 -

Hybrid

463 -

Integrated Developer Portal

89 -

Integration

310 -

PAYG

13 -

Private Cloud Deployment

1,069 -

User Interface

77

Twitter

Twitter