- Too much latency in a POST Request with image attac...

We are working with a proxy which is located in northamerica-northeast1.

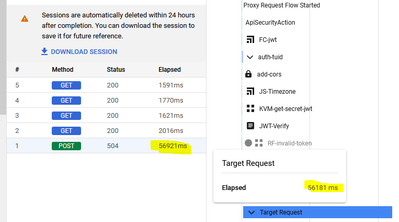

The problem is that the latency is very high, which causes errors when uploading images through this proxy (both via the REST API and from the frontend page).

The proxy currently communicates with a backend in Uruguay, which means the request has to travel back and forth almost 4 times along the path.

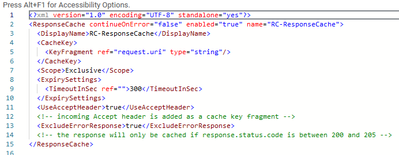

We are already using a cache policy; this is the policy:

It is attached in the Request PreFlow and the Response PostFlow.

But we think these times are impossible to work with. On premises it works really fast, but we want to run in the cloud with the backend on premises.

Is there a solution for this? Maybe an external Apigee tool for caching, or something to improve the file upload times.

Thanks.

- Labels: Apigee X


Where are the client apps (or users) for this API?

You mentioned a frontend page. Sounds like a Web UI. Are you running website requests directly through Apigee? That's not really what Apigee is intended for.

And you mentioned cache. The cache will help if the request to Apigee is a GET. I understood from your word "upload", and from the trace data showing a POST request, that the client is SENDING image data up into Apigee. The cache won't help with that, because each new upload of an image will be different. Cache will help with GET, but not POST.

Have you considered using Apigee as the control channel and having the upload go directly to some storage system, like Google Cloud Storage? It's a good pattern for large uploads that you want to protect with an API. In this pattern, the client sends a POST to Apigee, saying "I want to do an upload". Apigee can do its verification (JWT verify and so on), and when that succeeds, create a signed URL and send THAT back to the client. For this initial request/response, the client does not upload the data to Apigee. Instead, the client uploads the image to the signed URL, which points to a system that is not Apigee.

Google Cloud Storage has built-in support for signed URLs, as does AWS S3. You can configure Apigee to work with either of those. If you do not use one of those, you could build your own system that implements a similar model, if you are in control of the upstream image storage system in Uruguay.
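To make the "build your own" variant a bit more concrete, here is a minimal Python sketch of a signed upload URL, using only the standard library. It is an illustration of the general idea, not the Google Cloud Storage or AWS S3 signing scheme, and not something Apigee provides in this form. The shared `SECRET`, the host `storage.example.com`, and the function names are all hypothetical; in a real setup the URL would be issued only after the API layer has verified the caller, and the storage service in front of the backend would run the check.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical secret shared by the URL issuer and the storage service.
SECRET = b"replace-with-a-real-secret"


def _signature(method: str, path: str, expires: int) -> str:
    """HMAC-SHA256 over the HTTP method, object path, and expiry time."""
    message = f"{method}\n{path}\n{expires}".encode()
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def make_signed_upload_url(base_url: str, path: str,
                           ttl_seconds: int = 300,
                           now: Optional[float] = None) -> str:
    """Issue a short-lived URL that authorizes one PUT to `path`."""
    issued_at = now if now is not None else time.time()
    expires = int(issued_at) + ttl_seconds
    query = urlencode({
        "expires": expires,
        "sig": _signature("PUT", path, expires),
    })
    return f"{base_url}{path}?{query}"


def verify_signed_upload(method: str, url: str,
                         now: Optional[float] = None) -> bool:
    """Check run by the storage service before it accepts the upload."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False  # missing or malformed signature parameters
    current = now if now is not None else time.time()
    if current > expires:
        return False  # the URL has expired
    expected = _signature(method, parsed.path, expires)
    # Constant-time comparison avoids leaking signature bytes via timing.
    return hmac.compare_digest(sig, expected)
```

With something like this, the client would call the API once to get the URL, then PUT the image bytes straight to it, so the image itself does not pass through the proxy. If you use Google Cloud Storage or S3 instead, their own client libraries generate and verify the signed URLs for you.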


The APIs are hosted on premises.

That's right, we are also sending files from the website UI to Apigee, because the website is like a blog, so we allow users to post images and text.

We have already implemented caching policies, for GET requests only.

We need to save the images in external storage, so with your proposal I think we would save the image in Cloud Storage and then send it on to the external storage while the front page shows it to the user.

Our files range from 0 KB to 5 MB in size.

If so, would that process be faster than what we currently get through Apigee? And we should use a Cloud Function to run on an event after images are uploaded to Cloud Storage, right?
