Source Control Strategy for APIGEE - API Proxies, ...

Hi,

We are planning to develop a complete CI/CD solution for Apigee, and we have started with source code management and repository creation.

I was referring to: https://community.apigee.com/articles/34868/source-control-for-api-proxy-development.html

- I didn't understand whether we need to create a separate SCM project for each version of an API proxy.

- How should we handle source control for shared flows and configurations?

Our idea was to have one repo per API proxy that maintains all revisions, plus one common SCM repo for shared flows and one repo for all configurations. Would that work?

@Nagashree B, can you please tell me precisely how we should proceed with repo creation and folder structure?

Also, is there a good way to do revision control for the resources?

Are we on the right path?

Thanks,

Naveen

---

This is a great question. The recommendation is to use a single repo per proxy version. For example, if you have an API called "/v1/foo", create a repo called "Foo-v1". Similarly, when newer versions come up, create new repos such as "Foo-v2" and so on. This gives you complete control over who can access each repo, and you can build the pipeline, automation, etc. for each one independently. It also helps you retire or deprecate APIs in the future. Another important aspect is that you can change one API without impacting the others and get a complete audit trail of the changes through your SCM tool.
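To make the naming convention concrete, here is a minimal Python sketch that derives a repo name from an API base path. It assumes base paths of the form `/v<N>/<name>` and the `<Name>-v<N>` and `SF-<Purpose>` naming used in this reply; the function names are hypothetical, and nothing in Apigee itself enforces this convention.

```python
import re


def repo_name_for_base_path(base_path: str) -> str:
    """Map an API base path such as "/v1/foo" to a repo name such as "Foo-v1".

    Assumes the first path segment is the major version ("v<N>") and the
    second is the API name; this layout is illustrative, not an Apigee rule.
    """
    segments = [s for s in base_path.strip("/").split("/") if s]
    if len(segments) < 2 or not re.fullmatch(r"v\d+", segments[0]):
        raise ValueError(f"expected /v<N>/<name>, got {base_path!r}")
    version, name = segments[0], segments[1]
    return f"{name.capitalize()}-{version}"


def repo_name_for_shared_flow(purpose: str) -> str:
    """Shared flows get a repo of their own, e.g. "Security" -> "SF-Security"."""
    return f"SF-{purpose}"
```

For example, `repo_name_for_base_path("/v2/foo")` gives `"Foo-v2"`, so a new major version naturally lands in a new repo.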

For shared flows: since they are used across different proxies, I recommend putting them in a separate repo altogether. For example, if you have a shared flow (SF) for security, create a repo called "SF-Security" and use that. Each shared flow will have its own pipeline.

For configurations, I recommend keeping them in the same repo as the proxy/shared flow. You can create a resources directory and put all your configurations under that folder. If you are using the Maven plugin, you can refer to this sample to see how the plugin lets you organize the configurations and use them in your pipeline.

Some configurations need to be pushed before the API proxy is deployed (caches, target servers, etc.), and some are configured after the deployment (API products, apps, etc.). Your pipeline can control all of that.
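The ordering described above can be sketched as a small Python planner. The step names and grouping are assumptions for illustration: caches and target servers come from the reply, key value maps (KVMs) are assumed to be pre-deploy as well, and developers are placed in the post-deploy group because apps are registered against a developer and reference API products.

```python
# Hypothetical ordering; caches and target servers come from the reply,
# the KVM and developer entries are assumptions.
PRE_DEPLOY = ["targetservers", "caches", "keyvaluemaps"]
POST_DEPLOY = ["apiproducts", "developers", "apps"]  # apps reference products and developers


def plan_pipeline(config_kinds):
    """Order config pushes around the proxy deployment step."""
    kinds = set(config_kinds)
    unknown = kinds - set(PRE_DEPLOY) - set(POST_DEPLOY)
    if unknown:
        raise ValueError(f"unclassified config kinds: {sorted(unknown)}")
    steps = [f"config:{k}" for k in PRE_DEPLOY if k in kinds]
    steps.append("deploy:proxy")
    steps += [f"config:{k}" for k in POST_DEPLOY if k in kinds]
    return steps
```

Using explicit ordered lists, rather than sorting names, keeps dependencies such as "products before apps" intact.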

I have a sample repo with this setup (using a Jenkinsfile). You can try it out and let me know. It has an example of pushing some configurations (based on the environment) before deploying the API proxy. You can enhance it according to your requirements. This repo uses the Apigee Maven plugins for deployment and configurations.

---

Hi @Sai Saran Vaidyanathan,

Thanks for the quick response. I have some questions about this solution.

Suppose we have 50 API proxies. With one version each we will have 50 repos, and once version 2 arrives we will have 100.

So if we have to maintain at least 4 versions before deprecating any of them, we will have 200 repos.

Doesn't this lead to a large number of repos that are difficult to manage and maintain?

Regarding shared flows, we can group all of them together in a single repo, right? If so, how can we maintain their versions?

Or are they similar to API proxies, with versions maintained the same way?

And regarding configurations: the same configurations have to be a part of both API Proxy as well as SF, if I am not wrong?

Thanks for the Jenkins sample; I will definitely try it out. We are trying to use Azure DevOps and GitLab for the same purpose.

I have used Maven for packaging and deployment.

If we adopt the above source control strategy with Maven: since we currently have a shared POM for both API proxies and SFs, will we have to create a single POM containing all of that information in each repo?

Thanks for the help.

Please also let us know any suggestions you have for building a complete DevOps setup for Apigee. That will help us a lot.

Thanks,

Naveen

---

The recommendation is not to have more than 2 live versions. Even if you have 4, it's OK to have 200 repos as you mentioned. Treat them as 200 different application services, each providing some capability. Again, 200 is not a big number 🙂

It's easier to manage the repos that way than to combine them. Imagine you do combine them: then your CI/CD has to pick the correct version to push to Apigee. For example, say you have a single repo called "Foo" with two directories for versions, "v1" and "v2". If "v1" has a change, your build system must detect that and deploy only "v1" to Apigee. All this really complicates your pipeline, which is why I recommend separate repos.
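To show the extra work a combined repo pushes onto the pipeline, here is a minimal Python sketch that maps the files changed in a commit to the version directories that would need redeploying. It assumes a hypothetical layout where each version lives under a top-level `v<N>/` directory; the list of changed files could come from something like `git diff --name-only <previous> <current>`.

```python
from pathlib import PurePosixPath


def versions_to_deploy(changed_files):
    """Return the sorted version directories (e.g. "v1") touched by a change set.

    Assumes a hypothetical layout where each version lives in a top-level
    directory named v<N>; files outside those directories are ignored.
    """
    versions = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        top = parts[0] if len(parts) > 1 else ""
        if top.startswith("v") and top[1:].isdigit():
            versions.add(top)
    return sorted(versions)
```

With one repo per version, as recommended here, this logic is unnecessary: any change in the repo simply means that version is rebuilt and redeployed.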

The same applies to shared flows. If you combine them all in one repo and change one of them, you need a way to deploy just that one. Also think about access to this repo. Imagine there are multiple teams creating shared flows. Will you give access to everyone? What if someone updates another team's shared flow just because they have access? To avoid all that, it's better to keep these as separate repos, so that you have better control and a less complicated CI/CD process.

Shared flows are not like proxies, so they don't have versions exactly. But you can introduce versions if you want; a later version could contain additional logic that applies only to later versions of the APIs. So yes, you could version shared flows as well.

Regarding configurations, it depends on how you structure them. If there are common configurations, you could keep them in a separate repo and push them as part of your pipeline. I have not seen that with other customers, but it can certainly be done.

I know a lot of customers who use Azure DevOps.

Please try the repo I shared. You will see that it has no shared-pom.xml; I have a single pom file that does everything (static code analysis, unit testing, code coverage, configurations, packaging, deployment, and finally functional testing). You can do the same for your repos.

Hope that clarifies.

---

I am trying out the Jenkins deployment you suggested and going through the Jenkinsfile to understand each step.

1. I don't understand what this step does with respect to the API proxy:

   ```groovy
   stage('Package proxy bundle') {
       steps {
           sh "mvn -ntp apigee-enterprise:configure -P${env.APIGEE_PROFILE} -Ddeployment.suffix=${env.APIGEE_PREFIX}"
       }
   }
   ```

2. What does packaging do overall? When I used the Maven command below, it created a copy of the API proxy bundle along with a versioned ZIP:

   ```shell
   mvn package -f pom.xml -Ptest -Dusername=<username> -Dpassword=<password>
   ```

3. In CI/CD, don't we need to version-control the package or save it in Git?

4. Even if we use the Apigee management APIs, we cannot use the ZIP file to deploy the bundles. Correct me if I am wrong.

Overall, I want to understand why a packaging stage should be included in the CI/CD pipeline. Any hints on that would be a great help.

Sorry if my questions are very basic; I have only recently started working on Apigee. Please help me out.

Thanks,

Naveen

---

@Naveen Chalageri,

For #2: The Maven package phase bundles all the proxy components (policies, scripts, proxy endpoints, target endpoints) into a ZIP proxy bundle that can be used for deployment.
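Conceptually, the packaging step produces a ZIP whose root folder is `apiproxy/` (shared flow bundles use `sharedflowbundle/` instead). The minimal Python sketch below does only that zipping. The real Maven plugin may also apply profile-specific configuration, so treat this as an illustration of the bundle layout, not a replacement for the plugin. The function name and arguments are hypothetical.

```python
import os
import zipfile


def package_bundle(project_dir: str, output_zip: str) -> list:
    """Zip project_dir/apiproxy into output_zip and return the archive entry names."""
    source = os.path.join(project_dir, "apiproxy")
    if not os.path.isdir(source):
        raise FileNotFoundError(f"no apiproxy/ directory under {project_dir}")
    names = []
    with zipfile.ZipFile(output_zip, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, dirs, files in os.walk(source):
            dirs.sort()  # walk subdirectories in a stable order
            for fname in sorted(files):
                full_path = os.path.join(root, fname)
                # Archive names are relative to project_dir, so they start with "apiproxy/".
                arcname = os.path.relpath(full_path, project_dir).replace(os.sep, "/")
                zf.write(full_path, arcname)
                names.append(arcname)
    return names
```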

For #3: You should not store the ZIP proxy bundle in your proxy/configuration Git repo. The ZIP proxy bundle is a build artifact and should be managed by the CI/CD tool you are using. Store it in a separate artifact repository, labeled with build numbers, so that specific builds can be pulled from there.
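One way to "mark" builds in an artifact repository is to put the build number in both the path and the file name. The layout below is a hypothetical convention, loosely modeled on how Maven repositories nest artifacts by version; adapt it to whatever your artifact tool expects.

```python
def artifact_path(proxy: str, build_number: int, root: str = "apigee-bundles") -> str:
    """Hypothetical layout: <root>/<proxy>/<build>/<proxy>-<build>.zip"""
    if build_number < 1:
        raise ValueError("build numbers are expected to start at 1")
    return f"{root}/{proxy}/{build_number}/{proxy}-{build_number}.zip"


def build_number_of(path: str) -> int:
    """Read the build number back from a path produced by artifact_path."""
    return int(path.rsplit("/", 2)[-2])
```

Pulling build 42 for a promotion then means fetching exactly `apigee-bundles/Foo-v1/42/Foo-v1-42.zip` instead of rebuilding from source.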

For #4: You can use the Apigee management APIs to deploy the ZIP bundle. It's a two-step process: first import the ZIP bundle, then deploy it to the required environment. Please check the links below:

Import proxy bundle: https://apidocs.apigee.com/management/apis/post/organizations/%7Borg_name%7D/apis-0

Deploy Proxy: https://apidocs.apigee.com/management/apis/post/organizations/%7Borg_name%7D/environments/%7Benv_nam...
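The two calls can be sketched as URL builders in Python. The paths follow the Apigee Edge management API pattern (`/v1/organizations/{org}/apis?action=import&name=...` for the import, then `.../environments/{env}/apis/{api}/revisions/{rev}/deployments` for the deployment), but please verify them against the linked documentation. `MGMT_BASE` assumes Edge's public cloud host, and authentication and the HTTP calls themselves are deliberately left out.

```python
from urllib.parse import quote, urlencode

# Assumption: Apigee Edge public cloud management host; on-premises
# installations use their own management server URL instead.
MGMT_BASE = "https://api.enterprise.apigee.com/v1"


def import_url(org: str, proxy: str) -> str:
    """Step 1: POST the ZIP bundle here to create a new revision of the proxy."""
    query = urlencode({"action": "import", "name": proxy})
    return f"{MGMT_BASE}/organizations/{quote(org)}/apis?{query}"


def deploy_url(org: str, env: str, proxy: str, revision: int) -> str:
    """Step 2: POST here to deploy that revision to one environment."""
    return (
        f"{MGMT_BASE}/organizations/{quote(org)}/environments/{quote(env)}"
        f"/apis/{quote(proxy)}/revisions/{revision}/deployments"
    )
```

In the import step the ZIP is typically sent as a multipart form upload; the import response should report the new revision number, which is what you then pass to `deploy_url`.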

---

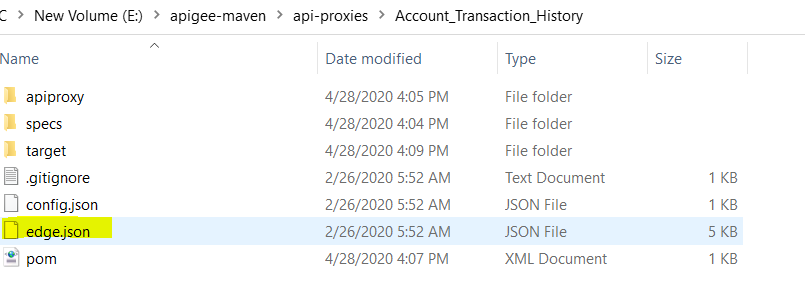

Hi @Nagashree B,

Since we use Maven for packaging, configurations like KVMs, caches, target servers, etc. are not part of the ZIP file once packaging is done.

How can we manage these configurations if we later deploy from the artifact repositories?

I have attached a screenshot of the folder structure.

Thanks,

Naveen

---

Please check my repo; it has the configurations as well. Configurations are not part of the bundle (ZIP), so you need to push them before deploying. You can control what executes when by using phases in the pom.xml. You will be using the Apigee Maven config plugin to push these configurations; you can check the samples there as well.

---

+1 to everything @Nagashree B mentioned.

1) I have defined that as a separate step because it is part of packaging. In that step, the plugin takes the code from the repo and creates the bundle (ZIP). Most builds do this, usually as a separate stage. The bundle can also be pushed to an artifact repository for deployment to higher environments; pushing it there would be the last step, after testing has completed successfully.

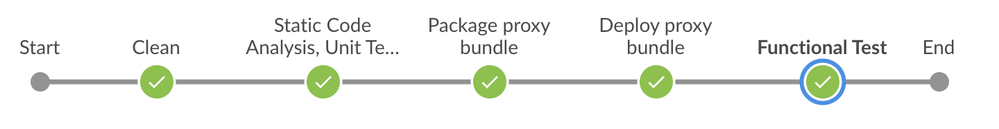

2) When you invoke a phase, Maven executes its lifecycle (please see this link) in top-down order. So if the pom has other build plugins bound to those phases, they all get executed in that order. For example, if I run mvn package, it also runs the unit tests, so I wouldn't be able to show packaging as a separate stage in my pipeline. Hence I split it up by calling the individual task for each stage, so that the pipeline is cleaner to visualize. See the screenshot.

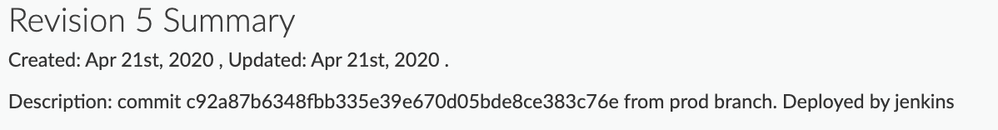

3) As @Nagashree B mentioned, it depends. In my example I have not used an artifact repository, but you could. I am relying entirely on my source control and branches for that. When my example deploys, it automatically adds the Git branch, commit ID, and deployed-by info to the description of the proxy, so you know exactly which source it was pushed from. See the screenshot.

4) No, you can. In fact, after creating the ZIP, the Maven plugin itself uses the management API to push it to Apigee. I would just recommend sticking to one tool if it does everything you need. Don't complicate things by mixing tools (like Maven for packaging and apigeetool for deployment). Although you can, I recommend one tool for ease of use and maintainability.
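Point 3 describes stamping the Git branch, commit ID, and deployer into the proxy description. A minimal Python sketch of building and reading back such a string follows. The `key=value` format is a hypothetical choice, and how the string is injected into the proxy (for example, through a Maven property) is not shown; the `git rev-parse` calls are standard Git commands.

```python
import subprocess


def git_output(*args: str) -> str:
    """Run a git command in the current directory and return its trimmed stdout."""
    result = subprocess.run(["git", *args], capture_output=True, text=True, check=True)
    return result.stdout.strip()


def current_source() -> tuple:
    """Return (branch, short commit ID) for the checked-out code."""
    branch = git_output("rev-parse", "--abbrev-ref", "HEAD")
    commit = git_output("rev-parse", "--short", "HEAD")
    return branch, commit


def build_description(branch: str, commit: str, deployed_by: str) -> str:
    """Hypothetical format: space-separated key=value pairs (values must not contain spaces)."""
    return f"branch={branch} commit={commit} deployedBy={deployed_by}"


def parse_description(description: str) -> dict:
    """Recover the fields written by build_description."""
    return dict(item.split("=", 1) for item in description.split())
```

In a CI job, `deployed_by` would more likely come from the CI server's build-user information than from the local login.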

To summarize: packaging is an important part of your CI/CD process, as in any other language. In Java you create a JAR, WAR, or EAR, and that is always a separate task; I'm just applying the same approach here. Hope this helps!
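The lifecycle behavior from point 2 can be modeled in a few lines of Python. The list is a simplified subset of Maven's default lifecycle (the real one has more phases, such as `process-resources` and `test-compile`), but it shows why invoking `package` also runs whatever is bound to `test`.

```python
# Simplified subset of Maven's default lifecycle, in execution order.
DEFAULT_LIFECYCLE = ["validate", "compile", "test", "package", "verify", "install", "deploy"]


def phases_executed(requested: str) -> list:
    """Invoking a phase runs every earlier phase first, in order, then the phase itself."""
    if requested not in DEFAULT_LIFECYCLE:
        raise ValueError(f"unknown phase: {requested}")
    return DEFAULT_LIFECYCLE[: DEFAULT_LIFECYCLE.index(requested) + 1]
```

Invoking a plugin goal directly (for example `mvn apigee-enterprise:configure`, as in the Jenkinsfile step quoted in this thread) generally runs just that goal rather than the preceding phases, which is what makes it possible to give packaging its own pipeline stage.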

---

Hi @Nagashree B, @Sai Saran Vaidyanathan,

Thanks a lot for such quick responses; they cleared up many of my doubts.

So can all the artifacts be saved on a server, or should they be source-controlled separately?

Also, can we have a custom revision scheme in Apigee? For example, suppose we had a release of API proxy v1 containing 3-4 new features, and then we found a bug during manual testing. Can we make the version v1.1, as in any Java application?

@Sai Saran Vaidyanathan, as you suggested, I am trying to use Maven for everything, so I am trying to understand it as much as possible.

Thanks,

Naveen

---

No. There are artifact management tools for that, like JFrog, Nexus, Archiva, and many more.

With your second question you are heading into another topic: API versioning. There are many community posts on that, so please refer to those, and create another post on versioning if you still have questions. I would like this post to stay focused on source code management and CI/CD.

High-level answer to your question: try to stick to major versions, not minor versions. In other words, don't do 1.1, etc. If your code fix introduces a breaking change, release it as v2. If it is not a breaking change, you can release it as v1 itself. Hopefully this answers your question. If not, please check out other posts on versioning and create a new post with your questions on that topic.
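The major-only rule can be written as a tiny Python function. It reuses the `<Name>-v<N>` repo naming suggested earlier in the thread; the function name is hypothetical.

```python
def release_target(api_name: str, current_major: int, breaking_change: bool) -> tuple:
    """Return (version, repo) for the next release under major-only versioning.

    Breaking changes get a new major version (and, with one repo per version,
    a new repo); non-breaking fixes ship again under the current major version.
    """
    major = current_major + 1 if breaking_change else current_major
    version = f"v{major}"
    return version, f"{api_name.capitalize()}-{version}"
```

A bug fix found in manual testing of v1 would therefore go out as v1 again, in the same repo, rather than as v1.1.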

---

Thanks Sai. I will check that post.

---

Hi @Naveen Chalageri,

I believe Sai has given you the information you need to get started on source control and configuring CI/CD for Apigee deployments.

You mentioned multiple versions of the API proxies and shared flows. In reality, you will not have more than two versions of an API live. So you could also consider a strategy of keeping multiple release branches and merging to master with appropriate tags (a GitFlow-style model) to maintain the codebase in a single repository. Obviously, with this approach your CI/CD must be robust enough to handle builds and deployments for multiple versions of the APIs.
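If you go with the single-repo, release-branch model instead, the pipeline has to work out which API version a branch builds. A minimal Python sketch, assuming a hypothetical naming convention of `release/v<major>` with an optional `.minor.patch` suffix:

```python
import re
from typing import Optional

# Hypothetical convention: release/v<major>, optionally followed by .<minor>.<patch>
RELEASE_BRANCH = re.compile(r"release/(v\d+)(?:\.\d+)*$")


def version_for_branch(branch: str) -> Optional[str]:
    """Return the API major version a release branch builds, or None for other branches."""
    match = RELEASE_BRANCH.match(branch)
    return match.group(1) if match else None
```

Branches that do not match (feature branches, for example) return `None`, so the pipeline can run build and test stages for them without deploying.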

For the config repo, the common approach is to keep the proxy-related configurations in the same repo as the code. You can also have a separate config repo common to all proxies. The advantage is a single place where all the configuration is stored. The downside is that developers have to understand the config location structure and learn how to make config changes for their own components without tampering with others'. The CD for proxy deployment also needs to ensure that the config is deployed first and the proxy afterwards.

I am not very clear on your statement "The same configurations have to be a part of both API Proxy as well as SF". If you can be a bit more specific, I might be able to help.

Lastly, I would like to mention that I have used Azure DevOps and Azure Git repos to configure the CI/CD for all Apigee-related code and configuration.

---

Hi @Nagashree B,

Thanks for the response. By configurations, I meant common configurations, if there are any, shared between API proxies and shared flows.

Can you please tell me what should ideally be part of the build stage of the pipeline for Apigee?

I was thinking of including static code analysis, unit testing, etc.

Next is the packaging stage: do we need to version-control the packages? I am using the Maven plugin for that.

Do we really need the package stage as part of CI/CD?

Can we go directly to deployment?

---

@Naveen Chalageri,

The CI (build) consists of static code analysis and creating the proxy bundle as a build artifact. The build artifacts are published to and stored in the Azure DevOps artifacts repository.

I am using the Maven plugin with the package option to create the Apigee proxy bundle and push it to the artifact repository.

The CD retrieves the build artifact and deploys the proxy bundle to the respective Apigee environments. For the CD you can use the apigeetool Node module or the Maven plugin with the deploy option.

Separating CI and CD helps ensure that the same build can be deployed to multiple environments.

I would not advise deploying directly, as it might be difficult to track which code commits are deployed across environments.
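The build-once, deploy-many idea can be sketched in Python as a small ledger that records each CI build's commit and bundle digest, refuses to deploy a bundle whose bytes differ from what CI produced, and answers "which commit is in this environment?". The `Ledger` class is hypothetical; in practice this information lives in your CI/CD tool and artifact repository.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """Hypothetical record of CI builds and what each environment is running."""

    builds: dict = field(default_factory=dict)    # build number -> (commit, sha256 of bundle)
    deployed: dict = field(default_factory=dict)  # environment -> build number

    def register_build(self, build_number: int, commit: str, bundle: bytes) -> str:
        """CI: record the bundle produced once for this commit."""
        digest = hashlib.sha256(bundle).hexdigest()
        self.builds[build_number] = (commit, digest)
        return digest

    def promote(self, build_number: int, env: str, bundle: bytes) -> None:
        """CD: deploy only if the bundle is byte-for-byte the one CI built."""
        _commit, digest = self.builds[build_number]
        if hashlib.sha256(bundle).hexdigest() != digest:
            raise ValueError("bundle differs from the CI build; refusing to deploy")
        self.deployed[env] = build_number

    def commit_in(self, env: str) -> str:
        """Answer 'which commit is running in this environment?'"""
        return self.builds[self.deployed[env]][0]
```

Promoting build 7 from test to prod reuses the same bytes, so the commit reported for both environments is guaranteed to match.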
