GatewayTimeout 504 error on production (Google Cloud, Cloud Forums, Apigee)


Hello,

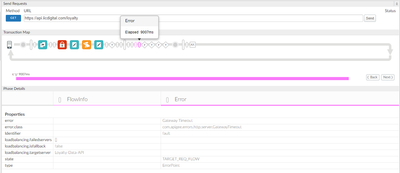

We receive the following error on roughly one in every five GET requests that Apigee makes to our microservices:

{"fault":{"faultstring":"Gateway Timeout","detail":{"errorcode":"messaging.adaptors.http.flow.GatewayTimeout"}}}

Do you know what causes this error? When I call the prod environment directly with Postman, I don't receive this error at all.

I traced the request and pasted one part of it below:

```xml
<Point id="FlowInfo">
  <DebugInfo>
    <Timestamp>06-12-21 09:17:51:088</Timestamp>
    <Properties>
      <Property name="current.flow.name">PostFlow</Property>
    </Properties>
  </DebugInfo>
</Point>
<Point id="FlowInfo">
  <DebugInfo>
    <Timestamp>06-12-21 09:17:51:089</Timestamp>
    <Properties>
      <Property name="loadbalancing.targetserver">Loyalty-Data-API</Property>
      <Property name="loadbalancing.isfallback">false</Property>
      <Property name="loadbalancing.failedservers">[]</Property>
    </Properties>
  </DebugInfo>
</Point>
<Point id="Paused"/>
<Point id="FlowInfo">
  <DebugInfo>
    <Timestamp>06-12-21 09:18:00:092</Timestamp>
    <Properties>
      <Property name="Identifier">fault</Property>
    </Properties>
  </DebugInfo>
</Point>
<Point id="Error">
  <DebugInfo>
    <Timestamp>06-12-21 09:18:00:092</Timestamp>
    <Properties>
      <Property name="error">Gateway Timeout</Property>
      <Property name="type">ErrorPoint</Property>
      <Property name="state">TARGET_REQ_FLOW</Property>
      <Property name="error.class">com.apigee.errors.http.server.GatewayTimeout</Property>
    </Properties>
  </DebugInfo>
</Point>
```

Can you explain how to debug this issue further in Apigee? If you need more data, I can add it here.

Thanks,

Ivan


That's a frustrating problem.

Just looking at what you've got there, I cannot think of anything inside of Apigee that would cause that kind of timeout.

Are you saying that reliably one request in every five receives the timeout?

The first thing I would do is check the logs on the microservice side. Apigee is observing a timeout; what is the receiving side observing? Is there logging at the ingress gateway? Look there for information that might help you diagnose the issue. I don't know why you would see a difference when you use Postman, but again, I cannot think of anything within Apigee that would cause the behavior you are reporting.

What is the target server configuration? Is it simply an HTTPTargetConnection with a static URL? Are you using TargetServers? Do you have load balancing and health monitoring configured for the target endpoints within Apigee? If so, can you try different variations of that configuration to see whether it has any effect on the behavior?

Good luck on your investigation.


Hi,

Following up on ijancic's behalf.

When sending requests directly to the microservice's ingress, we consistently get quick responses. However, when going through Apigee, every few requests are slow: sometimes 3, 5, or 6 seconds, and sometimes the request times out at 9 seconds. The timeout is not on the microservice side; it happens before the request is sent. I also see that the average proxy response time for this endpoint is 785 ms, which is the time spent processing the request and forwarding it to the backend server. Do you know what could cause this poor performance?

In the flow for this proxy we have these steps:

- Verify and remove API key
- Extract root path
- Extract key from the Key Value Maps
- Add authorization header

We have other proxies with similar steps that perform much better.

Note also that this issue occurs only in the prod environment. It performs normally in development, and the revision is the same.
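To put numbers on "every few requests are slow", it can help to send a batch of identical requests and record each one's duration and outcome, then repeat the run directly against the ingress, through Apigee prod, and through Apigee dev. The following is a minimal Python sketch using only the standard library; the URLs in the usage comment are placeholders, not endpoints from this thread. Because `urllib` sends `Connection: close`, every sample includes connection setup, which is the phase the timeouts appear to hit.

```python
import time
import urllib.error
import urllib.request


def sample_latency(url, count=20, timeout_s=10.0, headers=None):
    """Send `count` sequential GETs to `url`; return (elapsed_s, outcome) per request.

    `outcome` is the HTTP status code (including error statuses such as 504)
    or the exception class name when no response arrived at all.
    """
    results = []
    for _ in range(count):
        req = urllib.request.Request(url, headers=headers or {})
        start = time.perf_counter()
        try:
            with urllib.request.urlopen(req, timeout=timeout_s) as resp:
                resp.read()
                outcome = resp.status
        except urllib.error.HTTPError as err:  # must precede OSError: HTTPError subclasses it
            outcome = err.code
        except OSError as err:  # URLError, socket timeouts, connection resets
            outcome = type(err).__name__
        results.append((time.perf_counter() - start, outcome))
    return results


def slow_requests(results, threshold_s=1.0):
    """Return (index, elapsed_s, outcome) for every sample slower than threshold_s."""
    return [(i, t, o) for i, (t, o) in enumerate(results) if t > threshold_s]


# Hypothetical usage (placeholder URLs):
#   via_apigee = sample_latency("https://<org>-prod.apigee.net/<basepath>/items")
#   direct = sample_latency("https://<microservice-ingress>/items")
#   print(slow_requests(via_apigee), slow_requests(direct))
```

If the slow samples appear only on the Apigee prod path, that narrows the search to what sits between the prod runtime and the backend.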


> The timeout is not on the microservice side, and it happens before the request is sent. Also, I see that the average proxy response time for this endpoint is 785ms - so that is the time spent to process the request and forward it to backend server. Do you know what can cause this poor performance?

Yes, I have some ideas.

The #1 reason I see for large latency between Apigee and an upstream system is... network latency. Apigee can run "anywhere". If you are using Apigee SaaS (Edge or X), Google runs Apigee on your behalf in a cloud datacenter, which itself is sited in some geography. That datacenter might be in the US (west, east, central, or south), in the EU (Frankfurt, Netherlands, Belgium, Zurich, London), or in Asia (Seoul, Osaka, Hong Kong, Taiwan)... you get the idea. If you are using customer-managed Apigee (OPDK or hybrid), the datacenter can be literally anywhere.

Connecting from a client system (say, Postman) directly to a microservice will be fast if the client and the service are near each other on the network. Suppose your workstation is in an office building in New York City, and your microservice runs in the Northern Virginia region of GCP. Sending data from NYC to Virginia is quick: less than 10 ms of round-trip transit time. Now insert a proxy between the client and the service, and suppose that proxy runs in Zurich. The data must now go from NYC to Zurich, then to the microservice back in Virginia, and the response makes the same trip in reverse. This can add hundreds of milliseconds of latency to the call. Add VPNs and other network inefficiencies, and latency above 700 ms on a call is easy to reach. To optimize this, you need to know where on the network your client (Postman or whatever), your microservice, and your Apigee proxy are. You want the path among those three parties to be as short as possible.
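The NYC/Zurich/Virginia example can be turned into rough numbers. This minimal Python sketch computes a lower bound on round-trip propagation delay from great-circle distances. The city coordinates, the use of Ashburn as a stand-in for Northern Virginia, and the figure of roughly 200 km per millisecond for light in optical fiber are illustrative assumptions; real routes are longer and add handshakes, queuing, and processing, so measured latency will be higher.

```python
import math

# Approximate (latitude, longitude) in degrees; illustrative assumptions,
# not actual datacenter locations.
NYC = (40.71, -74.01)
ASHBURN_VA = (39.04, -77.49)  # stand-in for "Northern Virginia"
ZURICH = (47.37, 8.54)

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # light in fiber covers roughly 200 km per millisecond


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_rtt_ms(*hops):
    """Lower bound on round-trip propagation delay along a path of points."""
    one_way_km = sum(great_circle_km(p, q) for p, q in zip(hops, hops[1:]))
    return 2 * one_way_km / FIBER_KM_PER_MS


direct = min_rtt_ms(NYC, ASHBURN_VA)             # client -> service
via_proxy = min_rtt_ms(NYC, ZURICH, ASHBURN_VA)  # client -> proxy -> service
print(f"direct: {direct:.1f} ms, via Zurich: {via_proxy:.1f} ms")
```

Under these assumptions the direct path comes out at a few milliseconds, while the detour through Zurich costs well over 100 ms of pure propagation delay per round trip, before counting the extra round trips that TCP and TLS handshakes add on each leg.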

Also keep in mind that payload size affects transit time. A 10 MB request takes many more packets than a single GET /hello call, and it takes time to transmit all of that data. This compounds with the network distance I described above.
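Transmission time can be estimated as payload size divided by link bandwidth. A minimal Python sketch, assuming a hypothetical 100 Mbit/s bottleneck link and ignoring TCP slow start, retransmits, and protocol overhead (all of which make real transfers slower):

```python
def transfer_time_ms(payload_bytes, bandwidth_mbit_s):
    """Lower-bound time, in ms, to push payload_bytes through a link of the given speed."""
    # Multiply before dividing so whole-number inputs give exact results.
    return payload_bytes * 8 * 1000 / (bandwidth_mbit_s * 1_000_000)


small = transfer_time_ms(200, 100)             # a small JSON body, about 200 bytes
large = transfer_time_ms(10 * 1_000_000, 100)  # a 10 MB request
print(f"small: {small:.3f} ms, large: {large:.0f} ms")
```

On that assumed link, a ~200-byte JSON body takes a small fraction of a millisecond, while a 10 MB body takes 800 ms before any distance-related delay is added.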

The next thing I recommend checking is the TLS handshake negotiation at each connection point. There will be TLS between the client and Apigee, and TLS between Apigee and the microservice. If keep-alive is disabled on either end, every request pays for a fresh TCP and TLS handshake, which is unnecessary, avoidable latency. If you are using HTTP/1.0, switch to HTTP/1.1.
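What keep-alive buys you is connection reuse: HTTP/1.1 connections are persistent by default, so several requests can share one TCP (and TLS) setup. This minimal Python sketch with the standard `http.client` module illustrates the client side only; the function name and parameters are hypothetical, and Apigee's own target keep-alive is configured separately on the target connection.

```python
import http.client


def fetch_many(host, port, path="/", count=3, reuse=True, use_tls=False, timeout_s=5.0):
    """Send `count` GETs and return their status codes.

    With reuse=True all requests share one persistent HTTP/1.1 connection, so
    the TCP handshake (and the TLS handshake, if use_tls) is paid once. With
    reuse=False the connection is closed before every request, so each
    request pays the full setup cost again.
    """
    conn_cls = http.client.HTTPSConnection if use_tls else http.client.HTTPConnection
    conn = conn_cls(host, port, timeout=timeout_s)
    statuses = []
    try:
        for _ in range(count):
            if not reuse:
                conn.close()  # the next request() transparently opens a new connection
            conn.request("GET", path)
            resp = conn.getresponse()
            resp.read()  # drain the body; the connection cannot be reused until then
            statuses.append(resp.status)
    finally:
        conn.close()
    return statuses
```

Timing `fetch_many(..., reuse=True)` against `reuse=False` on a TLS endpoint shows the per-request handshake cost directly.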

Then I would look at the API proxy. Operations like verifying an API key, extracting a path, or removing or injecting a header are all in-memory operations. VerifyAPIKey requires a lookup in the persistent store on the first call, but subsequent calls read from cache, so they are fast. Together, all of those steps should execute within 1 to 2 ms in total; that's not where the latency is. On the other hand, if the API proxy makes remote calls, such as a ServiceCallout to retrieve an authorization table or a ServiceCallout to send log messages to a remote system, those can consume time, because again they are calls across the network, and all the issues I mentioned above apply. I recently dealt with a customer whose proxy made 4 logging calls, each consuming 25 to 30 ms. Collapsing them into a single call and moving it into the PostClientFlow, which runs after the response has been sent to the client, removed over 100 ms of latency from the API proxy itself.

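Last thing: if you are seeing timeouts before the request is sent, check the TLS connection. The Apigee target connection has separate timeouts for keep-alive, I/O, and connection establishment (the `keepalive.timeout.millis`, `io.timeout.millis`, and `connect.timeout.millis` properties of the HTTPTargetConnection). It sounds like it is failing on the connection timeout.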
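To see which phase is failing from outside Apigee, you can time the TCP connect, the TLS handshake, and the first response byte separately. This is a minimal Python sketch under the assumption that you can run it from a host with a network path similar to the Apigee runtime's; it is a diagnostic approximation, not Apigee's own timeout logic, and the function name is hypothetical.

```python
import socket
import ssl
import time


def probe_phases(host, port, use_tls=False, path="/",
                 connect_timeout_s=3.0, read_timeout_s=10.0):
    """Time TCP connect, optional TLS handshake, and first response byte.

    Returns a dict of per-phase durations in seconds (None if the phase was
    not reached) plus 'error', which names the phase that failed, if any.
    """
    result = {"connect_s": None, "tls_s": None, "first_byte_s": None, "error": None}
    start = time.perf_counter()
    try:
        sock = socket.create_connection((host, port), timeout=connect_timeout_s)
    except OSError as err:  # refused, unreachable, or connect timeout
        result["error"] = f"connect: {type(err).__name__}"
        return result
    result["connect_s"] = time.perf_counter() - start

    if use_tls:
        start = time.perf_counter()
        try:
            # wrap_socket performs the handshake immediately by default.
            sock = ssl.create_default_context().wrap_socket(sock, server_hostname=host)
        except OSError as err:  # ssl.SSLError and timeouts are OSError subclasses
            sock.close()
            result["error"] = f"tls: {type(err).__name__}"
            return result
        result["tls_s"] = time.perf_counter() - start

    with sock:
        sock.settimeout(read_timeout_s)
        request = f"GET {path} HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
        sock.sendall(request.encode("ascii"))
        start = time.perf_counter()
        try:
            first = sock.recv(1)
        except OSError as err:
            result["error"] = f"read: {type(err).__name__}"
            return result
        if not first:
            result["error"] = "read: connection closed"
            return result
        result["first_byte_s"] = time.perf_counter() - start
    return result
```

A result whose `error` starts with `connect` points at routing, DNS, or firewall problems; one that fails only at `read` points at a slow or stuck backend.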

> when going through apigee, every few requests are slow, sometimes it's 3,5,6 seconds, sometimes it timeouts at 9. ... We have other proxies who have similar steps, but perform much much better.
>
> Also to note, this issue only persists in the prod environment. It performs normally on development, and the revision is the same.

Taken together, your observations suggest a network issue, probably not an Apigee proxy issue. Maybe there is a "virtual IP" between Apigee and the upstream prod system: a network device like an F5 or NetScaler. Maybe that device is misconfigured and has an incorrect target.

It's possible that your Apigee prod environment is much more stressed than your Apigee non-prod environment, and that the extra stress leads to contention in the message processors (MPs), which in turn leads to a higher rate of timeouts. If you are using Apigee cloud, that should never happen. If you are managing the Apigee runtime yourself (OPDK or hybrid), check the health of the MPs to make sure they are not over-stressed.

But I suggest that you investigate "network" before "MP".


I do not think it is a latency issue, for a few reasons:

- Other proxies on the same Apigee account work well.
- The 785 ms average proxy response time is not consistent. When it works well, the whole request takes about 150 ms; when it doesn't, it times out, even before the request is sent to the backend server.
- There is much more traffic in the production environment, but we are not seeing issues like this on other proxies.
- We are using HTTP/1.1.
- Our payloads are small, usually a simple JSON body.
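An average like 785 ms can hide exactly this bimodal behavior. In a hypothetical sample (not data from this thread) where nine requests take 150 ms and one takes 6,500 ms, the mean is exactly 785 ms while the median is 150 ms. This minimal Python sketch summarizes latency samples with the median, a nearest-rank 95th percentile, and a count of slow requests alongside the mean; the threshold and function name are illustrative choices.

```python
import math
import statistics


def summarize(latencies_ms, slow_threshold_ms=1000):
    """Summarize latency samples so rare slow requests are not hidden by the mean.

    Raises statistics.StatisticsError if latencies_ms is empty.
    """
    ordered = sorted(latencies_ms)
    p95_rank = math.ceil(0.95 * len(ordered))  # nearest-rank 95th percentile
    return {
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "p95": ordered[p95_rank - 1],
        "slow": sum(1 for x in ordered if x > slow_threshold_ms),
    }


print(summarize([150] * 9 + [6500]))
```

Reporting the median and tail separately makes it clear whether the problem is "everything is a bit slow" (latency) or "most calls are fine and a few hang" (a failing path, such as one bad backend address).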


@ndragas I am facing the same issue. Can you please share the root cause of your issue and the fix you applied?


Hi @mody!

It wasn't an Apigee issue.

It was a DNS issue. We had two domain-to-IP mappings, and one of them was outdated. Whenever the load balancer sent a request to the outdated service, it caused a delay. We fixed the issue by removing the invalid (outdated) DNS mapping.
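Since the root cause was a stale DNS mapping, a quick check is to resolve the backend hostname and probe every returned address individually: a stale entry shows up as one address that times out or refuses while the others connect quickly. This minimal Python sketch assumes the stale record is visible as an extra address from the resolver you run it against; a load balancer holding its own stale backend list would need to be checked in its own configuration instead. Run it from a host that shares the Apigee runtime's DNS view if you can, since results from a workstation may differ. The hostname in the usage comment is a placeholder.

```python
import socket
import time


def resolve_all(host, port=443):
    """Return every distinct IP address the local resolver gives for host."""
    infos = socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def probe_each_address(host, port=443, timeout_s=3.0):
    """TCP-connect to each resolved address separately and time the attempt.

    Returns {ip: (status, elapsed_s)} where status is "ok" or the exception
    class name (e.g. "TimeoutError", "ConnectionRefusedError").
    """
    report = {}
    for ip in resolve_all(host, port):
        start = time.perf_counter()
        try:
            with socket.create_connection((ip, port), timeout=timeout_s):
                report[ip] = ("ok", time.perf_counter() - start)
        except OSError as err:
            report[ip] = (type(err).__name__, time.perf_counter() - start)
    return report


# Hypothetical usage (placeholder hostname):
#   for ip, (status, secs) in probe_each_address("<backend-hostname>", 443).items():
#       print(ip, status, f"{secs:.3f}s")
```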


Appreciate the quick reply, @ndragas. Thanks!
