Calling APIGEE Internally

Hi,

I have a requirement to upload huge data through Apigee. But since there is a size restriction in the proxy itself, I needed to put the upload service behind a public-facing interface (securing it against DDoS etc. is a separate concern, no need to care about it here). I am basically planning to send the image upload request directly to my image store service along with the token, and then have the image store validate that token through a simple Apigee proxy endpoint that uses the ValidateAccessToken policy. Is there a specific way to implement this token validation endpoint on Apigee so that it is callable only from that upload service?

Sorry if I am not using the proper terminology.


Hi @olgunkaya - Have you looked at using a signed URL for this case? Dino explains it in detail here.


Hi Mark, thanks for the heads up. Unfortunately, a signed URL requires too much development for our custom image store solution (we are not planning to use the big cloud providers' storage solutions). Or perhaps I have totally misunderstood the implementation details of signed URLs.


Got it. Dino has already added an excellent response below on what you need to think about if you're not planning to use a service that already supports signed URL access to its storage endpoints.


Yes, what Mark said.

Your meaning is coming through clearly enough. I imagine you want the upload to happen directly between the client and the storage service, and, before the storage service accepts the upload, you want the storage service to call Apigee and ask it to validate the uploader's token. I have some views on that.

You asked if it is possible to make the "token validation endpoint" callable only from a specific set of callers: the storage service, in your case. One way to make a proxy endpoint callable only from a specific client is to use transport-layer security (TLS) with mutual authentication. In that case the Apigee endpoint can authenticate the caller, and only a caller with the right certificate will be able to call the Apigee endpoint.

The interaction sequence I suppose would be something like this:

- uploader client sends a request-for-token to Apigee, presenting some sort of credentials (maybe client id and secret)

- Apigee evaluates that request and if valid, generates a token and sends it to the uploader client as response.

- uploader client then sends the received token, along with its to-be-uploaded file, to the storage service

- storage service calls Apigee to ask Apigee to validate the uploader's token.

- Apigee allows the inbound call only if the TLS certificate presented by the storage service is trusted. (Transport layer authentication of client)

- Apigee evaluates the token and responds with "200 OK" or "401 Unauthorized", depending on token validity.

- Storage service makes the allow/deny decision based on that response.
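A minimal Python sketch of the storage-service side of this sequence, assuming Apigee is configured for mutual TLS and that the validation proxy reads the uploader's token from a `token` query parameter (as in the OAuthV2 example later in this thread). The function names, certificate paths and validation URL are hypothetical placeholders. The client certificate is presented through the SSL context, and only an HTTP 200 from Apigee counts as "allow".

```python
import ssl
import urllib.error
import urllib.parse
import urllib.request
from typing import Optional


def make_mtls_context(client_cert: str, client_key: str,
                      ca_bundle: Optional[str] = None) -> ssl.SSLContext:
    """TLS context that presents the storage service's client certificate to Apigee."""
    # ca_bundle=None falls back to the system trust store for Apigee's server cert.
    ctx = ssl.create_default_context(cafile=ca_bundle)
    ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx


def is_uploader_token_valid(validate_url: str, uploader_token: str,
                            context: Optional[ssl.SSLContext] = None,
                            opener=urllib.request.urlopen) -> bool:
    """Ask the (hypothetical) Apigee validation proxy about a token; True only on 200."""
    query = urllib.parse.urlencode({"token": uploader_token})
    req = urllib.request.Request(f"{validate_url}?{query}", method="GET")
    try:
        with opener(req, context=context, timeout=10) as resp:
            return resp.status == 200
    except urllib.error.URLError:
        # 401/403 replies arrive as HTTPError (a URLError subclass), as do
        # network failures. Either way, fail closed: no confirmation, no upload.
        return False
```

In production the storage service would call something like `is_uploader_token_valid(url, token, context=make_mtls_context("svc.crt", "svc.key"))`; the `opener` parameter exists only so the decision logic can be exercised without a network.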

As an alternative to transport-layer (TLS) trust, you could rely on something at the message/application layer. The storage service would send its own special credential (API Key or token) to Apigee at the time it requests token validation. In this model, the storage service would call Apigee with two pieces of data: its own token, and the token provided to it by the uploader client. You would need to configure Apigee to validate both tokens: first the token from the caller ("is this caller allowed to ask me to validate a token?"), and then the relayed token from the uploader. Only if both tokens are valid would Apigee respond "200 OK" to the storage service, and then the storage service would know it should accept the upload.
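For this message-layer alternative, the storage service's request simply carries two credentials. This Python sketch assumes, hypothetically, that the service's own Apigee-issued token travels in the Authorization header as a bearer token (where the OAuthV2 policy looks by default) and that the relayed uploader token travels in a `token` query parameter. The function name and URL are illustrative only.

```python
import urllib.parse
import urllib.request


def build_validation_request(validate_url: str, service_token: str,
                             uploader_token: str) -> urllib.request.Request:
    """Build the storage service's call to a (hypothetical) Apigee validation proxy."""
    # Relayed credential: the uploader's token, carried as a query param.
    query = urllib.parse.urlencode({"token": uploader_token})
    return urllib.request.Request(
        f"{validate_url}?{query}",
        # Caller credential: the storage service's own token, as a bearer token.
        headers={"Authorization": f"Bearer {service_token}"},
        method="GET",
    )
```

Keeping the two tokens in different places means the proxy can check the caller's token with a default OAuthV2 VerifyAccessToken step and the relayed token with a second step that is pointed at the query parameter.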

The storage service needs to contact Apigee because only Apigee knows the status of the token. Only Apigee can determine whether the token is expired, valid, good for this particular file, and so on.

But you could use a different interaction pattern if you rely on public/private key cryptography. If the storage service could validate the token on its own, then you could avoid the step in which the storage service explicitly asks Apigee to validate a token. This is possible if the token is a "federated token", like a signed JWT. In that case the storage service could validate the token all by itself, without asking Apigee. To validate the JWT, the storage service needs only Apigee's public key. The interaction sequence would be: the uploader client contacts Apigee, presents credentials, and requests a JWT that would grant it "upload rights" for a specific payload. Apigee sends the generated JWT to the uploader in response. The uploader then sends its file along with the JWT to the storage service. The storage service can validate the JWT, inspect the claims, and then make an authorization decision (allow or deny the upload?) based on those claims.
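A sketch of the storage service validating such a JWT entirely on its own, assuming the third-party PyJWT and `cryptography` packages are installed and that Apigee signs with RS256. The issuer and audience values, and the `scope` and `file_sha256` claims, are hypothetical: they stand in for whatever claims Apigee is configured to issue to express "upload rights for a specific payload".

```python
import hashlib

import jwt  # PyJWT (third-party); RS256 support needs the `cryptography` package


def may_accept_upload(token: str, apigee_public_key, payload: bytes,
                      issuer: str, audience: str) -> bool:
    """Verify an Apigee-issued JWT locally, then authorize the upload from its claims."""
    try:
        claims = jwt.decode(
            token,
            apigee_public_key,          # only Apigee's PUBLIC key is needed here
            algorithms=["RS256"],       # pin the algorithm; don't trust the token header
            issuer=issuer,
            audience=audience,
            options={"require": ["exp", "iss", "aud"]},
        )
    except jwt.InvalidTokenError:       # bad signature, expired, wrong iss/aud, ...
        return False

    # Hypothetical claims expressing "upload rights for a specific payload".
    scope = claims.get("scope", "")
    if not isinstance(scope, str) or "upload" not in scope.split():
        return False
    return claims.get("file_sha256") == hashlib.sha256(payload).hexdigest()
```

Pinning `algorithms` is the important design choice: the verifier, not the token, decides which signature algorithm is acceptable.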

The "Signed URL" model that Mark mentioned, which I described in detail elsewhere, is basically the same idea, except that:

- cloud storage systems like Azure, AWS S3, and GCP Storage already support signed URL access to their storage endpoints. You don't need to "build" a storage service yourself; the existing cloud service already does this.

- the signature in these cases is not wrapped up in a JWT, but uses a different, "proprietary" signature format that is specific to each storage service.

You can still use Apigee to generate the Signed URL. The interaction sequence is similar to what I described above for a JWT:

- uploader client contacts Apigee, presents credentials, and requests a signed URL that would grant it "upload rights" for a specific payload.

- the uploader receives the signed URL.

- uploader client invokes that signed URL, passing its file as payload to the storage service.

- The storage service validates the signature in the query param, and then makes an authorization decision (allow or deny?) based on that signature.
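Since the cloud providers' signing formats are proprietary, the following is not any of them. It is a generic, stdlib-only Python sketch of the signed-URL idea, assuming (hypothetically) that Apigee and the storage service share an HMAC secret. Apigee signs the method, object name and expiry; the storage service recomputes the signature from the query params and rejects expired or tampered URLs. All names are illustrative.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def _signature(secret: bytes, object_name: str, expires: int) -> str:
    # The string-to-sign binds the method, the object and the expiry together.
    message = f"PUT\n{object_name}\n{expires}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def sign_upload_url(base_url: str, object_name: str, secret: bytes,
                    ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issuer side (Apigee in this design): mint a short-lived upload URL."""
    expires = int(time.time() if now is None else now) + ttl_seconds
    sig = _signature(secret, object_name, expires)
    return f"{base_url}?{urlencode({'object': object_name, 'expires': expires, 'sig': sig})}"


def verify_upload_url(url: str, secret: bytes, now: Optional[float] = None) -> bool:
    """Storage side: accept only unexpired URLs whose signature matches."""
    params = {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}
    try:
        object_name, expires, sig = params["object"], int(params["expires"]), params["sig"]
    except (KeyError, ValueError):
        return False
    if (time.time() if now is None else now) > expires:
        return False
    return hmac.compare_digest(_signature(secret, object_name, expires), sig)
```

Unlike the JWT approach, this uses a symmetric secret, so the storage service can verify signatures but could also mint them; that is acceptable only if the storage service is as trusted as Apigee.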

If you already have a storage service, or if you don't want to depend directly on one of the big cloud providers' storage services, then you could use the JWT model. If you don't wish to write a storage service, then you could use signed URLs and one of the pre-built services.


Hi Dino,

Thanks for the great explanation 🙂 Since I am fairly new to Apigee and its capabilities, I have been going through the guides and reference pages.

I tried a very basic AccessControl policy that accepts requests only from our storage service instances, based on the service IPs configured as CIDR ranges. My motivation is that, in the end, we will know our services' IP addresses.

Is this too basic to go with? Do you foresee any security issues with it?

Regarding signed JWTs: even though we know they are quite secure, our company has no JWT implementation in its products, so that option is pretty much out of the picture 😞

@dchiesa1 wrote:

You would need to configure Apigee to validate both tokens. First the token from the caller ("is this caller allowed to ask me to validate a token?"), and then the relayed token from the uploader.

TLS and the app-layer solution quoted above both seem promising, but one thing I could not figure out is how to validate the second token. The upload service's token will be handled by Apigee itself automatically via a PreFlow policy, e.g. ValidateAccessToken. How can I then hand over the client's (second) token, which will be somewhere in the request body or a URL param, to a validation policy?


The AccessControl policy is a good one if you know that the IP address of the caller is stable and reliable. If the caller is on a mobile phone, it will get a different IP depending on the network that it is running on, but if your storage service has a stable IP, then you can use a simple AccessControl policy to restrict access to your Apigee proxy. I don't see any issues with that.
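What the AccessControl policy does in this setup amounts to a CIDR allowlist on the caller's IP address. Apigee evaluates that declaratively in the policy, so no code is needed in the proxy; the Python sketch below only illustrates the check itself, and the example ranges are placeholders.

```python
import ipaddress
from typing import Iterable


def caller_allowed(source_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """True if source_ip falls inside any of the allowed CIDR ranges."""
    try:
        addr = ipaddress.ip_address(source_ip)
        networks = [ipaddress.ip_network(cidr) for cidr in allowed_cidrs]
    except ValueError:
        return False  # fail closed on anything unparseable
    # An IPv4 address is never "in" an IPv6 network (and vice versa).
    return any(addr in net for net in networks)
```

The check is only as trustworthy as the IP address it is given, which is why it suits a server-side caller with a stable address and not a mobile client.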

@olgunkaya wrote:

how can I validate the second token ? Upload service token will be handled by apigee itself automatically via PreFlow policy e.g ValidateAccessToken, and then how can I handover the client's/second token to a validation (since it will be somewhere in the request body or url param) policy ?

Well, if you're using AccessControl, then the IP address itself is the thing that "authenticates" the storage service, and you don't need to validate the storage service's token. The way I think of it, there are three alternatives we've discussed for authenticating the storage service:

- TLS

- An Apigee-issued Token

- the IP Address

I don't think you need more than one of those, but you might have a different opinion. Regardless of which of those you choose, or even if you choose more than one, you ALSO will want to validate the token of the uploader.

how can I handover the client's/second token to a validation (since it will be somewhere in the request body or url param) policy ?

To validate a token that is not passed in the Authorization header as a bearer token, you can use the AccessToken element in the OAuthV2 policy. For example:

<OAuthV2 name='OAuthV2-Check-Token'>
    <Operation>VerifyAccessToken</Operation>
    <!-- By default, the policy pulls the token from the Authorization header,
         as per the OAuth 2.0 spec. This setting tells the policy to retrieve
         the token-to-check from a query param. -->
    <AccessToken>request.queryparam.token</AccessToken>
</OAuthV2>

Take note: the token-checking proxy itself must be included in an API Product for which the client app is authorized.

I mean: the App has authorization for one or more API Products.

The API Product wraps one or more API Proxies.

The token-checking proxy must be one of those proxies for VerifyAccessToken to succeed. You may already be aware of this.
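The App → API Product → API Proxy relationship can be pictured as a toy Python model of just this one rule. It is not Apigee's implementation or API, and all names are hypothetical; it only shows why a token can be perfectly valid and still fail VerifyAccessToken in a proxy that none of the app's products include.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ApiProduct:
    name: str
    proxies: frozenset  # names of the API proxies bundled in this product


@dataclass
class App:
    name: str
    products: list = field(default_factory=list)  # products the app is authorized for


def verify_can_succeed(app: App, checking_proxy: str) -> bool:
    """True if the proxy running VerifyAccessToken is in one of the app's products."""
    return any(checking_proxy in product.proxies for product in app.products)
```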


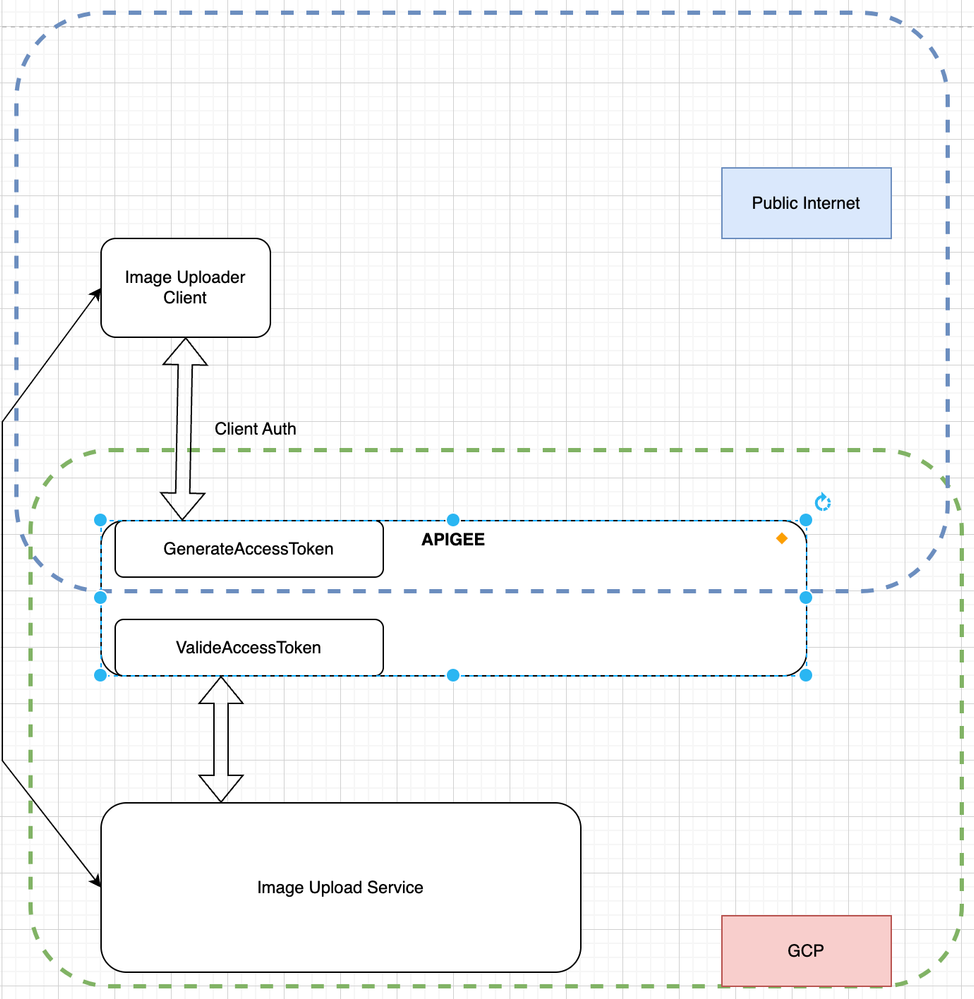

Although I already accepted this one as a great answer. My last thought is if somehow I can restrict the endpoint (image service's token validation endpoint) to public (not even visible) and make it only available to my service deployed in my GCP/GKE instance. I tried to illustrate below 🙂

Can this be achieved via an "Internal only" product definition?

From the product creation page, the "Access" options are:

- Private: Visible only to external developers with explicit permission during app registration

- Public: Visible to any registered developer during app registration

- Internal only: Visible only to developers of this org during app registration


Yes, you can do that.

One way is to deploy the Validate API in a different Apigee environment, attached to a separate environment group that is accessible only from the GCP-internal network. This page talks about creating an additional environment. Follow steps 7 and 8 to get an environment that is accessible for "internal only" access.

Another thing you can do, either as a complement to or as an alternative to configuring the access routing, is to check a GCP access token. Because the Image Upload service is running in GCP, that service itself will have a GCP identity and can easily obtain an access token. It can do so by sending a GET, with the request header Metadata-Flavor: Google, to the well-known endpoint: http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/token
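A minimal Python sketch of that call from the upload service. The `Metadata-Flavor: Google` header is required by the metadata server, which refuses requests without it. The function name is hypothetical, and the injectable `opener` parameter is only there so the function can be exercised outside GCP.

```python
import json
import urllib.request

# Metadata server endpoint from the post; reachable only from inside GCP.
METADATA_TOKEN_URL = ("http://metadata.google.internal/computeMetadata/v1/"
                      "instance/service-accounts/default/token")


def fetch_gcp_access_token(opener=urllib.request.urlopen) -> str:
    """Return an access token for the workload's default service account."""
    req = urllib.request.Request(METADATA_TOKEN_URL,
                                 headers={"Metadata-Flavor": "Google"})
    with opener(req, timeout=5) as resp:
        body = json.load(resp)  # {"access_token": ..., "expires_in": ..., "token_type": ...}
    return body["access_token"]
```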

The result will be something like

{
  "access_token": "ya29.a0AeTM...redacted...iA0174",
  "expires_in": 3226,
  "token_type": "Bearer"
}

The first member there is a GCP-issued access token. The upload service can then send that access_token to your validate endpoint running in Apigee. The Apigee proxy that handles that request cannot validate a GCP-issued access token with the OAuthV2 policy, which verifies only tokens that Apigee itself issued. Instead, the Apigee proxy can validate the GCP-issued token by sending a GET to the GCP tokeninfo endpoint: https://www.googleapis.com/oauth2/v3/tokeninfo

...passing an access_token query param with the value of that access token. Do this from within Apigee via the ServiceCallout policy. With a bona fide token, the result will be 200 OK, with a payload something like

{
  "azp": "102462798848081388931",
  "aud": "102462798848081388931",
  "scope": "https://www.googleapis.com/auth/cloud-platform",
  "exp": "1670603948",
  "expires_in": "3411",
  "access_type": "online"
}

If you get a non-200, then you know the token is no good, so the Validate API should reject the validate call. If you get a 200, then the Apigee proxy needs to compare the azp with the known client_id corresponding to the identity of the Image Uploader Service. If they match then the Apigee proxy "knows" that the inbound request to validate an Apigee token came from the GCP-based uploader service. So the proxy should proceed with the validation of the uploader client token.
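Inside Apigee, this check would be a ServiceCallout to tokeninfo followed by a condition on the response. The Python sketch below only mirrors that logic so it is easy to read and test; it is not Apigee configuration. The function name is hypothetical, and `expected_client_id` is a placeholder for the numeric client ID of the uploader service's service account.

```python
import json
import urllib.error
import urllib.parse
import urllib.request

TOKENINFO_URL = "https://www.googleapis.com/oauth2/v3/tokeninfo"


def is_trusted_uploader_service(gcp_access_token: str, expected_client_id: str,
                                opener=urllib.request.urlopen) -> bool:
    """True only if tokeninfo accepts the token AND its azp is the uploader service."""
    query = urllib.parse.urlencode({"access_token": gcp_access_token})
    try:
        with opener(urllib.request.Request(f"{TOKENINFO_URL}?{query}"), timeout=5) as resp:
            if resp.status != 200:
                return False
            info = json.load(resp)
    except urllib.error.URLError:
        # Non-2xx replies surface as HTTPError (a URLError subclass): reject.
        return False
    # A valid token alone is not enough; it must belong to the expected identity.
    return info.get("azp") == expected_client_id
```

Only when this returns True would the proxy go on to verify the uploader client's Apigee token.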
