Re: Apigee Hybrid on Anthos GKE on prem

Hi Folks,

Thanks in advance if someone can provide any guidance.

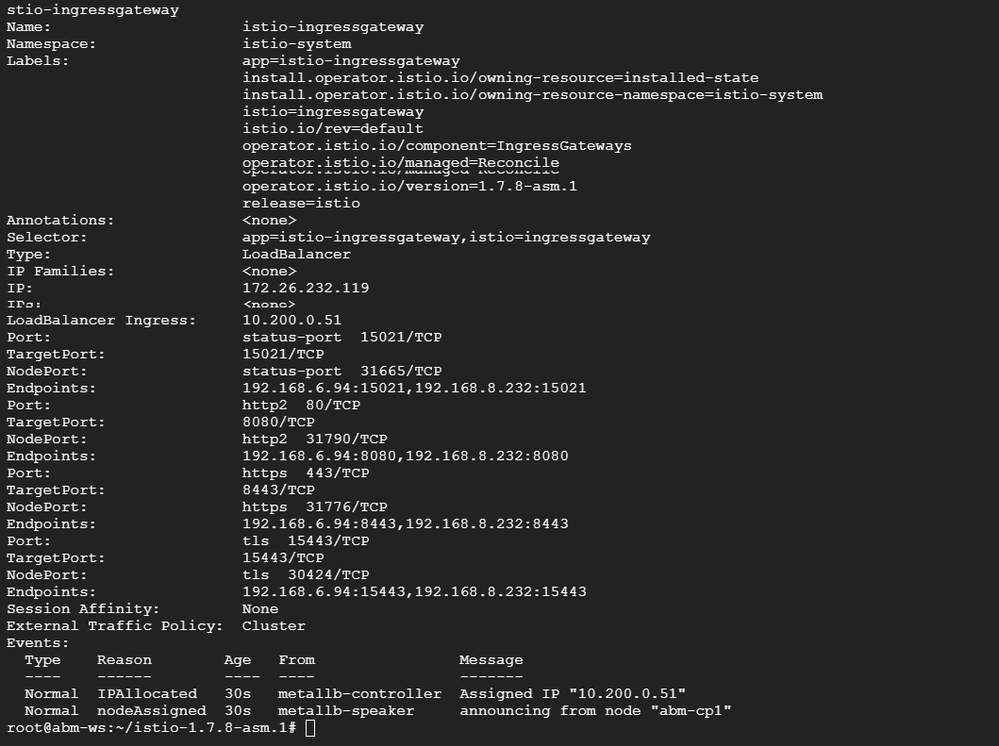

The describe svc output for the istio-ingressgateway service should give you the reason(s) why the load balancer is not provisioned:

kubectl describe svc -n istio-system istio-ingressgateway

Hey

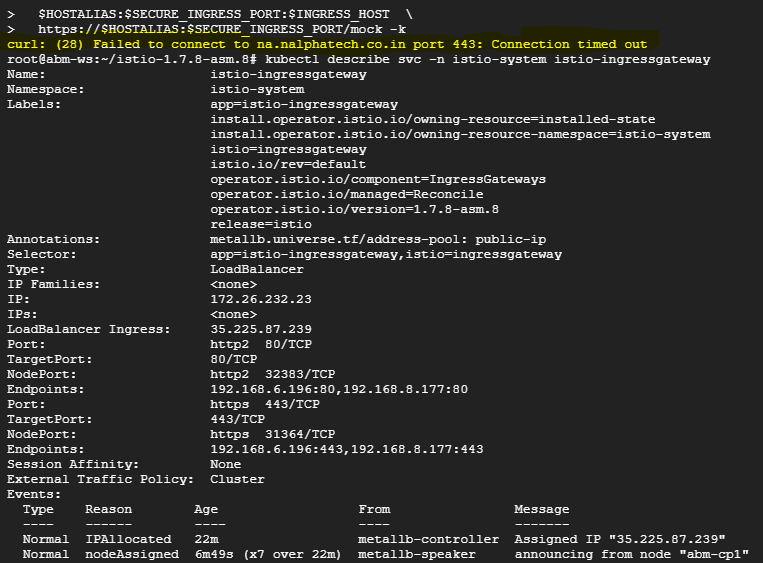

Thanks for the reply. After running the above command I get this description (a screenshot is attached).

To give a clearer picture of my question:

I'm done with the setup of Apigee hybrid on Anthos, but I'm not able to hit the APIs from outside using a REST client tool (the API service is not reachable). Could you suggest a way to get traffic into my cluster from the outside world?

The output of your describe command says that MetalLB correctly provisioned a load balancer with a Private Network IP (10.200.0.51).

As you said, you're done with the Apigee hybrid install. What remains is your on-prem networking configuration.

Because it's an on-prem setup, the details depend on how your networking team defines those processes.

We can see from the output that MetalLB is responsible for the load balancer.

Therefore, to expose your ingress gateway externally, you would need to follow the https://metallb.universe.tf/concepts/ page and configure a pool of external IP addresses from which MetalLB can allocate an external IP.

Then you add service annotation to use that pool:

https://metallb.universe.tf/usage/#requesting-specific-ips

    kind: Service
    metadata:
      ...
      annotations:
        metallb.universe.tf/address-pool: production-public-ips
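For illustration, a pool that this annotation refers to might be declared as follows in the ConfigMap format used by upstream MetalLB releases of that time (newer releases use custom resources instead). This is only a sketch under assumptions: the range 192.0.2.0/28 is a documentation placeholder, layer2 mode is assumed, and on Anthos clusters with the bundled load balancer the pools are normally managed through the cluster configuration rather than by editing this ConfigMap directly.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    # Pool name matches the value of the Service annotation.
    - name: production-public-ips
      protocol: layer2        # assumption: L2/ARP mode
      addresses:
      - 192.0.2.0/28          # placeholder range, replace with your own
```

The pool only helps if the addresses in it are actually routable to the cluster nodes from wherever the clients are.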

Hi,

Thanks for the load balancer suggestions. Following your guidance, we tried working with MetalLB in the Anthos cluster by creating a config file and applying the changes with kubectl. We ran into problems with two different ways of setting up the Anthos cluster load balancer:

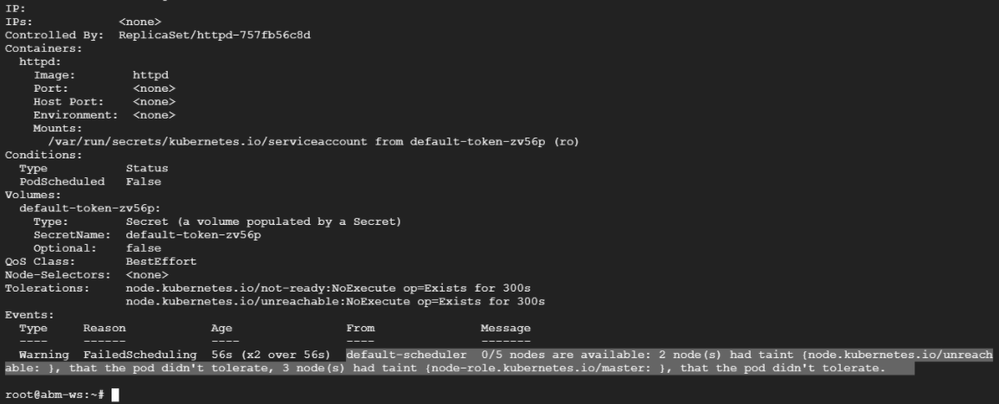

1. We set up the Anthos cluster and installed MetalLB in it, and when we try to apply the config file we get the error below:

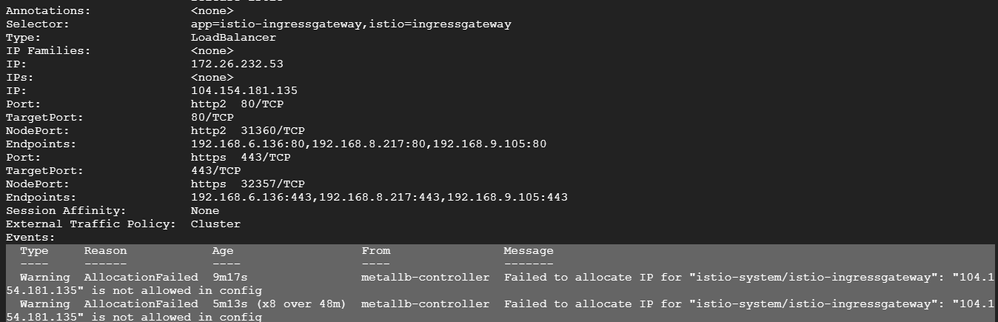

2. We followed this repo, https://github.com/yuriylesyuk/ahr/wiki/Anthos-On-Prem-Apigee-Hybrid-1.3-AHR-Manual, in our configured Anthos cluster and entered the reserved static IP as RUNTIME_IP, and we get the following issue:

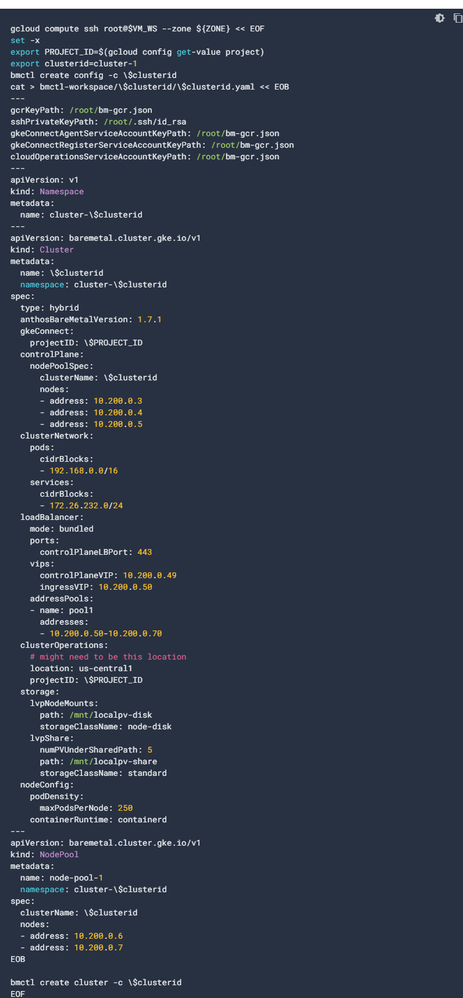

NOTE: FYI, in both setups of the Anthos cluster we're following this six-VM doc, https://cloud.google.com/anthos/clusters/docs/bare-metal/1.7/try/gce-vms, where we create the Anthos cluster using bmctl with the pre-written config file, in which the load balancer type is bundled.

If we're making an error in creating the Anthos cluster, or we have to make any changes in the config file, then please help me with that.

Any kind of suggestion or change would be of great help.

I provisioned a public static IP address:

gcloud compute addresses create runtime-ip --region $REGION

Then I added a load balancer's address pool in my cluster:

kubectl -n cluster-cluster-1 edit cluster cluster-1
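The address pool added in that edit might look roughly like the fragment below. This is a sketch, not the exact change that was made: the first pool's name and range are assumed to be those from the GCE VM tutorial, the pool name public-ip is taken from the annotation step that follows, and 203.0.113.10 is a placeholder for the reserved static IP.

```yaml
apiVersion: baremetal.cluster.gke.io/v1
kind: Cluster
metadata:
  name: cluster-1
  namespace: cluster-cluster-1
spec:
  # ... other fields left unchanged ...
  loadBalancer:
    mode: bundled
    addressPools:
    # Existing private pool (name and range are assumptions).
    - name: pool1
      addresses:
      - 10.200.0.50-10.200.0.70
    # Additional pool holding the reserved static IP (placeholder address).
    - name: public-ip
      addresses:
      - 203.0.113.10/32
```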

I edited istio-operator.yaml so that the service annotation refers to the public-ip address pool.

Then I provisioned ASM/Istio.
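A minimal sketch of what such an istio-operator.yaml edit could look like, assuming the ingress gateway component is named istio-ingressgateway and the pool is called public-ip; the rest of the file would stay as generated by the ASM installation.

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  components:
    ingressGateways:
    - name: istio-ingressgateway   # assumed component name
      enabled: true
      k8s:
        # Ask MetalLB to allocate the gateway's external IP from this pool.
        serviceAnnotations:
          metallb.universe.tf/address-pool: public-ip
```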

As a result, I can see my public IP allocated at istio ingress gateway as an external IP:

I tried reserving a static IP and then added it as public-ip in the load balancer section of the cluster config file. After that I edited the ASM/Istio file and provisioned it with the changes. This installed the Istio core ingress service into the cluster, and I got the external IP attached to the istio-ingressgateway.

But our main objective is to get external traffic into our cluster so that I can hit the API proxies. When I tried hitting an API after following the procedure, it failed with a connection timeout error.

Note: this time we are also unable to hit the proxies from inside the admin VM, which was possible last time, when we had not set up any reserved external IP.

OK, let me backtrack slightly from my last answer.

I've discussed this issue with my colleagues, @NICOLA and @Daniel Strebel, and combining our experiences, the current situation looks like this:

It seems that Anthos bare metal with MetalLB on GCE nodes cannot directly expose traffic on a public static IP. The reason is that the setup relies on a VXLAN implementation, and MetalLB's announcements cannot cross the L2 boundary [either via L2/ARP or BGP].

For details, see https://metallb.universe.tf/installation/clouds/

We might try to ask ABM/MetalLB experts, but our analysis leads to this conclusion.

That said, I believe the question of exposing the Apigee API externally [to the Internet] can be solved in many different ways on GCP.

A more complex one, production-ready, scalable, with Cloud Armor for WAF, is described here:

https://cloud.google.com/apigee/docs/api-platform/get-started/install-cli#external, Configuring External Routing. Please don't be distracted by the fact that it's Apigee X [not Hybrid] documentation. The starting point is the same: we have an Apigee endpoint we want to expose to the Internet.

A simpler one, suitable for quick experiments, demo-friendly, cheap and cheerful, consists of these steps:

0. Reconfigure the MetalLB pool back to the original one, so that you can successfully call your APIs from the abm-ws VM.

* We are going to use abm-ws as a proxy machine, with Envoy implementing a reverse TCP proxy.

* We exploit the fact that abm-ws already has Docker installed.

* Make sure that abm-ws has the https-server network tag and the Firewalls/Allow HTTPS traffic checkbox ticked.

1. Log into the abm-ws VM.

2. Create an envoy.yaml file:

    admin:
      address:
        socket_address: { address: 127.0.0.1, port_value: 9901 }

    static_resources:
      listeners:
      - name: apigee_listener
        address:
          socket_address: { address: 0.0.0.0, port_value: 443 }
        filter_chains:
        - filters:
          - name: envoy.tcp_proxy
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy
              stat_prefix: ingress_tcp
              cluster: "apigee"
      clusters:
      - name: apigee
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: apigee-endpoints
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: 10.200.0.51
                    port_value: 443

Change endpoint.address.socket_address.address as appropriate; it should be the private IP that MetalLB assigned to your istio-ingressgateway (10.200.0.51 in this thread).

3. Pull an Envoy image:

docker pull envoyproxy/envoy:v1.18-latest

4. Run Envoy:

docker run --rm -d -p 443:443 -p 9901:9901 --name envoy -v $(pwd)/envoy.yaml:/etc/envoy/envoy.yaml -e ENVOY_UID=$(id -u) envoyproxy/envoy:v1.18-latest

5. Make a note of the public IP of the abm-ws VM and use it as $RUNTIME_IP. Now you can execute a request from anywhere:

curl -k https://$RUNTIME_HOST_ALIAS/ping -v --resolve $RUNTIME_HOST_ALIAS:443:$RUNTIME_IP

    * Added bm-poc-yyy-hybrid-apigee.net:443:34.xxx.yy.zzz to DNS cache
    * Hostname bm-poc-yyy-hybrid-apigee.net was found in DNS cache
    *   Trying 34.xxx.yyy.zzz...
    * TCP_NODELAY set
    * Connected to bm-poc-yyy-hybrid-apigee.net (34.xxx.yy.zzz) port 443 (#0)
    * ALPN, offering h2
    * ALPN, offering http/1.1
    * successfully set certificate verify locations:
    ...
    * Server certificate:
    *  subject: CN=api.exco.com
    *  start date: May 18 23:40:41 2021 GMT
    *  expire date: Jun 17 23:40:41 2021 GMT
    *  issuer: CN=api.exco.com
    *  SSL certificate verify result: self signed certificate (18), continuing anyway.
    * Using HTTP2, server supports multi-use
    * Connection state changed (HTTP/2 confirmed)
    * Copying HTTP/2 data in stream buffer to connection buffer after upgrade: len=0
    * Using Stream ID: 1 (easy handle 0x7fe3ce00ce00)
    > GET /ping HTTP/2
    > Host: bm-poc-yyy-hybrid-apigee.net
    > User-Agent: curl/7.64.1
    > Accept: */*
    >
    * Connection state changed (MAX_CONCURRENT_STREAMS == 2147483647)!
    < HTTP/2 200
    < host: bm-poc-yyy-hybrid-apigee.net
    < user-agent: curl/7.64.1
    ...
    < date: Thu, 03 Jun 2021 21:28:17 GMT
    < server: istio-envoy
    <
    * Connection #0 to host bm-poc-yyy-hybrid-apigee.net left intact
    pong
    * Closing connection 0

Thanks, @ylesyuk and @nicola, for your support and for bringing my Anthos bare metal setup on six VMs to a great conclusion.

Your solution helped me a lot and worked for me.
