Hi All,

Is there any way to find duplicate events ingested into Chronicle? If so, could you please share more information?

With Regards,

Shaik Shaheer

- Labels: Ingestion

@jstoner @mikewilusz @manthavish - Could you please help me identify duplicate logs in Chronicle?

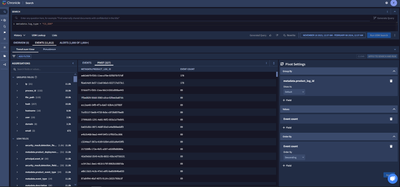

This should be possible using a pivot table, provided that the log contains a unique identifier (Global Event ID, Event ID, Log ID, etc.). The following example uses Google Chronicle's demo instance and the 'Crowdstrike Falcon' log source, where the UDM field containing each event's unique identifier is "metadata.product_log_id".

[1] - First, search for the log type you want. For 'Crowdstrike Falcon', the query is: metadata.log_type = "CS_EDR"

[2] - Navigate to 'Pivot'

[3] - Apply the Pivot settings shown in the screenshot (grouping by the unique identifier).

[4] - Click the options menu (":"), export the data to a .csv file, and remove every row with a count of "1" (if you sort in descending order, these will be at the bottom) :).

This shows the event count for each value of the grouped UDM field. Here we assume that metadata.product_log_id is a unique identifier for each 'CS_EDR' log, so any value with a count above 1 points to a likely duplicate. Depending on how often you need this check, a dashboard may be better suited.
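If the pivot results are exported regularly, the manual filtering in step [4] can be scripted. Below is a minimal Python sketch, assuming the exported CSV has one column holding the grouped field (`metadata.product_log_id`) and one holding the count. The count column name `event_count` is a hypothetical placeholder, so check the header of your actual export before using it.

```python
import csv
import io
from typing import Iterable

# Hypothetical column names: adjust to match the header of your actual pivot export.
ID_COLUMN = "metadata.product_log_id"
COUNT_COLUMN = "event_count"


def find_duplicate_ids(rows: Iterable[dict]) -> list[tuple[str, int]]:
    """Return (id, count) pairs whose count is greater than 1, highest count first."""
    duplicates = []
    for row in rows:
        count = int(row[COUNT_COLUMN])
        if count > 1:  # a count of 1 means the identifier was seen only once
            duplicates.append((row[ID_COLUMN], count))
    # Sort descending by count so the most-duplicated identifiers come first.
    duplicates.sort(key=lambda pair: pair[1], reverse=True)
    return duplicates


def find_duplicates_in_csv(path: str) -> list[tuple[str, int]]:
    """Read a pivot export from disk and return only the duplicated identifiers."""
    with open(path, newline="", encoding="utf-8") as handle:
        return find_duplicate_ids(csv.DictReader(handle))


if __name__ == "__main__":
    # Small in-memory sample standing in for a real export (made-up values).
    sample = io.StringIO(
        "metadata.product_log_id,event_count\n"
        "abc,1\n"
        "def,3\n"
        "ghi,2\n"
    )
    print(find_duplicate_ids(csv.DictReader(sample)))  # [('def', 3), ('ghi', 2)]
```

This still depends on someone producing the export; it only removes the step of scanning the spreadsheet by hand.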

Hope this helps!

Hi Ayman C,

Greetings...!!!

Thank you for your suggestion; we tried this method. However, it makes the analyst's job tedious, as they have to manually export and check the logs individually. Is there an automated alternative?

With Regards,

Shaik Shaheer

Hi Shaik,

Google Chronicle SIEM customers can leverage several automation strategies to check for duplicate ingested data. Here's a breakdown:

1. Hash-Based Deduplication

- Mechanism:
  - Calculate a cryptographic hash (e.g., MD5, SHA-256) of the essential components of each event (for example, a combination of timestamp, source IP, and other key fields).
  - Store the hash values in a fast-access data structure, such as a hash table or a Bloom filter.
  - Before ingesting a new event, check its hash against the stored values. If a match is found, the event is likely a duplicate.
- Pros:
  - Reliable for detecting exact duplicates.
  - Can be implemented at the pipeline level.
- Cons:
  - Even a minor change to an event produces a different hash, so near-duplicates are missed (false negatives).
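A minimal Python sketch of the hash-based approach, assuming events arrive as dictionaries before ingestion and that `timestamp`, `src_ip` and `message` are the key fields (hypothetical names; pick whatever identifies an event in your own data). It uses SHA-256 and an in-memory set as the hash table; a real pipeline would need persistent or shared storage for the seen hashes.

```python
import hashlib
import json
from typing import Iterable, Iterator

# Hypothetical key fields; choose the fields that identify an event in your own logs.
KEY_FIELDS = ("timestamp", "src_ip", "message")


def event_fingerprint(event: dict, fields: tuple[str, ...] = KEY_FIELDS) -> str:
    """SHA-256 over a canonical JSON encoding of the selected fields."""
    subset = {name: event.get(name) for name in fields}
    # sort_keys + compact separators make the encoding independent of dict order.
    canonical = json.dumps(subset, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def drop_exact_duplicates(events: Iterable[dict]) -> Iterator[dict]:
    """Yield each event the first time its fingerprint is seen; skip later copies."""
    seen: set[str] = set()  # in-memory hash table of fingerprints already ingested
    for event in events:
        digest = event_fingerprint(event)
        if digest in seen:
            continue  # same key fields as an earlier event: treat as a duplicate
        seen.add(digest)
        yield event


if __name__ == "__main__":
    events = [
        {"timestamp": "2024-01-01T00:00:00Z", "src_ip": "10.0.0.5", "message": "login"},
        {"timestamp": "2024-01-01T00:00:00Z", "src_ip": "10.0.0.5", "message": "login"},
        {"timestamp": "2024-01-01T00:00:01Z", "src_ip": "10.0.0.5", "message": "login"},
    ]
    print(len(list(drop_exact_duplicates(events))))  # 2
```

Because only the selected fields are hashed, two events that differ only outside `KEY_FIELDS` are treated as duplicates, while any change inside those fields yields a new hash; that is the false-negative trade-off listed under Cons.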

2. Similarity Detection with Chronicle Rules

- Mechanism:
  - Create Chronicle detection rules that compare essential event fields using similarity thresholds. Consider features like:
    - Near-matching timestamps
    - Similar IP addresses (perhaps within the same subnet)
    - Matching key fields (e.g., usernames, file names)
  - Optionally, use fuzzy matching or string comparison algorithms (e.g., Levenshtein distance) for more flexible comparisons.
- Pros:
  - Detects duplicates even with slight modifications.
  - Leverages Chronicle's built-in rule engine, making it accessible to security analysts.
- Cons:
  - Can be resource-intensive with large data volumes.
  - Requires careful rule tuning to avoid false positives.
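To make the comparison logic concrete, here is a small Python sketch of a pairwise near-duplicate check. It is not a Chronicle rule (those are written in YARA-L); it only illustrates the three criteria above. The field names (`timestamp`, `src_ip`, `user`, `file_name`) and the thresholds are hypothetical and would need tuning.

```python
import ipaddress
from datetime import datetime, timedelta

# Hypothetical thresholds; tune them against your own data to limit false positives.
MAX_TIME_GAP = timedelta(seconds=5)
SUBNET_PREFIX = 24
MAX_EDIT_DISTANCE = 2


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,           # deletion
                               current[j - 1] + 1,        # insertion
                               previous[j - 1] + cost))   # substitution
        previous = current
    return previous[-1]


def same_subnet(ip_a: str, ip_b: str, prefix: int = SUBNET_PREFIX) -> bool:
    """True if ip_b lies in the network of the given prefix length around ip_a."""
    net = ipaddress.ip_network(f"{ip_a}/{prefix}", strict=False)
    return ipaddress.ip_address(ip_b) in net


def is_near_duplicate(a: dict, b: dict) -> bool:
    """Near-duplicate if timestamps are close, IPs share a subnet, users match,
    and file names differ by at most MAX_EDIT_DISTANCE characters."""
    gap = abs(a["timestamp"] - b["timestamp"])
    return (
        gap <= MAX_TIME_GAP
        and same_subnet(a["src_ip"], b["src_ip"])
        and a["user"] == b["user"]
        and levenshtein(a["file_name"], b["file_name"]) <= MAX_EDIT_DISTANCE
    )


if __name__ == "__main__":
    base = datetime(2024, 1, 1, 12, 0, 0)
    a = {"timestamp": base, "src_ip": "10.0.0.5", "user": "alice", "file_name": "report.pdf"}
    b = {"timestamp": base + timedelta(seconds=2), "src_ip": "10.0.0.9",
         "user": "alice", "file_name": "report1.pdf"}
    print(is_near_duplicate(a, b))  # True
```

Comparing every pair of events is quadratic in the number of events, which is one concrete reason this approach becomes resource-intensive at large volumes.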

3. External Data Deduplication

- Mechanism:
  - Send a stream of normalized events or pre-calculated hashes to a dedicated deduplication service, or use a log management/SIEM platform with native deduplication features.
- Pros:
  - Offloads computation from Chronicle.
  - Potentially more advanced deduplication algorithms and centralized management.
- Cons:
  - Adds complexity to the data pipeline.
  - Might introduce latency.
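As a rough illustration of what a small external deduplication stage might do, the Python sketch below hashes each raw event and drops copies seen within a sliding time window, which keeps memory bounded on an endless stream. The class name `WindowedDeduplicator`, the window length and the exact-byte hashing are assumptions for illustration; this is not a specific product or a Chronicle feature.

```python
import hashlib
from collections import OrderedDict


class WindowedDeduplicator:
    """Hypothetical stand-alone dedup stage placed in front of the SIEM.

    Each fingerprint is remembered for `window_seconds` after it was first seen.
    Callers must pass non-decreasing `now` values (e.g. from time.monotonic()).
    """

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        # fingerprint -> time first seen; insertion order matches arrival order
        self._seen: "OrderedDict[str, float]" = OrderedDict()

    def _expire(self, now: float) -> None:
        # Oldest entries sit at the front; drop them until one is inside the window.
        while self._seen:
            oldest_first_seen = next(iter(self._seen.values()))
            if now - oldest_first_seen < self.window_seconds:
                break
            self._seen.popitem(last=False)

    def accept(self, raw_event: bytes, now: float) -> bool:
        """Return True to forward the event, False if it duplicates a recent one."""
        self._expire(now)
        fingerprint = hashlib.sha256(raw_event).hexdigest()
        if fingerprint in self._seen:
            return False
        self._seen[fingerprint] = now
        return True


if __name__ == "__main__":
    dedup = WindowedDeduplicator(window_seconds=60)
    print(dedup.accept(b"event-A", now=0))   # True
    print(dedup.accept(b"event-A", now=10))  # False
    print(dedup.accept(b"event-A", now=61))  # True (the first copy has aged out)
```

The window is the main design choice: a longer window catches late duplicates but holds more fingerprints in memory, and any copy that arrives after the window is forwarded again.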
