- Google Cloud

- Articles & Information

- Community Blogs

- Looker x GenAI: BigQuery Semantic Search Block

Looker x GenAI: BigQuery Semantic Search Block

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Introduction

- What is Semantic Search in BigQuery?

- What does the Looker Block enable for customers?

- What are example use cases for this Block?

- How can I configure the block in my own Looker instance?

- What are different ways I can use the block?

Introduction

In this post, we'll introduce our latest addition to our Looker x GenAI open-source solution, the Looker BigQuery Semantic Search block. This block uses BigQuery Machine Learning (BQML) and a large language model to enable vector searching for structured or unstructured data stored in your database.

What is Semantic Search in BigQuery?

BigQuery now offers vector search capabilities, also known as approximate nearest-neighbor or semantic search. This feature enables users to perform vector similarity search on their BigQuery data.

The process involves:

- Converting the input query into a vector using a large language model from Vertex AI. This ensures the query and stored data share the same vector space.

- Utilizing the

VECTOR_SEARCHfunction in BigQuery to efficiently identify vectors most similar to the query vector. An optimized index structure facilitates this process. - Mapping the similar vectors back to their corresponding text data, representing the most relevant information from the knowledge base.

Key features of BigQuery's vector search:

- Simple SQL syntax with CREATE VECTOR INDEX

CREATE VECTOR INDEXandVECTOR_SEARCHfunctions. - Compatibility with BigQuery's embedding generation capabilities and externally generated embeddings.

- Automatic updates to the vector index as the underlying data changes.

- Optimization for analytical workloads, offering efficient batch processing and low-latency online predictions.

This semantic search functionality opens up new possibilities in BigQuery, such as:

- Retrieval-augmented generation (RAG): Retrieve relevant data to provide context for a large language model.

- Semantic document search: Identify semantically similar documents, even if they don't contain the same words.

- Multimodal AI applications: Combine structured data, unstructured data, and generative AI models.

In essence, BigQuery's vector search powered by Vertex AI empowers users to harness advanced AI and ML capabilities. This enables sophisticated semantic search and retrieval on enterprise data, unlocking new and innovative data-driven use cases.

What does the Looker Block enable for customers?

With the Looker block, customers can directly utilize BigQuery's semantic search capabilities within their Looker instance. End users can dynamically create searches and perform similarity matching in real time. These functions execute at query time, enabling searches on any field or combination of fields in a Looker Explore, accommodating any user's classification queries. This capability unlocks numerous use cases related to semantic searching, affording customers endless possibilities.

What are example use cases for this Block?

Implementing semantic search on eCommerce data opens up many valuable applications for generating insights and utilizing the data. For instance, using a natural language prompt like "90's punk rock" or "country chic," we can instruct BigQuery to scan through tens of thousands of SKUs and pinpoint products that closely align with the prompt. The results are ranked by relevance, enabling us to refine or broaden the search as desired. This capability unlocks users' potential to discover innovative opportunities and fully utilize their data, enabling them to achieve greater outcomes.

Now, we have a tailored list of products that pertain to our search that we can begin to activate:

- Matched segment analysis. We can analyze how this custom product segment has performed historically.

- Revenue: How much revenue has this custom product segment generated historically?

- Top Brands/Product Categories: Identify the top-selling brands and product categories associated with this segment.

- Profitability Comparison: Compare the profitability of this segment to other segments within the business.

- Forecasting. In addition, we can leverage forecasting techniques to gain insights into the matched products.

- Seasonality. Analyze the seasonality of sales for this segment.

- Future performance. Assess how the products will perform over the next 6-12 months.

- Trends. Identify any upward or downward trends.

- Adapt in real-time. Leverage current trends by aligning products and target audiences with trending topics in the present.

- Audience building.

- Analyze customer engagement metrics (page views, purchases) to spot customers interested in specific products.

- Estimate the potential market size and pinpoint openings for targeted marketing

- Leverage identified audience and optimize product mix to enhance the campaign's effectiveness.

Before these semantic search functionalities, these use cases required manual efforts and couldn't be accomplished dynamically in real time. However, now, any business user can seamlessly input a natural language prompt and instantly receive their product segment and related audience. This capability empowers users to effortlessly test new ideas and perform rapid analysis during the process.

How can I configure the block in my own Looker instance?

Pre-Requisites

Before getting started, please ensure the following steps have been completed:

- Your user OR the Service Account being used has these permissions in a Google Cloud Project.

- Additionally following API’s have been enabled in your Google Cloud Project:

- BigQuery

- BigQuery Connection

- Vertex AI

- A Remote Connection in BigQuery has been configured and the service account provisioned has the `Vertex AI User` role assigned.

- You have dev access to a Looker Instance, that Looker instance has a BigQuery connection setup, and the Service Account for that connection has the “BigQuery Connection User” role

Setup Looker / BQML Semantic Search Block

- Navigate to the open source GitHub repository.

- Clone or Fork the repository to your personal or org’s GitHub

- Follow the steps for creating a Blank LookML Project

- Connect the prior git repo to your Looker project & pull in the source code files

*To Note: the block uses a public ecomm dataset by default. You will need to adopt this pattern to your own dataset in production.

Understanding the Semantic Search Views

In the `views/product_semantic_search.view.lkml` file you will see a few different LookML view objects. We will describe these in further detail.

Product Embeddings Model

view: product_embeddings_model {

derived_table: {

datagroup_trigger: ecomm_monthly

sql_create:

CREATE OR REPLACE MODEL ${SQL_TABLE_NAME}

REMOTE WITH CONNECTION `@{BQML_REMOTE_CONNECTION_ID}`

OPTIONS (ENDPOINT = 'textembedding-gecko@002');

;;

}

}This view uses the `sql_create` command (what we can use to run DDL in LookML) to create a BQML Model using the existing name of the view. This model uses the remote connection established earlier to connect to the foundational Embeddings Model on Vertex AI.

If you are not familiar with remote connections, they are essentially a way for BigQuery to connect to external resources (like API’s, Models, Webhooks, etc.), more often than not to augment and/or enrich existing data directly in the database!

Now this model really only needs to be created one time, so feel free to modify the `datagroup_trigger` to a much longer interval of preference.

Product Embeddings

view: product_embeddings {

derived_table: {

datagroup_trigger: ecomm_daily

publish_as_db_view: yes

sql_create:

-- This SQL statement creates embeddings for all the rows in the given table (in this case the products lookml view) --

CREATE OR REPLACE TABLE ${SQL_TABLE_NAME} AS

SELECT ml_generate_embedding_result as text_embedding

, * FROM ML.GENERATE_EMBEDDING(

MODEL ${product_embeddings_model.SQL_TABLE_NAME},

(

SELECT *, name as content

FROM ${products.SQL_TABLE_NAME}

)

)

WHERE LENGTH(ml_generate_embedding_status) = 0; ;;

}

}Here we are again using the `sql_create` command, this time to create a table to hold our product embeddings. BQML has a few different functions to invoke Models and each contain their own parameters/arguments. `ML.GENERATE_EMBEDDING` is how we create vector embeddings for our data directly in the database. No longer do you need to export data or bring it into a dataframe just to generate embeddings.

What we are doing here is generating embeddings for each row in our product table. This product table might contain metadata like descriptions, categories, brands, and a product name among other things all which can be used to help us match a natural language query to a set of recommended offerings.

Product Embeddings Index

view: product_embeddings_index {

derived_table: {

datagroup_trigger: ecomm_monthly

sql_create:

-- This SQL statement indexes the embeddings for fast lookup. We specify COSINE similarity here --

CREATE OR REPLACE VECTOR INDEX ${SQL_TABLE_NAME}

ON ${product_embeddings.SQL_TABLE_NAME}(text_embedding)

OPTIONS(index_type = 'IVF',

distance_type = 'COSINE',

ivf_options = '{"num_lists":500}') ;;

}

}Now just because we have our embeddings doesn’t mean that we can begin searching right away. Imagine if our product table had millions of SKU’s. After generating embeddings for each of those SKU’s we need a way to ensure that our search data is indexed to give us our recommendations fast and timely. The Product Embeddings Index view does just that. We create an index on the `text_embedding` column from the prior view and specify a few options like “index_type” “distance_type” and “ivf_options” which allow us to fine tune the search algorithm as an every day SQL user.

Product Embeddings Search

view: product_semantic_search {

derived_table: {

sql:

-- This SQL statement performs the vector search --

-- Step 1. Generate Embedding from natural language question --

-- Step 2. Specify the text_embedding column from the embeddings table that was generated for each product in this example --

-- Step 3. Use BQML's native Vector Search functionality to match the nearest embeddings --

-- Step 4. Return the matche products --

SELECT query.query

,base.name as matched_product

,base.id as matched_product_id

,base.sku as matched_product_sku

,base.category as matched_product_category

,base.brand as matched_product_brand

,distance

FROM VECTOR_SEARCH(

TABLE ${product_embeddings.SQL_TABLE_NAME}, 'text_embedding',

(

SELECT ml_generate_embedding_result, content AS query

FROM ML.GENERATE_EMBEDDING(

MODEL ${product_embeddings_model.SQL_TABLE_NAME},

(SELECT {% parameter product_description %} AS content)

)

),

top_k => {% parameter product_matches %}

,options => '{"fraction_lists_to_search": 0.5}'

) ;;

}

....LookML fields defined belowNow how do we actually match a natural language query to this large table of random vector embeddings? Well, without having to dive head first into the math powering this all BigQuery provides us with a handy function 'VECTOR_SEARCH()' that allows us to implement this all within SQL!

To keep it simple, the VECTOR_SEARCH() function takes in among a few others:

- Table containing vector embeddings to search

- The actual column from that table to search (this is the indexed column)

- A subquery that contains an embedding, and the corresponding natural language query

- The number of matches to return specified with `top_k`

- An `options` variable for additional customization

There are also a few tasteful LookML additions in this SQL snippet that are worth pointing out.

-

{% parameter product_description %}- If you are not familiar with Liquid syntax, this is how we make SQL and LookML dynamic. Specifically the product description parameter allows us to search for products matching a given description, all done through the Looker Frontend without having to hardcode a variable or run the raw SQL itself. {% parameter product_matches %}- Similarly, product_matches allows an end user to specify how many recommendations/matches they want returned instead of this being pinned to a hard value.

The output of this search is our matched products, up to the amount we requested, as well as a distance field. Distance is returned as a part of the Approximate Nearest Neighbor method that VECTOR_SEARCH uses, and can be used as a scoring metric to rank the closest matches.

So what does this look like from an end user standpoint? Well let’s walk through a few examples!

What are different ways I can use the block?

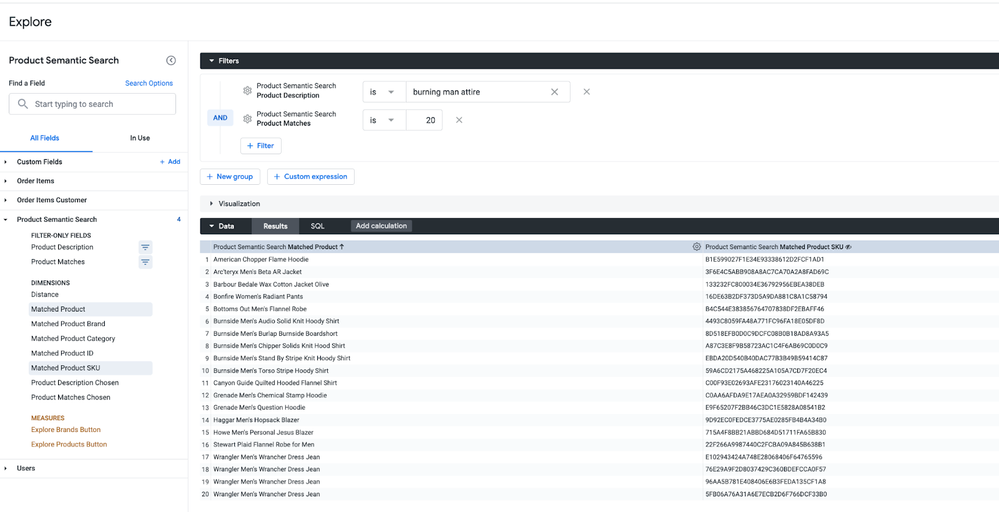

Example 1: Matched Product Segment Analysis

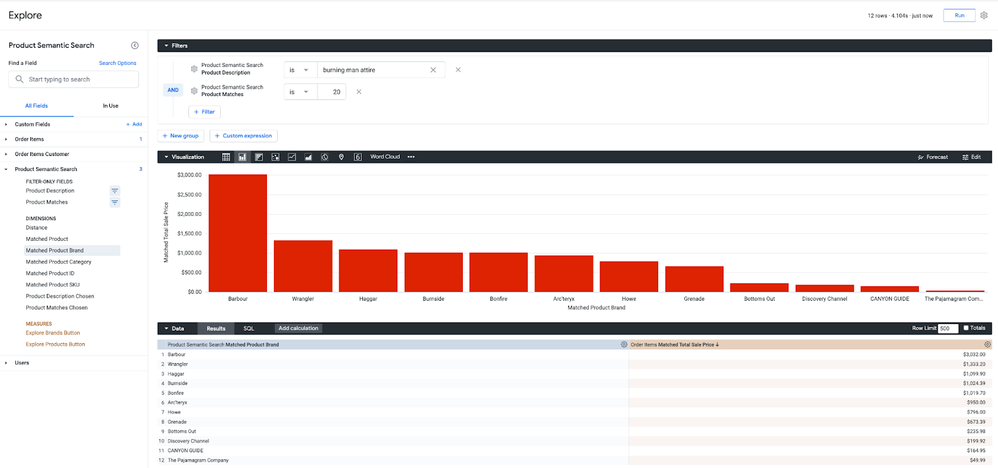

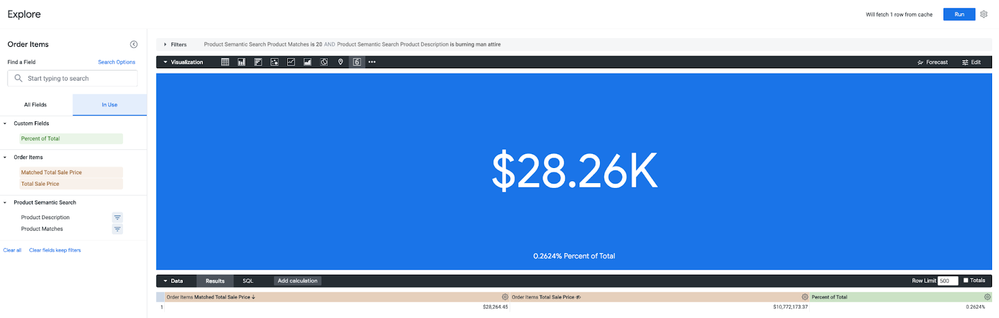

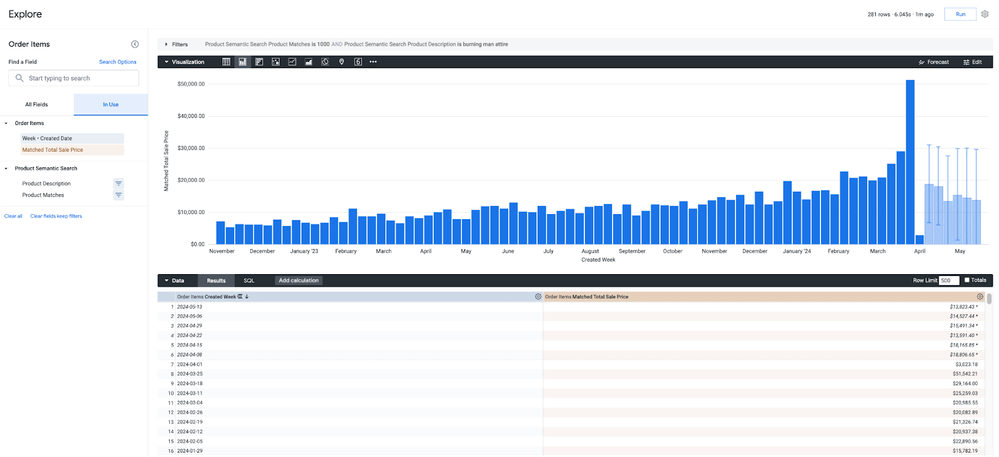

Starting with our search query “burning man attire” and number of matches “20” we can easily identify to the SKU level our top matched products:

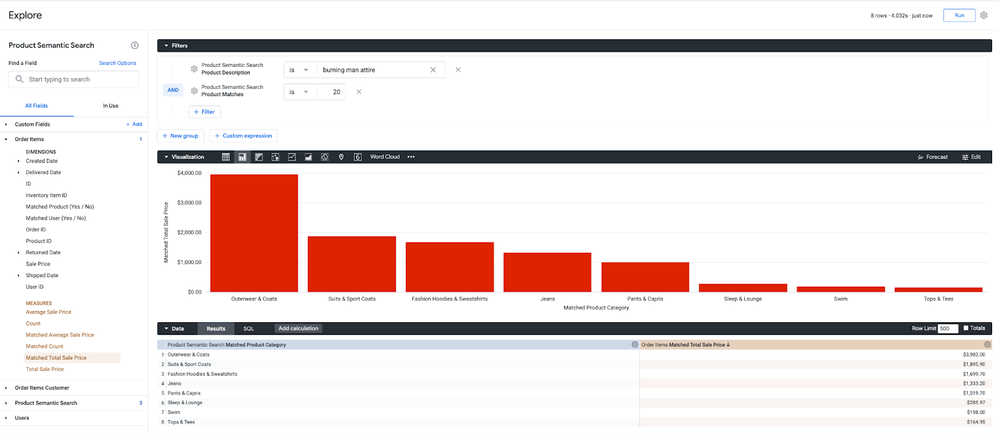

Where this gets more powerful, is joining this data back to our transactional ecommerce data. In Looker we can easily accomplish this join and begin to analyze this data as a standalone segment or as a percentage of total. For example, what categories perform well from a revenue standpoint based on the matched products?

How about our top performing brands in this segment?

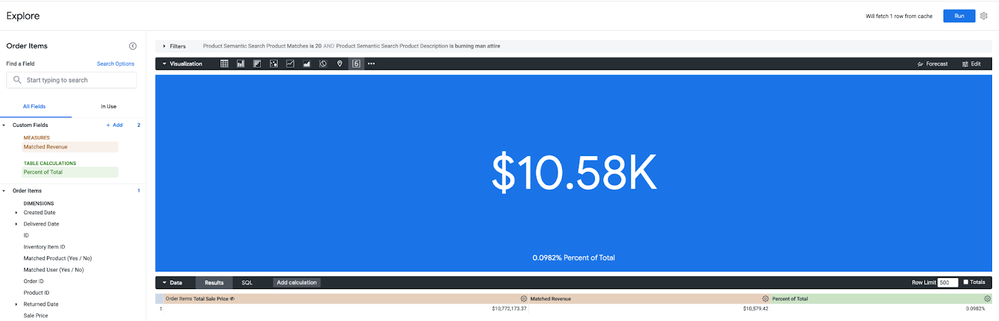

What about this segment of products as a percentage of total revenue?

Example 2: Audience Building

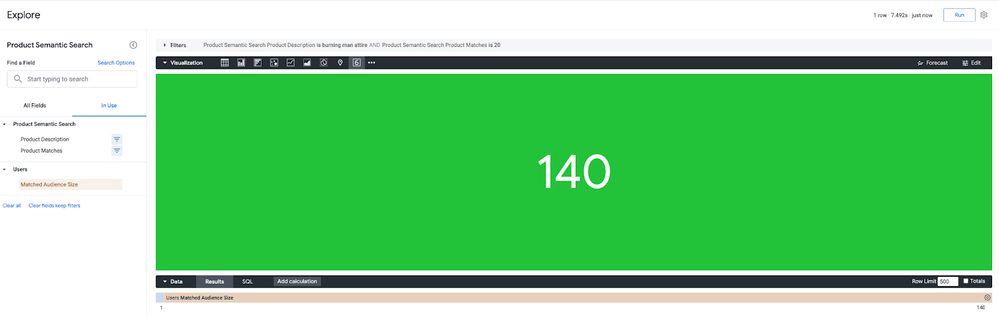

We just walked through joining the matched products back to our transactional data for analyzing product segments.. But what about customer segments? Well in bringing in our User table, paired with some smart filtering we can easily begin to analyze and segment customer audiences based on the same natural language search! Goodbye complex rule based and manual customer segmentation.

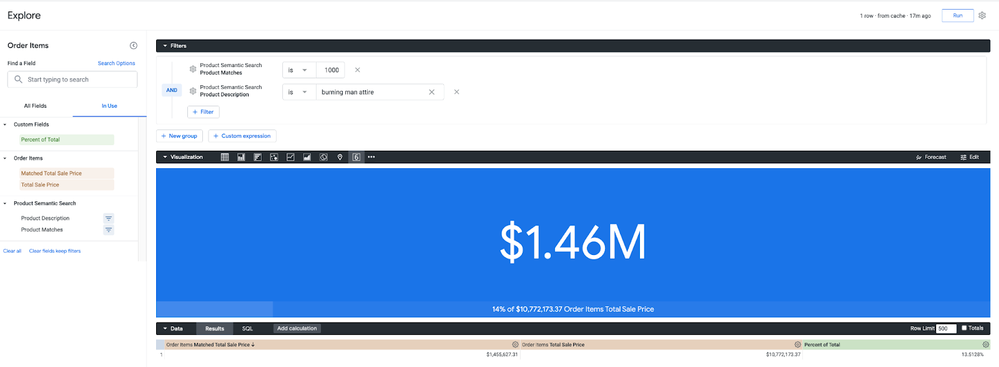

The first question we might ask is, how large is this audience segment?

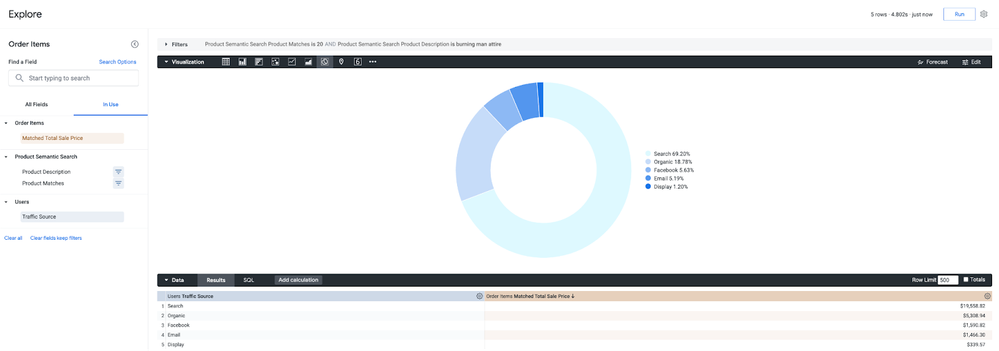

For this audience which Acquisition channels drive the most revenue?

How about this audience’s lifetime revenue as a percentage of total?

Ok, maybe that’s too small an audience.. What if we increase the audience size?

Ah, ok that’s better. Looks like they capture 14% of total revenue for our business. Is this audience valuable to target this season however as I know Burning Man happens in late August? What’s their expected revenue for the next 6 weeks?

- Building Enterprise-Ready Generative AI Applications with Vertex AI Vector Search

- Function calling: A native framework to connect Gemini to external systems, data, and APIs

- AI Face-Off: Vertex AI Search vs. Conversation

- Gen AI for Business Intelligence: Supercharge the way you consume data with Looker and Vertex AI

Twitter

Twitter