The Analytics capability in Apigee Edge is *really nice*. It's super valuable to be able to see so many statistics for all the API calls flowing through Edge for you.

But sometimes Analytics shows things that look surprising. Here's a puzzle for you: When can the Average Total Response Time be less than the Average Target Response Time?

Some definitions are in order. The Total Response time is the total time for a single call into the proxy to return. The Average of that quantity is just an aggregate over all calls into the Edge proxy: tally up the total response times of all the API requests sent by all the apps on all the various devices out there, divide by the number of requests, and you have the Average Total Response time.

The Target Response time is similar, except it quantifies the response time observed for the target, in other words the backend system: the system *behind* the proxy.

And the Average of that quantity is, likewise, just an aggregate over all calls that get returned by the target.

Now, clearly you can see that for any given call, the Total Response time will be greater than the Target Response time. Apigee Edge is pretty cool, but one thing it does not do is violate the laws of physics, or exceed the speed of light. Sometimes the policies in an Edge proxy run very quickly, in fewer than 10 ms total. But that number is always greater than zero. Edge takes *some time* to do its work. Total Response time = Target Response time + time spent in Edge. Therefore, Total Response time will always be greater than Target Response time. Right?

The question is: Can it ever be possible that the Average Total Response Time is less than the Average Target Response Time? If, for every call, the Total Response time is greater than the Target Response time, then the averages ought to exhibit a similar relationship, right?

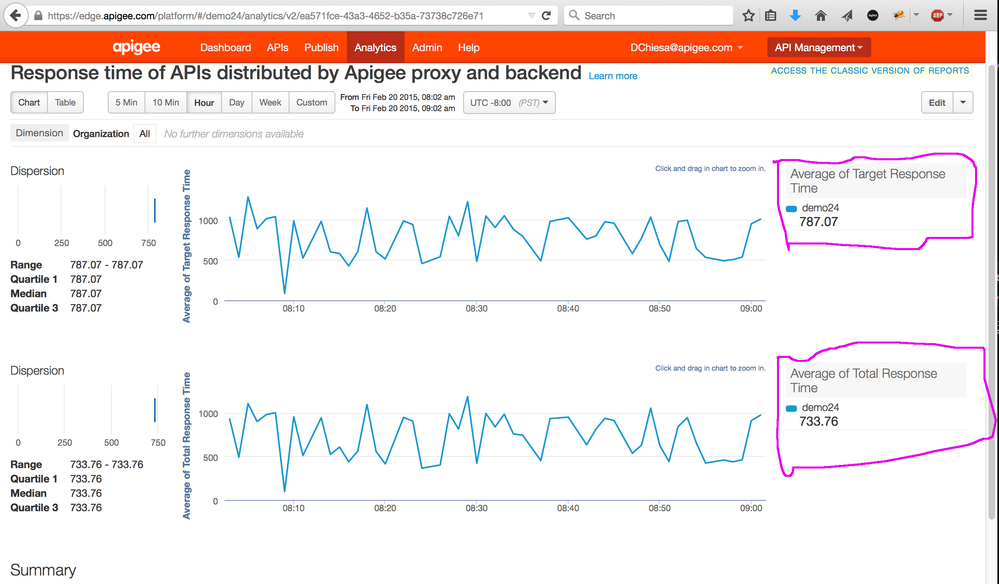

Well, check this out:

This shows clearly that the Average Total Response time is LESS than the Average Target Response time. How is this possible?

Here's how:

Not all calls that are processed by Edge flow through to the backend system. Some calls are handled entirely by Edge itself. For example, any API call that is satisfied from cache will not invoke the backend target system. An API call that issues or revokes an OAuth token likewise does not connect to a backend target system.

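Also, it stands to reason that the calls into Edge that do not connect to a backend system will complete more quickly than the calls that DO connect to a backend system. These "faster" calls count toward the Average Total Response time but not toward the Average Target Response time, so they pull the Average Total Response time down while leaving the Average Target Response time unchanged.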
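To make the arithmetic concrete, here is a minimal sketch in Python. The `Call` record, the two helper functions, and all the timings are hypothetical and made up for illustration; they are not Apigee's analytics schema or the numbers behind the chart above. The only rule taken from this article is that the target average counts only the calls that actually reach the target.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Call:
    """One hypothetical API call as seen by the proxy."""
    total_ms: float             # Total Response time for this call
    target_ms: Optional[float]  # Target Response time, or None if no target was called


def average_total(calls: List[Call]) -> float:
    # Every call into the proxy counts toward this average.
    return sum(c.total_ms for c in calls) / len(calls)


def average_target(calls: List[Call]) -> float:
    # Only calls that actually reached the backend count toward this average.
    reached = [c.target_ms for c in calls if c.target_ms is not None]
    return sum(reached) / len(reached)


# Made-up sample traffic (illustrative only).
calls = [
    # Proxied to the backend: total = target time + 10 ms spent in Edge.
    Call(total_ms=210.0, target_ms=200.0),
    Call(total_ms=310.0, target_ms=300.0),
    # Handled entirely in Edge (e.g. cache hit, OAuth token issued): no target call.
    Call(total_ms=5.0, target_ms=None),
    Call(total_ms=5.0, target_ms=None),
]

# For every call that reached the target, total time exceeds target time...
assert all(c.total_ms > c.target_ms for c in calls if c.target_ms is not None)

# ...yet the averages come out the other way around.
print(f"Average Total Response Time:  {average_total(calls):.1f} ms")   # 132.5 ms
print(f"Average Target Response Time: {average_target(calls):.1f} ms")  # 250.0 ms
```

Each proxied call spends 10 ms in Edge, but the two Edge-only calls (5 ms each) are divided into the total average and not into the target average: 530 / 4 = 132.5 ms versus 500 / 2 = 250.0 ms.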

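The mystery dissolves when we acknowledge the unfounded assumption: that Total Response time is always greater than Target Response time. That is true when there is a call to the target. When there is no target call at all, there is no Target Response time to compare against, yet the call still counts toward the Average Total Response time. The two averages are computed over different sets of calls.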
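The same argument can be sketched in symbols. The notation is introduced here for illustration and is not Apigee's: N calls come into the proxy (the set C), M of them reach the target (the subset S), T_i is the Total Response time of call i, B_i is its Target Response time (defined only for calls in S), and E_i = T_i - B_i > 0 is the time that call spends in Edge.

```latex
% Averages over different sets of calls (note the denominators)
\bar{T} = \frac{1}{N}\sum_{i \in C} T_i,
\qquad
\bar{B} = \frac{1}{M}\sum_{i \in S} B_i

% Split the total average into calls that reach the target and calls that do not;
% \bar{E}_S = average Edge time over the calls in S,
% \bar{T}_{C \setminus S} = average Total Response time of calls that never reach the target
\bar{T} = \frac{M}{N}\left(\bar{B} + \bar{E}_S\right) + \frac{N - M}{N}\,\bar{T}_{C \setminus S}

% Rearranging (for M < N): the total average falls below the target average exactly when
\bar{T} < \bar{B} \iff \bar{T}_{C \setminus S} + \frac{M}{N - M}\,\bar{E}_S < \bar{B}
```

If every call reaches the target (M = N), the second term of the split vanishes and the Average Total Response time exceeds the Average Target Response time by the average Edge time. Once some calls are answered inside Edge, and those calls are fast enough, the last condition holds and the ordering flips.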

Problem solved.

So if you see a chart that shows your Average Target Response time is higher than your Average Total Response time, now you know how it is possible.

Thanks to Oscar Ponce @oponce@apigee.com for explaining this to me!

---

Interesting! Great write-up. I think you could make the case that the target response time for in-proxy-only responses should be zero, but that could have the consequence of skewing the target time downwards, which may also be misleading.

---

We have a Node.js target with asynchronous calls being made to the backend system. The reports indicate that total response time is less than target response time.

---

Yes. Are there any calls that do not proceed to the (Node.js) target? If so, that would fit the explanation I provided above.
